Understanding the Scope and Purpose of ISO 42001 Annex C

Annex C: A Cornerstone of the AI Management System

Annex C of ISO/IEC 42001 is a cornerstone of the AI Management System (AIMS), offering a structured approach for organisations to align their AI systems with ethical, secure, and effective management practices. It is designed to guide organisations in identifying AI-related organisational objectives, such as accountability (Requirement 5.1), AI expertise, and data quality (A.7.4), and in managing risk sources such as environmental complexity and technology readiness (C.3.1 and C.3.7).

Integration with ISO 42001 Standard

This annex is seamlessly integrated into the broader ISO 42001 standard, complementing the standard’s requirements by providing detailed insights into the objectives and risks unique to AI systems. This integration facilitates a comprehensive risk management strategy, ensuring that organisations can address the multifaceted challenges posed by AI technologies, including ethical dilemmas and security threats (B.5.2).

Critical Role in AI Implementation

For organisations implementing AI systems, Annex C is indispensable, offering a clear roadmap for fostering trustworthiness in AI systems. By adhering to the guidelines within Annex C, organisations ensure their AI systems are reliable, responsible, and aligned with societal values, thus addressing the objectives and risk sources outlined in C.2.1, C.2.2, and C.2.10.

ISMS.online Support for Compliance

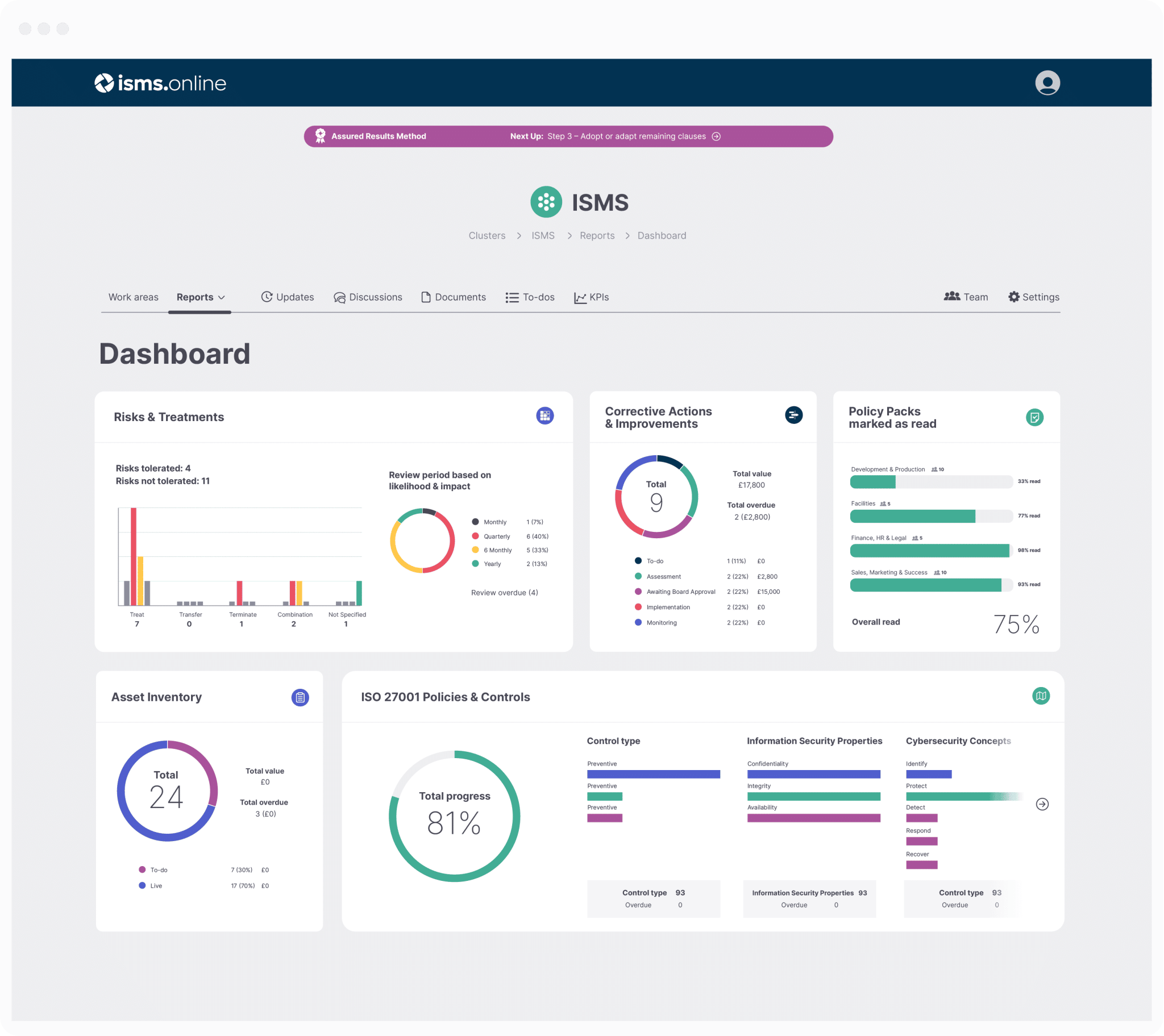

ISMS.online is a robust platform that supports organisations in achieving compliance with Annex C requirements. It provides essential tools for risk assessment, policy management, and documentation control, all crucial for meeting the standard's objectives. With its comprehensive suite of features, ISMS.online enables organisations to manage their AI systems effectively, ensuring continuous improvement and alignment with the evolving landscape of AI governance, as suggested by the guidance in Annex D for using the AI management system across various domains or sectors.

Objectives of ISO 42001 Annex C

Accountability in AI Systems

In accordance with Annex C of ISO 42001, organisations are required to establish transparent and traceable decision-making processes to ensure accountability in AI systems (C.2.1). This involves the creation of clear mechanisms to attribute responsibility for AI behaviours and outcomes, which must be documented as part of the AI management system (Requirement 5.1). The AI system impact assessment process, as outlined in A.5.2, is a critical control that supports accountability by evaluating potential consequences and documenting these assessments. Furthermore, roles and responsibilities related to AI must be clearly defined and communicated within the organisation (B.3.2).

AI Expertise Expectations

Organisations are expected to develop a strong foundation of AI expertise, encompassing both technical skills and an understanding of the ethical implications and risk management associated with AI systems (C.2.2). This expertise is essential for the responsible design of AI systems and must align with the organisation’s competence requirements as per Requirement 7.2. The quality of data for AI systems is a key focus area, and organisations must ensure that competencies related to data management and quality are developed in accordance with A.7.4. The implementation guidance provided in B.4.6 can assist organisations in identifying and documenting the necessary human resources and their competencies for AI system development and operation.

Training and Test Data Quality

The quality and integrity of training and test data are fundamental to the reliability of AI systems, as recognised in Annex C (C.2.3). Organisations must ensure that data handling processes are transparent and meet established quality standards, in line with A.7.2. This includes ensuring that data is representative, unbiased, and of high integrity. The general documented information requirement (7.5.1) emphasises the need for organisations to maintain accurate and controlled documentation of data management processes, which is further supported by the implementation guidance on data quality (B.7.4).

Minimising Environmental Impact

Annex C of ISO 42001 advocates for sustainable practices in AI system development and deployment to minimise environmental impact (C.2.4). Organisations are encouraged to use resources and energy efficiently and to consider the environmental footprint of the AI system across its lifecycle. This aligns with the control related to system and computing resources (A.4.5) and is supported by the general actions to address risks and opportunities (Requirement 6.1). Guidance on documenting system and computing resources with environmental considerations is provided in B.4.5, which organisations can use to integrate environmental sustainability into their AI management system.

By adhering to these detailed objectives and leveraging platforms like ISMS.online, organisations can ensure a responsible approach to AI system management, effectively addressing key areas such as accountability, expertise, data quality, and environmental impact.

Risk in AI Environments as Identified by ISO 42001 Annex C

Environmental Complexity and AI

In the dynamic and unpredictable operational settings where AI systems are deployed, the complexity of the environment is recognised as a significant risk source (C.3.1). Organisations are encouraged to adopt comprehensive risk management strategies focusing on actions to address risks and opportunities, as outlined in Requirement 6.1. This includes considering the external and internal issues that are relevant to the organisation’s purpose and that affect its ability to achieve the intended outcomes of its AI management system (Requirement 4.1). The implementation guidance provided in B.6.7 further specifies the need for documenting requirements that consider the complexity of the environment.

Transparency and Explainability Challenges

Annex C emphasises the importance of transparency and explainability in AI systems, highlighting the need for stakeholders to understand AI decision-making processes (C.3.2). This aligns with A.8.2, which mandates system documentation and information for users, ensuring that AI systems are accessible and interpretable. Organisations are tasked with fostering trust and accountability by making the rationale behind AI decisions clear and understandable, as supported by the implementation guidance in B.8.2.

Automation Level Risks

The risks associated with varying levels of automation in AI systems are addressed in Annex C, underscoring the importance of maintaining human oversight to prevent over-reliance on automated processes (C.3.3). This is in line with A.9.2, which calls for processes that ensure the responsible use of AI systems and maintain ethical standards and safety. The implementation guidance in B.9.2 provides direction for defining and documenting processes that manage the risks of automation, ensuring that automation does not compromise the organisation’s commitment to responsible AI use.
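One way to picture human oversight at different automation levels is a simple routing rule that sends low-confidence outputs to a person. The sketch below is illustrative only: the function name, the `threshold` value, and the idea of using a model confidence score are assumptions, not something ISO 42001 prescribes.

```python
def route_decision(confidence: float, threshold: float = 0.9) -> str:
    """Decide whether an AI output may be acted on automatically.

    `threshold` is a hypothetical policy value; each organisation would
    set and document its own as part of its responsible-use processes.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    # Outputs below the threshold are escalated to a human reviewer.
    return "automated" if confidence >= threshold else "human_review"


high = route_decision(0.95)
low = route_decision(0.50)
```

Lowering the threshold increases automation and reduces oversight, so the chosen value is itself a risk decision worth documenting.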

Machine Learning-Specific Risk Sources

Machine learning-specific risk sources, such as data quality issues, algorithmic biases, and model robustness, are detailed in Annex C (C.3.4). These risks are addressed through controls in Annex A, particularly A.7.4, which emphasises the importance of high-quality data for AI systems. Ensuring that machine learning models are built on solid, unbiased data foundations is crucial for their resilience to evolving threats and challenges. The implementation guidance in B.7.4 provides organisations with direction for defining data quality requirements and ensuring that data used in AI systems meets these standards.

Annex C’s Influence on AI Risk Management

Informing the Risk Management Process

Annex C of ISO 42001 critically informs the risk management process for AI systems, providing a structured approach to identifying and analysing potential AI-related risks. By considering objectives such as C.2.1 Accountability and C.2.10 Security, organisations can ensure a comprehensive evaluation of AI-related risks, aligning with Requirement 6.1 on addressing risks and opportunities.

Significance in AI Risk Assessments

The incorporation of Annex C objectives into AI risk assessments ensures a holistic consideration of AI risks, including ethical, societal, and technical aspects. This approach is vital for the responsible deployment of AI systems and aligns with A.5.2, which mandates an AI system impact assessment process, reinforcing the significance of Requirement 6.1.2 in AI risk assessments.

Guiding the AI Risk Treatment Process

Annex C supports the AI risk treatment process by helping organisations link identified risk sources to appropriate controls and measures, such as those listed in Annex A. This helps organisations prioritise risks and select effective treatment options, aligning with Requirement 6.1.3 on AI risk treatment.
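Prioritising risks before choosing treatments can be sketched as a small risk register. This is a minimal illustration under stated assumptions: the `Risk` fields, the 1 to 5 scales, and the likelihood times impact score are a common convention rather than anything defined by ISO 42001, and the example entries are invented.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    source: str        # Annex C risk source label, e.g. "C.3.4"
    description: str
    likelihood: int    # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int        # hypothetical scale: 1 (minor) to 5 (severe)
    treatment: str = "untreated"

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring (an assumed convention).
        return self.likelihood * self.impact


def prioritise(risks: list[Risk]) -> list[Risk]:
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


register = [
    Risk("C.3.1", "Deployment environment changes unpredictably", 3, 4),
    Risk("C.3.4", "Training data drifts from production data", 4, 5),
    Risk("C.3.3", "Operators over-rely on automated output", 2, 3),
]
ranked = prioritise(register)
```

Here `ranked[0]` is the machine learning risk, which would be the first candidate for a treatment plan.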

ISMS.online’s Role in Risk Management Alignment

ISMS.online facilitates alignment with Annex C’s risk management guidelines through its comprehensive suite of tools designed for risk assessment and treatment. The platform’s features enable organisations to document, manage, and monitor the implementation of risk treatment plans, ensuring continuous alignment with Requirement 6.1.3 and the specific guidance provided in Annex C, as well as supporting the objectives for responsible development of AI systems outlined in B.6.1.2.

Harmonising Annex C with Other ISO 42001 Components

Correlation with Annex A Objectives and Controls

Annex C’s focus on AI-specific considerations is designed to enhance the control objectives and controls found in Annex A. For instance, AI risk treatment (Requirement 6.1.3), which draws on the Annex A controls, is informed by Annex C’s discussion of AI risk sources. Additionally, controls such as A.2.2 on AI policy and A.3.2 on AI roles and responsibilities are underpinned by the strategic insights provided in Annex C, ensuring that the AI management system is comprehensive and addresses the nuances of AI risks.

Synchronisation with Annex B Implementation Guidance

The practical application of Annex C is closely tied to the implementation guidance provided in Annex B. While Annex B offers the ‘how-to’ of AI controls, such as B.6.2.3 on documenting AI system design and development, Annex C provides the ‘why’, detailing the objectives behind these controls and offering an expanded perspective on their application within AI systems. This synchronisation ensures that the AI management system is not only compliant with the requirements of ISO 42001 but also rooted in a clear understanding of the purpose and rationale behind each control.

Complementing Sector-Specific Guidance in Annex D

Annex C is designed to work in tandem with the sector-specific guidance found in Annex D. It addresses the distinct objectives and risks associated with AI in various domains, such as D.1’s mention of healthcare and finance, ensuring that the AI management system is versatile and tailored to the unique challenges of different sectors. This complementary relationship enhances the system’s relevance and efficacy across a wide range of industries.

Streamlining Integration with ISMS.online

The integration of Annex C with other components of ISO 42001 is facilitated by platforms like ISMS.online, which provide a unified solution that aligns with the standard’s structure. This platform aids in the documentation, implementation, and monitoring of the AI management system, harmonising the objectives and controls across all annexes, such as incorporating C.2.10’s focus on security into the platform’s security management features. Such integration promotes a unified approach to AI governance, risk, and compliance, customised to the specific needs of an organisation.

Enhancing Accountability and Expertise in AI Management

Mechanisms for Accountability in AI Systems

In accordance with C.2.1, establishing robust accountability within AI systems is paramount. This involves the meticulous documentation of roles and decision-making processes, as mandated by A.3.2, and the creation of governance structures to oversee AI operations. Such structures ensure traceability of actions back to responsible parties, a principle that is further reinforced by A.5.2 which calls for a comprehensive AI system impact assessment process. This process, detailed in B.5.2, is designed to evaluate the potential consequences of AI systems on individuals and society, thereby enhancing accountability.
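Traceability of actions back to responsible parties can be sketched as an append-only decision log. The schema below is hypothetical: the class names, fields, and example roles are assumptions chosen for illustration and do not come from the standard or from any particular platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One traceable AI-related decision (hypothetical schema)."""
    system: str
    decision: str
    owner_role: str   # the role accountable for the decision
    rationale: str
    timestamp: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class DecisionLog:
    """Append-only log so each decision can be traced to a role."""

    def __init__(self) -> None:
        self._records: list[DecisionRecord] = []

    def record(self, system: str, decision: str,
               owner_role: str, rationale: str) -> DecisionRecord:
        # Refuse entries that nobody is accountable for.
        if not owner_role.strip():
            raise ValueError("every decision needs an accountable role")
        entry = DecisionRecord(system, decision, owner_role, rationale)
        self._records.append(entry)
        return entry

    def by_role(self, owner_role: str) -> list[DecisionRecord]:
        return [r for r in self._records if r.owner_role == owner_role]


log = DecisionLog()
log.record("credit-scoring", "approve model v2 for release",
           "AI Risk Owner", "impact assessment reviewed")
log.record("credit-scoring", "retire model v1",
           "Head of Data Science", "superseded by v2")
```

Rejecting entries without an owner is the design choice that matters here: it makes unattributed decisions impossible to record rather than merely discouraged.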

Building and Maintaining AI Expertise

To cultivate AI expertise, C.2.2 suggests that organisations should invest in continuous learning and professional development, covering both the technical and ethical dimensions of AI. This aligns with A.7.6, which emphasises the significance of data preparation, a critical aspect of AI expertise. As per B.7.6, defining criteria for data preparation is essential, ensuring that personnel are equipped with the skills necessary to manage AI data effectively. This commitment to interdisciplinary knowledge and continuous improvement is crucial for maintaining a high standard of AI governance.

Role of Continuous Learning

The role of continuous learning is pivotal in achieving the objectives set forth in C.2.2. It ensures that personnel remain informed about the latest AI advancements and ethical standards, thereby enhancing the organisation’s AI management system. This ongoing education is crucial for adapting to the rapidly evolving AI landscape and maintaining a competitive edge.

Using ISMS.online for AI Management

Organisations can utilise ISMS.online to effectively enhance accountability and AI expertise. The platform offers tools for documenting AI management processes, assigning roles and responsibilities in line with A.3.2, and tracking compliance with ISO 42001 standards. Its comprehensive suite of resources supports the implementation of continuous learning strategies, aligning with C.2.2 and facilitating adherence to high standards of AI governance. Moreover, ISMS.online’s capabilities can be applied across various domains, as suggested in D.1 and D.2, providing a versatile solution for organisations seeking to align with Annex C’s objectives and improve their AI management systems.

Managing Data Quality and Environmental Impact in AI Systems

Ensuring High-Quality Data in AI

To ensure AI decisions are reliable, organisations must manage the quality of data used in AI systems, aligning with Requirement 6.1 by addressing risks and opportunities related to data quality. Annex C.2.3 emphasises the need for high-quality training and test data, which is supported by Control A.7.4, under which organisations establish data quality criteria. Guidance B.7.4 provides further details on ensuring data quality, such as defining data quality metrics and validation procedures. Organisations should also control and maintain data quality documentation as per Requirement 7.5.
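Data quality metrics and validation procedures can be made concrete with a small check. The sketch below assumes two illustrative metrics, completeness and duplicate rate, and hypothetical acceptance thresholds; the standard does not define specific metrics or values.

```python
def data_quality_report(rows: list[dict], required_fields: list[str]) -> dict:
    """Compute two illustrative metrics over a list of records."""
    total = len(rows)
    if total == 0:
        raise ValueError("cannot assess an empty dataset")
    # A row is complete when every required field has a non-empty value.
    complete = sum(
        1 for row in rows
        if all(row.get(f) not in (None, "") for f in required_fields)
    )
    # Rows with identical values in the required fields count as duplicates.
    unique = len({tuple(row.get(f) for f in required_fields) for row in rows})
    return {
        "completeness": complete / total,
        "duplicate_rate": 1 - unique / total,
    }


def meets_criteria(report: dict, min_completeness: float = 0.95,
                   max_duplicate_rate: float = 0.01) -> bool:
    # Threshold defaults are hypothetical acceptance criteria.
    return (report["completeness"] >= min_completeness
            and report["duplicate_rate"] <= max_duplicate_rate)


sample = [
    {"age": 30, "label": "a"},
    {"age": 30, "label": "a"},    # duplicate of the first row
    {"age": None, "label": "b"},  # missing value
    {"age": 41, "label": "b"},
]
report = data_quality_report(sample, ["age", "label"])
```

For this sample, `meets_criteria(report)` is false, which would block the dataset from use until the issues are resolved or the criteria are consciously revised.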

Strategies for Reducing AI’s Environmental Impact

Addressing the environmental impact of AI, Annex C.2.4 recommends strategies like optimising AI operations’ energy efficiency. These strategies are supported by Control A.4.5, which pertains to system and computing resources, encouraging organisations to manage these resources responsibly. Guidance B.4.5 offers further insights into documenting system and computing resources, including environmental considerations.
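A rough estimate of energy use is often the first step in documenting computing resources with environmental considerations. The following sketch uses the standard relation energy = power x time; the function names and all input figures are assumptions for illustration, and real values would come from measured power draw and the local grid's published carbon intensity.

```python
def estimate_energy_kwh(avg_power_watts: float, hours: float,
                        device_count: int = 1) -> float:
    """Energy in kWh = watts x hours x devices / 1000."""
    if min(avg_power_watts, hours, device_count) < 0:
        raise ValueError("inputs must be non-negative")
    return avg_power_watts * hours * device_count / 1000.0


def estimate_emissions_kg(energy_kwh: float,
                          grid_intensity_kg_per_kwh: float) -> float:
    """Emissions in kg CO2e for a given grid carbon intensity."""
    return energy_kwh * grid_intensity_kg_per_kwh


# Hypothetical training run: 4 accelerators at 300 W for 10 hours.
training_kwh = estimate_energy_kwh(300, 10, device_count=4)
# 0.5 kg CO2e per kWh is a placeholder, not a real grid figure.
training_kg = estimate_emissions_kg(training_kwh, 0.5)
```

The estimate ignores cooling and other data-centre overheads, so it should be read as a lower bound.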

Upholding Fairness in AI Systems

Annex C.2.5 underscores the importance of fairness in AI systems, advocating for measures to detect and mitigate biases. This commitment to fairness is reflected in Control A.5.4, which requires an assessment of AI system impact on individuals or groups, ensuring AI systems operate equitably and without discrimination.
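One simple way to detect bias is to compare the rate of favourable outcomes across groups. The sketch below computes the demographic parity difference, which is one of several possible fairness measures and is not named in ISO 42001; the function names and the sample data are assumptions.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of favourable outcomes per group.

    `outcomes` holds (group, favourable) pairs.
    """
    totals: dict[str, int] = defaultdict(int)
    favourable: dict[str, int] = defaultdict(int)
    for group, ok in outcomes:
        totals[group] += 1
        favourable[group] += int(ok)
    return {g: favourable[g] / totals[g] for g in totals}


def demographic_parity_difference(outcomes: list[tuple[str, bool]]) -> float:
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(outcomes)
    return max(rates.values()) - min(rates.values())


decisions = [
    ("A", True), ("A", True), ("A", False), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
gap = demographic_parity_difference(decisions)
```

A gap of zero means every group receives favourable outcomes at the same rate; what size of gap is acceptable is a policy question for the impact assessment, not something the metric answers.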

Leveraging ISMS.online for Effective Management

ISMS.online offers a suite of tools that align with the objectives of Annex C, aiding organisations in managing data quality and environmental impact. The platform’s features facilitate the documentation of data management processes, the assessment of environmental impacts, and the implementation of fairness measures, ensuring that organisations can effectively meet the standards set forth in ISO 42001 Annex C.

Ensuring Fairness, Maintainability, and Privacy in AI Systems

Fairness in AI Systems as Mandated by Annex C

Annex C of ISO 42001 emphasises fairness in AI systems, calling for systems that operate without bias and deliver equitable outcomes for all user groups. This is in line with A.5.4, which necessitates AI system impact assessments to prevent discrimination against any user or group. Fairness is a core objective (C.2.5) and is further supported by implementation guidance on assessing impacts on individuals or groups (B.5.4).

Maintainability of AI Systems

Annex C underscores the importance of designing AI systems with foresight for future updates and improvements, ensuring long-term functionality and effectiveness. This objective of maintainability (C.2.6) is supported by A.6.2.6, which focuses on AI system operation and monitoring, highlighting the significance of regular maintenance and updates. The guidance provided in B.6.2.6 aids in defining and documenting elements necessary for ongoing AI system operation.

Privacy Considerations in AI Systems

Annex C provides comprehensive privacy considerations, requiring AI systems to protect personal and sensitive data in line with data protection laws. This aligns with the information security focus of ISO 27001 and A.5.4, which calls for privacy impact assessments. The privacy objective (C.2.7) is reinforced by guidance on conducting privacy impact assessments as part of the AI system impact assessment process (B.5.4).

Utilising ISMS.online for AI System Management

ISMS.online offers a robust platform for organisations to manage fairness, maintainability, and privacy in AI systems, aligning with Annex C requirements. The platform provides tools for documenting processes, assessing impacts, and managing data protection measures. The integration of AI management practices with standards like ISO 27001 for information security is demonstrated by D.2, showcasing ISMS.online’s capabilities in the broader context of AI management across various domains and sectors.

Ensuring Robustness, Safety, and Security in AI Systems

Defining Robustness in AI Systems

Robustness in AI systems is essential for maintaining performance levels amidst environmental changes or uncertainties, as highlighted in C.2.8. This robustness includes resilience to attacks, anomalies, and operational variances, which is why A.6.2.4 is critical, ensuring systems are fortified against identified risks. The implementation guidance in B.6.2.4 further emphasises the need for robustness by providing detailed processes for verification and validation.

Safety Requirements in AI Systems

Safety in AI systems, as mandated by C.2.9, ensures they operate without causing harm to users or the environment. This safety is achieved through rigorous testing and validation, in line with A.6.2.4, which calls for AI system verification and validation to confirm safety standards are met before deployment. The implementation guidance in B.6.2.4 provides the necessary steps to ensure these safety requirements are thoroughly met.

Aligning with ISO 27001 for AI System Security

Security in AI systems, in alignment with ISO 27001, requires protection against unauthorised access, data breaches, and other cyber threats, as stated in C.2.10. This is where A.5.4 becomes crucial, mandating privacy and security impact assessments to identify vulnerabilities within AI systems. The corresponding implementation guidance in B.5.4 provides the necessary steps for conducting these assessments, ensuring comprehensive security measures are in place.

Achieving Compliance with ISMS.online

ISMS.online supports organisations in achieving robustness, safety, and security in AI systems by providing a comprehensive platform for managing compliance with ISO 42001. The platform’s features enable systematic documentation, risk assessments, and the implementation of security controls, ensuring that AI systems are robust, safe, and secure in accordance with Annex C’s directives and the broader framework of Annex D.

Transparency and Explainability in AI Systems

Impact on AI System Design and Deployment

Incorporating transparency and explainability into AI system design and deployment is mandated by Requirement 4.1, which necessitates a clear understanding of the organisation and its context. This includes:

- Documenting AI algorithms and data usage as per A.7.5, ensuring data provenance is traceable.

- Developing user-friendly interfaces that align with A.8.2, providing system documentation and information to users.

- Implementing mechanisms for users to query and receive explanations about AI outputs, supporting C.2.11’s emphasis on transparency and explainability.
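A mechanism for explaining an AI output can be as simple as reporting how much each input contributed to a score. The sketch below assumes a linear scoring model, where each contribution is exactly weight times feature value; the function name, weights, and features are hypothetical, and more complex models would need a dedicated explanation method.

```python
def explain_linear_score(weights: dict[str, float],
                         features: dict[str, float],
                         bias: float = 0.0):
    """Return the score and per-feature contributions, largest first."""
    # For a linear model, contribution = weight x feature value.
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(),
                    key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical model and applicant data.
score, reasons = explain_linear_score(
    weights={"income": 0.5, "debt": -1.0},
    features={"income": 4.0, "debt": 1.0},
)
```

The ranked list can be shown to a user as "income raised the score most, debt lowered it", which is the kind of plain-language explanation the bullet above describes.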

Challenges in Achieving Transparency and Explainability

Organisations face challenges such as:

- Complex AI algorithms, which Requirement 7.5 addresses by requiring controlled documented information.

- Balancing transparency with the protection of proprietary information, a concern highlighted in C.3.2.

- Making explanations accessible to non-technical stakeholders, necessitating competence as outlined in Requirement 7.2.

Using Tools and Frameworks

To overcome these challenges, organisations can leverage:

- AI documentation frameworks, ensuring standardised presentation of AI system information as guided by B.7.6.

- Explainability interfaces, allowing user interaction with AI systems in a controlled environment to understand behaviour, aligning with A.8.5’s requirement for information for interested parties.

- Continuous training and education programmes, enhancing stakeholder understanding of AI technologies, which is crucial as per Requirement 7.2.

By adhering to ISO 42001’s Annex C principles and utilising appropriate tools and frameworks, organisations can ensure their AI systems are not only compliant but also trusted by users. Integrating AI management practices with other standards, as encouraged by D.2, further reinforces transparency and explainability across various domains and sectors.

Steps for Achieving ISO 42001 Annex C Compliance

Conducting a Gap Analysis for ISO 42001 Annex C

Organisations embarking on compliance with ISO 42001 Annex C should begin with a thorough gap analysis. This step involves a detailed comparison of existing AI management practices against the Annex C objectives and risk sources. The gap analysis, integral to the planning process addressed in Requirement 6, identifies areas for improvement and pinpoints specific controls, such as A.7.2 for data management, that are crucial for meeting the standard. It also highlights the importance of AI ethics and data governance, aligning with organisational objectives like C.2.5 for fairness and C.2.7 for privacy.
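At its simplest, a gap analysis is a set comparison between what is expected and what is already in place. The sketch below assumes the organisation tracks addressed objectives by their Annex C identifiers; the function name and the example sets are illustrative only.

```python
def gap_analysis(required: set[str], addressed: set[str]) -> dict:
    """Compare required items against those already addressed."""
    missing = sorted(required - addressed)
    # Treat an empty requirement set as fully covered.
    coverage = len(required & addressed) / len(required) if required else 1.0
    return {"missing": missing, "coverage": coverage}


# Hypothetical scope: four Annex C objectives, two already addressed.
required_objectives = {"C.2.1", "C.2.3", "C.2.5", "C.2.7"}
addressed_objectives = {"C.2.1", "C.2.5"}
result = gap_analysis(required_objectives, addressed_objectives)
```

The `missing` list becomes the starting backlog for remediation, while `coverage` gives a single figure to track between reviews.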

Ensuring Comprehensive Coverage

The gap analysis must encompass all aspects of the AI management system, including context, leadership, planning, support, operation, performance evaluation, and improvement, as outlined in Requirements 4 to 10. This ensures a holistic approach to compliance, addressing every facet of the standard.

Role of Internal Audits in Compliance

Internal audits are pivotal in the compliance journey, offering an objective assessment of the AI management system’s alignment with Annex C. As mandated by Requirement 9.2, these audits should encompass all relevant areas, such as risk management practices, AI system documentation, and impact assessments, ensuring thorough evaluation and adherence to controls like A.5.2 for AI system impact assessment.

The Effectiveness of the AI Management System

Internal audits must not only assess conformity with Annex C but also evaluate the effectiveness of the AI management system in achieving its intended outcomes, as per Requirement 9.1. This evaluation is crucial for ensuring that the system is not only compliant but also effective and efficient in its operation.

Leveraging ISMS.online for Compliance Preparation

ISMS.online is an invaluable tool for organisations preparing for ISO 42001 Annex C compliance. The platform’s structured approach to managing the AI lifecycle aligns with the standard’s requirements and Annex A controls, offering tools for risk assessment (Requirement 6.1.2), impact assessment (A.5.2), and documentation (Requirement 7.5). It facilitates the implementation of necessary changes identified during the gap analysis and ensures that internal audits are conducted effectively, contributing to a state of continuous readiness for compliance.

Integration with Other Management Systems

ISMS.online’s capabilities extend to integrating the AI management system with other management systems, beneficial for organisations operating across various domains or sectors, as highlighted in Annex D.2. This integration is essential for a unified approach to managing AI-related issues within the broader organisational context.

Continuous Improvement

The platform also supports continual improvement (Requirement 10.1), reflecting the dynamic nature of compliance and the evolution of the AI management system. Through ISMS.online, organisations can adapt to changes and enhance their AI management practices over time.

How ISMS.online Helps with ISO 42001 Annex C Compliance

Managing the AI Lifecycle with ISMS.online

ISMS.online provides a comprehensive solution for managing the AI lifecycle in accordance with ISO 42001 Annex C. The platform offers a suite of tools designed to support the establishment (Requirement 4.4), implementation, maintenance, and continual improvement (Requirement 10.1) of an AI management system. It aligns with Annex A controls, ensuring that your organisation’s AI systems are developed and managed responsibly.

Continuous Monitoring and Retraining Resources

For continuous monitoring and retraining of AI systems, ISMS.online offers:

- Automated workflows for regular system reviews and updates, aligning with A.6.2.6 for AI system operation and monitoring.

- Tracking features for monitoring AI system performance against established KPIs, as guided by B.6.2.6.

- Resources for retraining AI systems, ensuring they adapt to new data and evolving operational environments, in line with C.2.3 on the availability and quality of training and test data.
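Monitoring performance against KPIs and flagging a system for retraining review can be sketched as a threshold comparison. The function name, metric names, and minimum values below are assumptions for illustration and do not describe how ISMS.online or any other tool implements monitoring.

```python
def kpi_breaches(metrics: dict[str, float],
                 minimums: dict[str, float]) -> dict[str, float]:
    """Return the KPIs whose current value is below its minimum."""
    return {
        name: value
        for name, value in metrics.items()
        if name in minimums and value < minimums[name]
    }


# Hypothetical monitoring snapshot and documented minimums.
current = {"accuracy": 0.88, "recall": 0.93}
targets = {"accuracy": 0.90, "recall": 0.90}
breaches = kpi_breaches(current, targets)
needs_review = bool(breaches)
```

In this snapshot accuracy has fallen below its minimum, so `needs_review` is true and the system would be queued for a retraining decision.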

Documentation and Transparency Facilitation

ISMS.online facilitates thorough documentation and transparency in AI systems management by:

- Providing centralised document control for easy access and management of AI system records, supporting Requirement 7.5 on documented information.

- Enabling clear audit trails for AI decision-making processes, aligning with B.5.3 for documentation of AI system impact assessments.

- Offering features for stakeholder engagement and reporting, enhancing the transparency of AI operations, and addressing C.2.11 on transparency and explainability.

Choosing ISMS.online for Annex C Compliance

Choosing ISMS.online for your ISO 42001 Annex C compliance journey ensures that your organisation benefits from:

- A structured approach to AI risk management, with tools that align with Requirement 6.1.2 for AI risk assessment.

- A platform that supports the integration of AI management with other management system standards, as highlighted in Annex D.

- A user-friendly interface that simplifies the compliance process, making it accessible to all stakeholders involved in AI management.