Understanding the Purpose and Scope of ISO 42001 Annex D

Integration with ISO 42001

Annex D is integral to ISO 42001, providing a structured approach for organisations to manage AI systems responsibly across various sectors, ensuring accountability, transparency, and fairness. It aligns with Requirement 4.1 by guiding organisations in understanding their context and with Requirement 4.2 in identifying the needs and expectations of interested parties. Annex D also aids in determining the scope of the AI management system as per Requirement 4.3 and supports the establishment of the AI management system in accordance with Requirement 4.4. It offers sector-specific guidance, as outlined in D.1, and facilitates the integration of the AI management system with other management system standards, as suggested in D.2.

Improvement of AI Management Systems

Annex D promotes continuous improvement through the PDCA cycle, emphasising the importance of regular reviews and updates to AI management practices, aligning with Requirement 10.1. This iterative process ensures that AI systems remain aligned with evolving ethical standards and technological advancements. It also supports the operation and monitoring of AI systems as per B.6.2.6, and addresses the objectives of security (C.2.10) and transparency and explainability (C.2.11).

How ISMS.online Helps

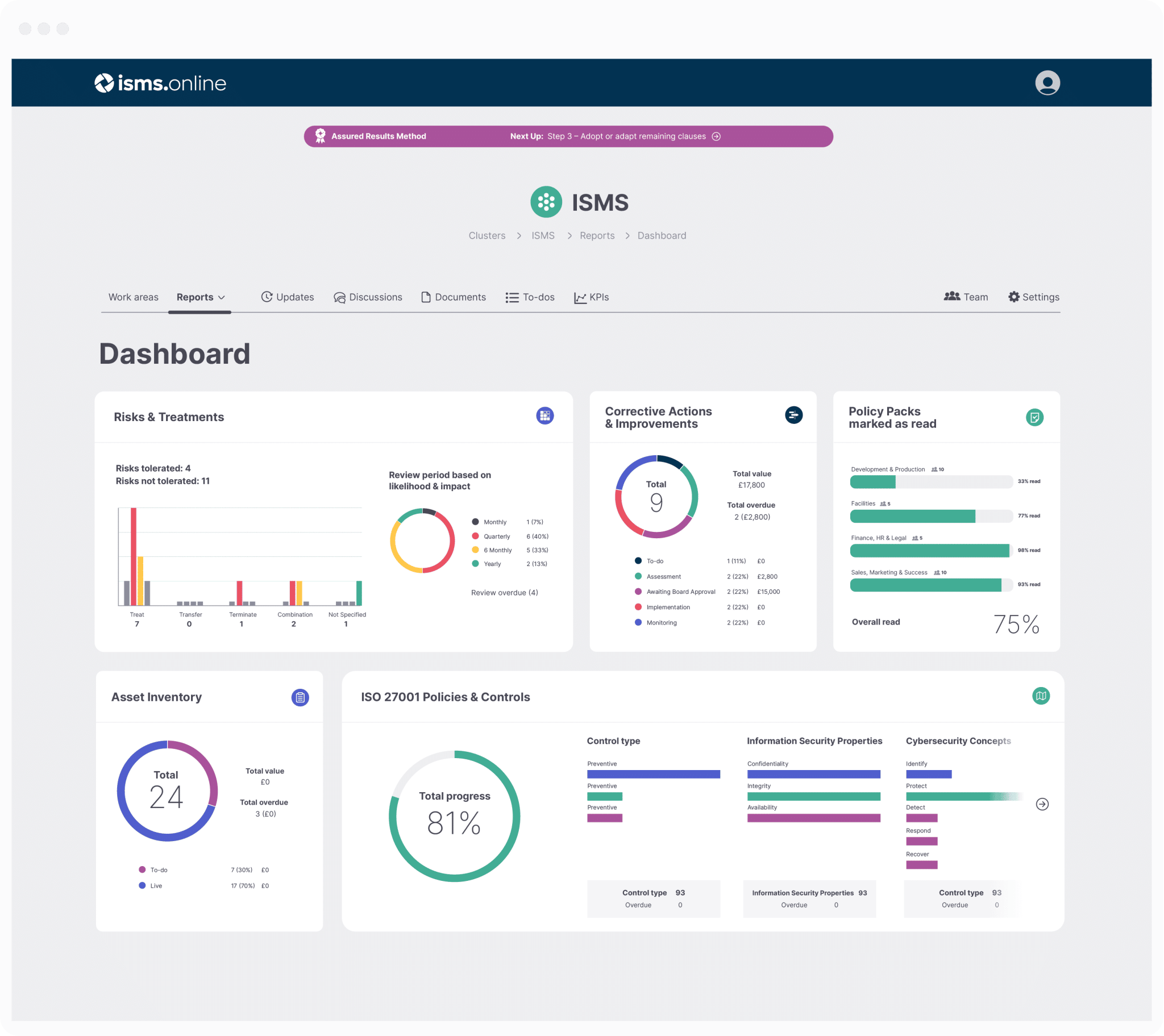

ISMS.online provides a robust platform that aligns with ISO 42001, including Annex D, to facilitate the implementation of AI management systems. It offers a suite of tools for documentation (B.6.2.7), risk assessment, and compliance tracking, enabling organisations to establish and maintain a comprehensive AI management system. By leveraging ISMS.online, entities can ensure that their AI systems are not only compliant with ISO 42001 but also optimised for performance and aligned with industry best practices. The platform supports the quality of data for AI systems (B.7.4), system documentation and information for users (B.8.2), intended use of the AI system (B.9.4), and customer relations (B.10.4).

Applicability of Annex D Across Various Sectors

Addressing Sector-Specific AI Management Challenges

Annex D recognises the unique requirements of different industries and provides a flexible framework to cater to these variations. For instance, in healthcare, the emphasis on data privacy and diagnostic accuracy aligns with Requirement 7.5 and A.7.4. In finance, the focus on security and transparent decision-making is supported by A.6.2.3 and A.9.2. In each case, the controls adapt to the sector while still guiding responsible AI development and use.

Examples of Broad Applicability

In the healthcare sector, AI’s application to patient data analysis necessitates stringent controls for privacy and data integrity, as outlined in A.7.3 and A.7.5. Similarly, in the defence sector, AI systems must be robust and secure against adversarial attacks, drawing on the verification and validation (A.6.2.4) and data preparation (A.7.6) controls to protect the systems’ integrity and security throughout their lifecycle.

Ensuring Responsible AI System Development and Use

By implementing Annex D’s guidelines, organisations can ensure their AI systems are accountable, fair, and maintainable, as emphasised in C.2.1, C.2.5, and C.2.6. The annex promotes a risk-based approach, addressing environmental complexity, transparency challenges, and lifecycle management issues, in line with B.5.2 and B.6.2.6. It also underscores the importance of bias mitigation and privacy protection, ensuring AI systems are not only efficient but also ethically sound and trustworthy, as per C.2.5, C.2.7, and C.2.11.

Integration with Other Management System Standards

Complementing Existing Standards

Annex D of ISO 42001, designed to be interoperable with existing management system standards, complements standards such as ISO/IEC 27001 and ISO/IEC 27701. This integration aligns AI management practices with established protocols for information security and privacy management, which is crucial for organisations prioritising the security and privacy of AI systems. It provides a unified approach to managing these critical aspects, as outlined in D.2.

Benefits of Integration

Integrating Annex D with ISO/IEC 27001 and ISO/IEC 27701 offers several benefits:

- Strengthened Security and Privacy: Following Annex D alongside ISO/IEC 27001, organisations can ensure that AI systems are secure against potential breaches and misuse, as emphasised in C.2.10. Similarly, ISO/IEC 27701’s privacy guidelines help manage personal data within AI systems, aligning with Annex D’s emphasis on data protection, which is critical as per C.2.7.

- Enhanced Quality Management: Applying Annex D in conjunction with ISO 9001 promotes quality management within AI systems, ensuring that AI services and products meet customer and regulatory requirements, supporting the objectives in C.2.6.

ISMS.online’s Support for Integration

ISMS.online provides a robust platform that supports the integration of Annex D with these standards, offering:

- Documented Information Control: Supporting the documented information requirements of Requirement 7.5, ISMS.online helps manage documented information as required by ISO/IEC 27001 and ISO/IEC 27701, and aligns with B.7.5 for data provenance.

- Risk Management Processes: Aligning with Annex D’s risk-based approach, the platform offers customisable risk management processes essential for ISO/IEC 27001 and ISO/IEC 27701 compliance, addressing the risk sources related to machine learning as per C.3.4.

- Continuous Improvement: The platform’s features facilitate the PDCA cycle, a core component of ISO 9001, promoting continual improvement within AI management systems, in line with the objectives of C.2.11 for transparency and explainability.

ISMS.online’s capabilities in documented information control, risk management processes, and continuous improvement demonstrate its alignment with the requirements and controls of ISO 42001, specifically A.2.2 for AI policy and A.8 for information for interested parties. The platform’s support for integrating Annex D with ISO/IEC 27001, ISO/IEC 27701, and ISO 9001 ensures that organisations can manage their AI systems effectively, addressing the objectives and risk sources outlined in Annex C and integrating with other management systems as per Annex D.

Benefits of Adopting ISO 42001 Annex D for AI Management

Enhanced Risk Management

Annex D significantly enhances risk management by systematically identifying, assessing, and mitigating AI-specific risks, such as automation biases and machine learning vulnerabilities. This proactive approach is in line with Annex A controls, which emphasise risk-based thinking and due diligence. Requirement 6.1 underscores the importance of addressing risks and opportunities within the AI management system. The A.6.2.4 control ensures AI systems undergo thorough verification and validation, while C.3.4 highlights the need to consider machine learning-specific risks, such as data quality issues and model vulnerabilities.
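The identify, assess, and treat loop described above is often kept as a simple risk register. The sketch below is illustrative only: ISO 42001 does not prescribe a scoring method, and the 1 to 5 likelihood and impact scales, the multiplicative score, and the acceptance threshold of 8 are assumptions chosen for the example. The `source` field just records which Annex C risk source (for example C.3.4) a risk relates to.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Risk:
    """One entry in a hypothetical AI risk register."""
    description: str
    source: str      # related Annex C risk source, e.g. "C.3.4"
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1-5 scale.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Simple likelihood x impact product; other scoring schemes are equally valid.
        return self.likelihood * self.impact


def needs_treatment(risks: Iterable[Risk], acceptance_threshold: int = 8) -> list[Risk]:
    """Return risks scoring above the acceptance threshold, highest score first."""
    above = [risk for risk in risks if risk.score > acceptance_threshold]
    return sorted(above, key=lambda risk: risk.score, reverse=True)


# Illustrative entries only.
register = [
    Risk("Model performance degrades after deployment", "C.3.6", 3, 3),
    Risk("Training data under-represents some user groups", "C.3.4", 4, 4),
    Risk("Accelerator hardware failure interrupts service", "C.3.5", 2, 2),
]

for risk in needs_treatment(register):
    print(f"{risk.score:>2}  {risk.source}  {risk.description}")
```

Risks above the threshold would then move into risk treatment; the threshold here merely stands in for whatever risk acceptance criteria the organisation has actually defined.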

Compliance with Legal and Regulatory Requirements

Adherence to Annex D’s guidelines ensures AI systems comply with current legal and regulatory standards, a critical factor in heavily regulated sectors like healthcare and finance. This compliance provides a clear pathway to meet and exceed statutory obligations. Requirement 4.1 involves considering legal and regulatory requirements as part of the organisation’s context. A.8.5 ensures organisations provide necessary AI system information to comply with reporting obligations, and B.8.5 offers implementation guidance for reporting AI system information to comply with legal and regulatory requirements.

Building Customer Confidence

Incorporating the principles of Annex D into AI management systems can significantly boost customer confidence. Transparency, accountability, and a commitment to ethical AI use are increasingly important to customers. Annex D’s framework is designed to promote these values, fostering trust and loyalty among users. Requirement 5.2 establishes the need for an AI policy that includes a commitment to meet applicable requirements and to continual improvement. A.8.2 relates to providing necessary information to users, enhancing transparency and trust. C.2.11 outlines the importance of transparency and explainability as organisational objectives for AI systems. D.2 discusses the integration of the AI management system with other management system standards, emphasising the importance of customer trust and sector-specific compliance.

Relationship Between Annex D and Other Annexes in ISO 42001

Aligning with Control Objectives in Annex A

Annex D of ISO 42001, through its sector-specific applications, extends the general controls into a detailed framework tailored for AI systems, ensuring that the objectives of responsible AI use, such as accountability (C.2.1) and transparency (C.2.11), are met. For example, the control on organisational roles and responsibilities (A.3.2) is given specific context in Annex D, detailing how these roles should be adapted for AI governance, in alignment with the integration of the AI management system with other management system standards (D.2).

Complementing Implementation Guidance in Annex B

The sector-specific insights offered by Annex D complement the guidance provided in Annex B, ensuring that the implementation of controls is not only in line with ISO 42001’s general principles but also attuned to the unique challenges presented by different industries using AI. This includes aligning AI roles and responsibilities (B.3.2) with sector-specific needs, as well as integrating the AI management system with other management system standards (D.2).

Enhancing Effectiveness with Information in Annex C

Incorporating the risk sources and objectives identified in Annex C, Annex D enhances the effectiveness of ISO 42001 by providing a practical approach to managing these risks and achieving these objectives. This strengthens the AI management system’s overall robustness and resilience, ensuring that organisations can apply a consistent and thorough approach to AI management across various sectors, enhancing the value and effectiveness of their AI management systems. This approach is associated with the accountability (C.2.1), transparency and explainability (C.2.11), and risk sources (C.3) outlined in Annex C, and the integration of the AI management system with other management system standards (D.2).

Implementing AI Management Systems Across Domains and Sectors

Organisations embarking on the implementation of ISO 42001 Annex D must adopt a structured approach that is customised to the unique challenges and needs of their specific sectors. Understanding the domain-specific requirements and the impact of AI systems on operations and stakeholders is essential.

Steps for Effective Implementation

To effectively implement Annex D, organisations are encouraged to:

- Conduct a Gap Analysis: Evaluate current AI management practices against the requirements of Annex D to pinpoint areas for enhancement, ensuring alignment with Requirement 4.1 and Requirement 6.1, and drawing on the D.2 guidance for a comprehensive approach.

- Develop an Implementation Plan: Craft a detailed strategy that addresses the gaps identified, aligns with sector-specific demands, and applies the relevant Annex A controls, in line with Requirement 6 for planning and incorporating controls such as A.6.2.2 and A.6.2.3.

- Engage Stakeholders: Involve all relevant parties in the implementation process and clarify their roles and responsibilities, as set out in Requirement 5.3 and Annex A control A.3.2, to ensure a collaborative approach.
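A gap analysis is often recorded as a simple register of clause and control references with their current status. The sketch below assumes a hypothetical three-level status scale and record format (neither is defined by ISO 42001). It lists everything not yet fully implemented, least mature first, and flags references with no assigned owner as input to the stakeholder step.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    """Hypothetical maturity scale for a self-assessment."""
    NOT_STARTED = 0
    PARTIAL = 1
    IMPLEMENTED = 2


@dataclass(frozen=True)
class AssessmentItem:
    ref: str                     # clause or control reference, e.g. "6.1" or "A.3.2"
    title: str
    status: Status
    owner: Optional[str] = None  # responsible role, if one has been assigned


def find_gaps(items: list[AssessmentItem]) -> list[AssessmentItem]:
    """Items not yet fully implemented, least mature first (ties keep input order)."""
    gaps = [item for item in items if item.status is not Status.IMPLEMENTED]
    return sorted(gaps, key=lambda item: item.status.value)


def unowned(items: list[AssessmentItem]) -> list[str]:
    """References that still need a responsible owner."""
    return [item.ref for item in items if item.owner is None]


def example_register() -> list[AssessmentItem]:
    # Illustrative entries only; owner names are hypothetical roles.
    return [
        AssessmentItem("4.1", "Understanding the organisation and its context", Status.IMPLEMENTED, "Compliance lead"),
        AssessmentItem("6.1", "Actions to address risks and opportunities", Status.PARTIAL, "Risk lead"),
        AssessmentItem("A.3.2", "AI roles and responsibilities", Status.NOT_STARTED),
        AssessmentItem("A.6.2.2", "AI system requirements and specification", Status.PARTIAL),
    ]


if __name__ == "__main__":
    register = example_register()
    for gap in find_gaps(register):
        print(f"{gap.ref:<8} {gap.status.name:<12} {gap.title}")
    print("Needs an owner:", unowned(register))
```

Keeping the register as plain data makes it easy to re-run the same comparison after each implementation step and track how the gap list shrinks.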

Adapting to Sector-Specific Requirements

The application of Annex D is significantly influenced by sector-specific requirements. For example, in the healthcare sector, the protection of patient data is critical, necessitating strict adherence to controls for data integrity and privacy, as guided by Annex D.1 and B.7.4.

Challenges in Diverse Organisational Contexts

Tailoring Annex D to the unique context of an organisation presents challenges. Balancing the standard’s universal principles with operational realities is key to managing AI systems responsibly while fulfilling sector-specific requirements, as acknowledged in Annex D.1 and considering system life cycle issues as a potential risk source in C.3.6.

How ISMS.online Helps

ISMS.online aids in customising the implementation of Annex D by offering:

- Tailored Documentation: Assisting in the development of policies and procedures that cater to the distinct needs of various sectors, aligning with guidance from B.2.2 and B.6.2.7 for creating AI policies and technical documentation.

- Risk Management Tools: Providing adaptable tools for risk assessment and treatment that align with the risk-based approach of Annex A, supported by A.6.1.2 for responsible development objectives and B.7.2 for data management guidance.

- Continuous Improvement Mechanisms: Facilitating the PDCA cycle to enable organisations to evolve and enhance their AI management systems over time, in accordance with Requirement 10.1 and supported by C.2.10, focusing on security as an organisational objective.

Key Objectives and Controls Defined in Annex D

Annex D of ISO 42001 frames a comprehensive set of objectives for AI management, aiming to ensure that AI systems are used responsibly and ethically. It draws on the potential objectives listed in Annex C and the 38 controls set out in Annex A, each detailed to guide organisations in enhancing the trustworthiness of their AI applications.

Objectives for Responsible AI Use

The objectives that Annex D draws on, listed in Annex C, focus on critical areas such as accountability, AI expertise, availability and quality of training and test data, environmental impact, fairness, maintainability, privacy, robustness, safety, security, and transparency and explainability. These objectives are aligned with the controls specified in Annex A, ensuring a cohesive and standardised approach to AI management.

Associated with:

- C.2.1 – Accountability

- C.2.2 – AI expertise

- C.2.3 – Availability and quality of training and test data

- C.2.4 – Environmental impact

- C.2.5 – Fairness

- C.2.6 – Maintainability

- C.2.7 – Privacy

- C.2.8 – Robustness

- C.2.9 – Safety

- C.2.10 – Security

- C.2.11 – Transparency and explainability

Detailed Controls for AI Management

Annex B provides implementation guidance for each of the 38 Annex A controls, and Annex D helps organisations apply them in their own sector. The controls cover a broad spectrum of AI management aspects, including but not limited to:

- Responsible Development: Setting objectives for the responsible development of AI systems (A.6.1.2).

- AI System Justification: Specifying and documenting requirements, including criteria for usage and performance metrics (A.6.2.2).

- Data Management: Ensuring transparency and quality of training data (A.7.4).

Associated with:

- A.6.1.2 – Objectives for responsible development of AI system

- A.6.2.2 – AI system requirements and specification

- A.7.4 – Quality of data for AI systems

- B.6.1.2 – Objectives for responsible development of AI system (Implementation guidance)

- B.6.2.2 – AI system requirements and specification (Implementation guidance)

- B.7.4 – Quality of data for AI systems (Implementation guidance)

Supporting Responsible AI Use

These controls support responsible AI use by addressing the entire AI lifecycle, from conception to deployment, and by emphasising continuous monitoring. They guide organisations in establishing policies, setting procedures, conducting risk assessments, applying risk treatments, and maintaining documentation, all of which are essential for managing AI systems effectively and ethically.

Associated with:

- Requirement 6 – Planning

- Requirement 8 – Operation

- Requirement 9 – Performance evaluation

- Requirement 10 – Improvement

- A.6 – AI system life cycle

- B.6 – AI system life cycle (Implementation guidance)

Risk Identification and Mitigation Strategies in Annex D

Annex D of ISO 42001 equips organisations with a systematic approach to managing AI-specific risks, advocating for the Plan-Do-Check-Act (PDCA) cycle and risk-based thinking integral to Requirement 6. This approach ensures that risks stemming from environmental complexity, automation biases, and machine learning vulnerabilities are addressed comprehensively.

Addressing Automation Biases and Hardware Vulnerabilities

To mitigate risks such as automation biases, Annex D underscores the importance of implementing controls for diversity in training data and regular review of decision-making processes. These measures are supported by A.7.4, which calls for the quality of data for AI systems, and B.7.4, offering implementation guidance to ensure data integrity and mitigate biases.

For hardware vulnerabilities, robust security protocols and regular system audits are advised, aligning with A.10.3’s emphasis on supplier management. B.10.3 provides further guidance on establishing these security protocols and conducting system audits, ensuring that hardware vulnerabilities are addressed effectively.

Lifecycle Management and Technology Readiness

Annex D promotes lifecycle management by advocating for continuous monitoring and iterative improvement of AI systems, aligning with A.6.2.6 on AI system operation and monitoring. B.6.2.6 offers implementation guidance to help organisations apply these controls effectively, ensuring AI systems remain effective and secure throughout their operational life.

In addressing technology readiness, Annex D guides organisations to assess the maturity and limitations of AI technologies before full-scale implementation. This proactive stance is supported by C.3.7, which lists technology readiness as a potential risk source. Annex B provides implementation guidance to assist organisations in conducting these technology assessments, ensuring they are well-prepared for potential challenges in deploying AI systems.

Sector-Specific Standards and Third-Party Conformity Assessment

ISO 42001 Annex D is engineered to facilitate the application of sector-specific standards, ensuring that AI management systems are adaptable to the unique requirements of various industries, a crucial aspect for sectors such as healthcare, finance, and defence, where AI applications are subject to stringent regulatory standards and ethical considerations (D.1).

Importance of Third-Party Conformity Assessment

Third-party conformity assessment, a pivotal component of Annex D, provides an objective evaluation of whether AI management systems meet the international standards set forth in ISO 42001 (D.2). This assessment is vital for maintaining transparency and trust in AI systems, particularly in sectors where the consequences of AI failure can be significant.

Addressing Industry-Specific Needs

In the healthcare sector, Annex D emphasises the protection of sensitive patient data, aligning with Annex A controls on data quality and integrity (A.7.4). For the finance sector, it underscores the need for robust AI systems that can withstand malicious cyber activities, resonating with the security objective in Annex C (C.2.10). In defence, the standard stresses the importance of AI systems’ reliability and safety, crucial for national security applications (C.2.9).

Ensuring Compliance Through ISMS.online

Organisations can leverage ISMS.online to ensure compliance with sector-specific standards. The platform’s comprehensive features align closely with ISO 42001’s requirements, offering tools for risk assessment (Requirement 6.1), policy development (Requirement 5.2), and continuous improvement (Requirement 10.1). By utilising ISMS.online, organisations can effectively manage their AI-related risks and opportunities, ensuring their AI systems are not only compliant but also optimised for performance and trustworthiness.

Bias Mitigation and AI System Impact Assessment

Mitigating Bias within AI Systems

Annex D emphasises the integration of AI management systems with sector-specific standards to ensure responsible AI practices. To mitigate bias, organisations should conduct a thorough AI risk assessment (Requirement 6.1.2) and define AI risk treatment processes (Requirement 6.1.3). Diverse training datasets and regular reviews of decision-making algorithms are crucial to preventing discriminatory outcomes. This is supported by the fairness objective (C.2.5) and the guidance on data quality (B.7.4), as well as by regular algorithm reviews within the AI system impact assessment process (B.5.2).

Ensuring Fair and Equitable Operations

Organisations must ensure that AI systems operate fairly and equitably, aligning with societal values and ethical norms. This involves incorporating robust privacy protections to maintain user trust and comply with regulations, as highlighted in the privacy (C.2.7) and transparency and explainability (C.2.11) objectives. Documenting the provenance of data used in AI systems is crucial for privacy and ethical considerations (B.7.5).

Continuous Monitoring for Bias Detection

Continuous monitoring is essential for detecting biased outcomes and taking corrective actions as necessary. This involves regularly reviewing the impact of AI systems to ensure they remain beneficial and non-harmful over time. Continuous monitoring is associated with defining the necessary elements for ongoing operation and monitoring of AI systems (A.6.2.6), determining phases in the AI system life cycle where event logging should be enabled for monitoring purposes (B.6.2.8), and ensuring accountability in AI systems through continuous monitoring (C.2.1). Regularly reviewing the impact of AI systems is part of the AI system impact assessment process (A.5.2).
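In practice, monitoring for bias usually means computing a fairness metric over a recent window of decisions and escalating when it drifts. ISO 42001 does not mandate a particular metric. The sketch below uses demographic parity difference (the gap between the highest and lowest positive-outcome rates across groups) as one common choice; the `(group, outcome)` log format and the 0.1 review threshold are assumptions for illustration.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Tuple

# (group label, positive outcome?) as read from a hypothetical decision log
Decision = Tuple[str, bool]


def selection_rates(decisions: Iterable[Decision]) -> dict[str, float]:
    """Positive-outcome rate for each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, positive in decisions:
        totals[group] += 1
        if positive:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions: Iterable[Decision]) -> float:
    """Largest gap in positive-outcome rates between any two groups."""
    rates = selection_rates(decisions)
    if not rates:
        raise ValueError("no decisions in this monitoring window")
    return max(rates.values()) - min(rates.values())


def needs_review(decisions: Iterable[Decision], threshold: float = 0.1) -> bool:
    """Flag the window for human review when the gap exceeds the assumed threshold."""
    return demographic_parity_difference(decisions) > threshold


# One illustrative monitoring window: group "a" approved 8 of 10, group "b" 5 of 10.
window = [("a", True)] * 8 + [("a", False)] * 2 + [("b", True)] * 5 + [("b", False)] * 5
print(round(demographic_parity_difference(window), 2), needs_review(window))
```

A flagged window would feed the corrective action and impact review described above. Depending on the use case, another metric (for example equalised odds) may be more appropriate than demographic parity.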

By adhering to these guidelines and aligning with Annex A controls that focus on accountability, transparency, and ethical governance, organisations can foster responsible AI development and use.

AI Cybersecurity and Data Management Considerations

Addressing cybersecurity concerns, Annex D provides a framework for safeguarding AI systems against a range of cyber threats, emphasising robust security measures throughout the AI system lifecycle, from design to deployment and beyond.

Ensuring Secure AI Data Management

For secure data management, Annex D advises on implementing controls for data integrity and confidentiality, which are critical for maintaining the trustworthiness of AI systems, especially when handling sensitive or personal data.

- Requirement 7.5 ensures the availability and suitability of documented information for secure data management.

- A.7.4 addresses the need for defining and documenting requirements for data quality to maintain data integrity and confidentiality.

- B.7.4 provides implementation guidance for ensuring data quality in AI systems.

- C.2.7 highlights privacy as a potential AI-related organisational objective when managing data.
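A.7.4 asks organisations to define and document data quality requirements. As a narrow illustration, the sketch below checks a single quality dimension, completeness: each requirement states the maximum share of records allowed to lack a field. The requirement format and field names are hypothetical, and a real data quality specification would usually cover further dimensions such as accuracy, timeliness, and representativeness.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Iterable, Mapping, Sequence


@dataclass(frozen=True)
class CompletenessRequirement:
    """A documented completeness rule for one field (hypothetical format)."""
    field: str
    max_missing_ratio: float  # 0.05 means at most 5% of records may lack the field


def missing_ratio(records: Sequence[Mapping[str, Any]], field: str) -> float:
    """Share of records where the field is absent, None, or an empty string."""
    if not records:
        raise ValueError("cannot assess an empty dataset")
    missing = sum(1 for record in records if record.get(field) in (None, ""))
    return missing / len(records)


def check_completeness(
    records: Sequence[Mapping[str, Any]],
    requirements: Iterable[CompletenessRequirement],
) -> dict[str, float]:
    """Map each failing field to its observed missing ratio; an empty dict means all rules pass."""
    failures: dict[str, float] = {}
    for req in requirements:
        ratio = missing_ratio(records, req.field)
        if ratio > req.max_missing_ratio:
            failures[req.field] = ratio
    return failures


# Illustrative records and requirements only.
records = [
    {"age": 34, "label": "approved"},
    {"age": None, "label": "declined"},
    {"age": 51, "label": "approved"},
    {"age": 29, "label": "declined"},
]
requirements = [
    CompletenessRequirement("age", max_missing_ratio=0.10),
    CompletenessRequirement("label", max_missing_ratio=0.0),
]
print(check_completeness(records, requirements))
```

Because the rules are data rather than code, the same documented requirements can be versioned alongside the dataset and re-checked whenever the data changes.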

Transparency and Explainability in AI Systems

Annex D also stresses the need for transparency and explainability in AI operations, guiding organisations to document AI decision-making processes and make these processes understandable to stakeholders, aligning with Annex A’s emphasis on clear and accessible information.

- A.8.2 relates to providing necessary information to users for transparency.

- B.8.2 offers implementation guidance for creating and maintaining user documentation for AI systems.

- C.2.11 identifies transparency and explainability as key organisational objectives for AI systems.

ISMS.online’s Role in Supporting Annex D Requirements

ISMS.online supports these cybersecurity and data management requirements by offering:

- Centralised Document Management: A secure platform for storing and managing critical AI system documentation, supporting the documented information requirements of Requirement 7.5.

- Customisable Risk Management Processes: Tools to assess and treat AI-related risks, in line with the risk assessment and treatment requirements of Requirement 6.1 and the related Annex A controls.

- Integrated Audit and Review Capabilities: Features that facilitate the regular review of AI systems for security and data management efficacy, as recommended by Annex D.

By leveraging these features, organisations can enhance the security and integrity of their AI systems, ensuring they meet the high standards set by ISO 42001 Annex D.

- A.7.5 supports the documentation of data provenance, essential for ISMS.online’s centralised document management feature.

- B.7.5 provides implementation guidance for recording data provenance, aligning with ISMS.online’s document management capabilities.

- A.6.2.8 aligns with ISMS.online’s integrated audit and review capabilities, ensuring event logs are recorded and managed.

- D.2 demonstrates ISMS.online’s ability to integrate AI management systems with other standards, enhancing cybersecurity and data management.

How ISMS.online Helps With ISO 42001 Annex D Implementation

Initiating the Implementation Process

To effectively manage AI systems in accordance with Annex D.1, organisations should:

- Conduct an Initial Assessment: Evaluate current AI management practices against the standard’s requirements, considering the organisation’s context (Requirement 4.1), the needs and expectations of interested parties (Requirement 4.2), and the scope of the AI management system (Requirement 4.3). This assessment should also take into account the AI policy (B.2.2) and system life cycle issues as a risk source (C.3.6).

- Develop a Structured Plan: Create a roadmap that incorporates the necessary changes and aligns with the organisation’s specific context. This plan should be informed by the organisation’s AI objectives (Requirement 6.2) and should integrate processes for responsible AI system design and development (B.6.1.3). Additionally, the plan should consider the integration of the AI management system with other management system standards (D.2).

The Imperative of Continuous Improvement

To ensure AI management systems evolve and adapt to new challenges and technologies, organisations should:

- Regular Monitoring: Continuously assess the performance of AI systems against the objectives set out in Annex D, in line with the standard’s requirements for monitoring, measurement, analysis, and evaluation (Requirement 9.1). This includes AI system operation and monitoring (B.6.2.6).

- Iterative Updates: Implement incremental changes to enhance system performance and compliance, ensuring continual improvement of the AI management system (Requirement 10.1). This should involve updating AI system technical documentation as necessary (B.6.2.7).

Leveraging ISMS.online for Compliance and Management

ISMS.online can facilitate the journey towards effective AI management and compliance by providing:

- Integrated Tools: A suite of tools for risk assessment, policy development, and control implementation, supporting the documented information requirements of the AI management system (Requirement 7.5). These tools can address controls related to data for AI systems (A.7) and provide guidance for data development and enhancement (B.7.2).

- Centralised Platform: A centralised platform for documentation and processes, facilitating easier management and oversight. This aligns with the requirements for an AI management system (Requirement 4.4) and supports the provision of information to interested parties of AI systems (A.8), including system documentation and information for users (B.8.2).

By integrating these steps and leveraging platforms like ISMS.online, organisations can manage their AI systems in a manner that is not only compliant with ISO 42001 Annex D but also optimised for performance and ethical considerations, across various domains or sectors as outlined in Annex D.1.