Understanding ISO 42001 and Its Importance for AI Management

ISO 42001 is a pioneering standard designed to guide organisations in establishing, implementing, maintaining, and improving an AI management system. It provides a structured framework for the challenges specific to AI technologies, including ethical considerations, data quality, and risk management, so that AI systems are developed and used responsibly, with a clear focus on societal and individual impacts (Requirements 4.1, 4.4, 6.1, 5.6, 10.1).

Complementing Existing Standards

ISO 42001 complements existing standards like ISO 27001 by integrating AI-specific management practices with information security principles. It extends the scope of traditional information security management systems (ISMS) to encompass the complexities of AI, ensuring a holistic approach to managing both AI and information security risks (D.2, Requirement 5.2).

Addressing AI System Challenges

The standard addresses AI system challenges through its comprehensive control objectives and controls listed in Annex A. These controls are tailored to manage risks associated with AI systems, from development to deployment and operation, ensuring that AI technologies are leveraged in a secure, ethical, and effective manner (Annex A, Annex B, C.3.4).

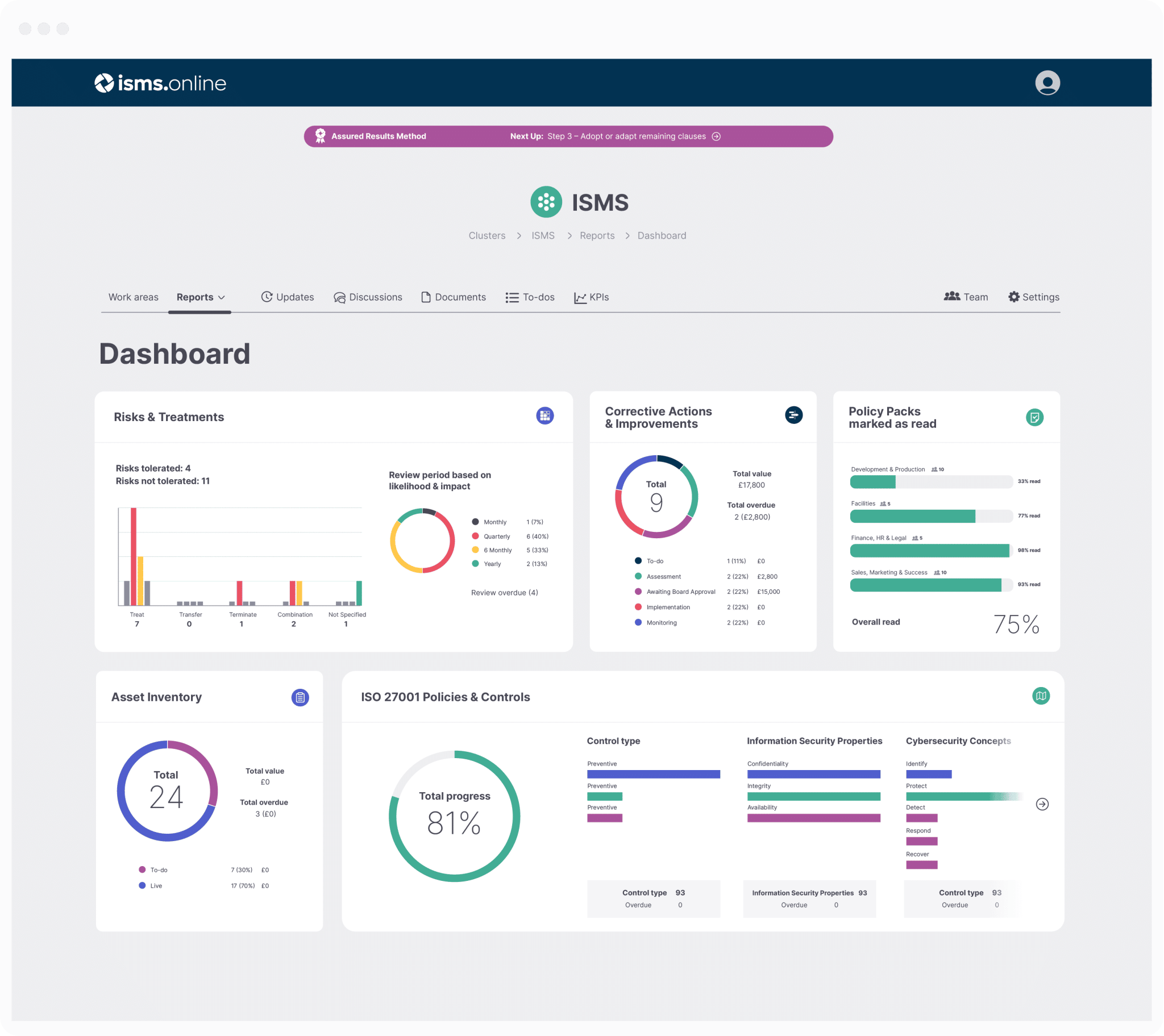

Using ISMS.online for ISO 42001 Compliance

ISMS.online provides a robust platform that aligns closely with ISO 42001 requirements, offering tools and resources to facilitate the development and maintenance of an AI management system. It aids organisations in documenting their AI management processes, conducting risk assessments, and creating a Statement of Applicability (SoA) that reflects their commitment to managing AI risks and opportunities. With ISMS.online, organisations can ensure that their AI management practices are compliant with ISO 42001 and are positioned for continuous improvement (Requirement 7.5, Requirement 8.1, Requirement 9.1, A.4.6, B.4, B.9, D.1).

The Role of Statement of Applicability in ISO 42001 Compliance

The Statement of Applicability (SoA) is a foundational document within the ISO 42001 framework, outlined in A.26, that details the controls an organisation has selected for managing risks associated with artificial intelligence (AI) systems. It acts as a declaration of the controls applicable to the organisation’s AI management system, evidencing a commitment to identifying, assessing, and treating AI-related risks as per Requirement 5.5.

Demonstrating Commitment to AI Risk Management

Through the SoA, an organisation demonstrates its dedication to AI risk management by documenting the chosen controls and justifying their relevance or exclusion, as guided by B.2.2. This pivotal document for ISO 42001 compliance provides a transparent account of how AI risks are managed, aligning with the standard’s focus on accountability and ethical considerations, as emphasised in C.2.1.

AI Objectives and Governance Framework

An effective SoA must include clearly defined AI objectives and the governance framework that guides the organisation’s approach to AI risk management, aligning with Requirement 6.2. This framework should reflect the organisation’s strategic direction, as supported by top management’s commitment under Requirement 5.1 and the internal organisation objective B.3.1.

Risk Management Strategies

The SoA should detail the risk management strategies and processes in place to address AI-specific risks, in accordance with Requirement 5.2. This includes leveraging resources for AI systems as per A.4 and data for development and enhancement of AI systems in line with B.7.2. It should also consider risk sources related to machine learning, as identified in C.3.4.

Control Selection

Justifications for the inclusion or exclusion of controls, particularly those outlined in Annex A, are a critical component of the SoA. This includes updated control categories for nuanced security management, as per Requirement 5.5, and the review of the AI policy as per A.2.4. The documentation of AI system design and development should be in accordance with B.6.2.3.

Impact Assessment

The SoA must consider the AI system’s impact on individuals and society, ensuring that ethical AI practices are integrated into the management system, as required by Requirement 5.6. This involves assessing impacts of AI systems as per A.5 and assessing AI system impact on individuals or groups of individuals as guided by B.5.4. Fairness, as an organisational objective, should be addressed as per C.2.5.

By meticulously documenting these components, the SoA becomes an essential tool for organisations to achieve compliance and maintain a robust and ethical AI management system, as supported by the general guidance in D.1 and the integration of the AI management system with other management system standards as per D.2.

Navigating the Requirements for a Comprehensive SoA

ISO 42001 establishes specific requirements for the Statement of Applicability (SoA) to ensure that organisations implement a comprehensive AI risk management and control system. The SoA must reflect a clear understanding of the AI system’s context, including its life cycle, and the associated risks and opportunities.

Ensuring Thorough AI Risk Management and Control

The SoA is required to document the following:

- The rationale for selecting or excluding certain controls from Annex A.

- How these controls are implemented within the AI management system, as guided by Annex B.

- The effectiveness of these controls in mitigating AI-related risks, in line with Requirement 5.5.

Organisations must ensure that the SoA is developed under the leadership and commitment of top management, as stipulated in Requirement 5.1, to align with the strategic direction of the organisation.
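The documentation items listed above can be pictured as a simple record per control. The following is a minimal sketch, not a format prescribed by ISO 42001: the `SoAEntry` fields, the `find_incomplete` helper, and the sample justifications are all hypothetical and exist only to illustrate the idea that every control needs a rationale and every included control needs an implementation note.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SoAEntry:
    """One row of a Statement of Applicability (hypothetical structure)."""
    control_id: str           # Annex A control reference, e.g. "A.2.2"
    applicable: bool          # True if the control is included
    justification: str        # rationale for inclusion or exclusion
    implementation: str = ""  # how the control is implemented (Annex B guidance)


def find_incomplete(entries: List[SoAEntry]) -> List[str]:
    """Return control IDs whose entry is missing required documentation."""
    incomplete = []
    for entry in entries:
        missing_rationale = not entry.justification.strip()
        # An included control should also describe how it is implemented.
        missing_impl = entry.applicable and not entry.implementation.strip()
        if missing_rationale or missing_impl:
            incomplete.append(entry.control_id)
    return incomplete


# Illustrative data only; the justifications are invented for this sketch.
soa = [
    SoAEntry("A.2.2", True, "AI policy is required for governance",
             "Policy document approved by top management"),
    SoAEntry("A.5.2", True, "Impact assessment needed for customer-facing AI"),
    SoAEntry("A.7.4", False, ""),
]

print(find_incomplete(soa))  # ['A.5.2', 'A.7.4']
```

Here A.5.2 is flagged because it is included without an implementation note, and A.7.4 is flagged because its exclusion has no recorded rationale.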

Addressing Challenges in Meeting SoA Requirements

Organisations may encounter challenges such as:

- Aligning the SoA with the dynamic nature of AI technologies and risks, which requires consideration of the organisation’s context and the requirements of interested parties as per Requirement 5.2.

- Ensuring that the SoA remains relevant and up-to-date with evolving regulatory landscapes, reflecting the potential organisational objectives and risk sources outlined in Annex C.

ISMS.online for Streamlined SoA Compliance

ISMS.online can be instrumental in addressing these challenges by providing:

- A centralised platform for documenting and managing the SoA, aligning with the establishment and documentation of an AI management system as required by Requirement 4.4.

- Tools for continuous monitoring and updating of the SoA to reflect changes in the AI system’s context or risk profile, ensuring the SoA is a living document that is regularly reviewed and updated as per Requirement 10.1.

- Features that facilitate the integration of AI management system controls with broader organisational processes, as suggested in Annex D for using the AI management system across various domains or sectors.

By utilising ISMS.online, organisations can maintain an SoA that is not only compliant with ISO 42001 but also adaptable to the fast-paced evolution of AI technologies and practices. The platform’s capabilities in competency and training (Requirement 7.2), communication and awareness (Requirement 7.3 and Requirement 7.4), and performance evaluation (Requirement 9.1) support the effective implementation and continual improvement of the AI management system.

Linking AI Risk Assessment and Treatment to the SoA

Informing SoA Development Through AI Risk Assessment

The AI risk assessment process is instrumental in shaping the Statement of Applicability (SoA), as it identifies potential threats and vulnerabilities inherent in AI systems (Requirement 5.3). This critical evaluation informs which Annex A controls are pertinent and necessary for risk mitigation (A.5.2). By conducting a comprehensive analysis of AI-specific risks, the SoA is crafted to accurately mirror the organisation’s risk landscape and the strategic measures implemented to manage these risks (B.5.3).

Shaping the SoA with AI Risk Treatment

Selecting suitable risk responses and controls to mitigate AI risks to an acceptable level is the essence of AI risk treatment (Requirement 5.5). These selected treatments are integral to the SoA’s content, as they must be documented with a rationale for their implementation. This critical documentation underscores the organisation’s proactive stance in managing AI risks and aligns with the processes for responsible AI system design and development (A.5.5).

Documenting the Link Between Risk Assessment, Treatment, and the SoA

The documentation connecting risk assessment, treatment, and the SoA serves multiple essential functions:

- It establishes a transparent traceability path from risk identification to the implementation of controls.

- It ensures that all decisions are substantiated by a thorough understanding of the AI system’s risk profile.

- It underpins accountability and fosters continuous improvement within the AI management system (Requirement 5.6).

This documentation is also crucial for recording the provenance of data used in AI systems throughout their life cycles (B.7.5).
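One way to make this traceability concrete is to cross-check the risk register against the SoA. The sketch below is illustrative only: the risk identifiers, the `risk_treatments` mapping, and the three helper functions are assumptions introduced here, not structures defined by ISO 42001 or ISMS.online.

```python
from typing import Dict, List, Set

# Hypothetical risk register: risk ID -> control IDs chosen to treat it.
risk_treatments: Dict[str, List[str]] = {
    "R1-biased-training-data": ["A.7.4"],
    "R2-unvalidated-model-release": ["A.6.2.4"],
    "R3-unassessed-societal-impact": [],
}

# Hypothetical set of controls marked as applicable in the SoA.
soa_applicable: Set[str] = {"A.7.4", "A.6.2.4", "A.2.2"}


def _controls_in_use(treatments: Dict[str, List[str]]) -> Set[str]:
    """Collect every control referenced by at least one risk treatment."""
    return {c for controls in treatments.values() for c in controls}


def untreated_risks(treatments: Dict[str, List[str]]) -> List[str]:
    """Risks that have been identified but have no control assigned."""
    return sorted(r for r, controls in treatments.items() if not controls)


def controls_missing_from_soa(treatments: Dict[str, List[str]],
                              applicable: Set[str]) -> List[str]:
    """Controls used in risk treatment that the SoA does not list as applicable."""
    return sorted(_controls_in_use(treatments) - applicable)


def controls_without_risk(treatments: Dict[str, List[str]],
                          applicable: Set[str]) -> List[str]:
    """Applicable controls that no identified risk traces back to."""
    return sorted(applicable - _controls_in_use(treatments))


print(untreated_risks(risk_treatments))                            # ['R3-unassessed-societal-impact']
print(controls_missing_from_soa(risk_treatments, soa_applicable))  # []
print(controls_without_risk(risk_treatments, soa_applicable))      # ['A.2.2']
```

A control with no linked risk is not necessarily wrong (it may be included for legal or contractual reasons), but the gap is a prompt to record that rationale explicitly.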

Prioritising AI Management Controls in the SoA

The SoA is a strategic instrument that prioritises AI management controls based on the outcomes of the risk assessment. Controls that address the most significant risks are prioritised, ensuring that resources are allocated effectively to the areas of highest impact. This prioritisation is vital for upholding the integrity and resilience of AI systems against evolving threats and challenges. The organisation’s commitment to producing a statement of applicability that encompasses the necessary controls is evident in this prioritisation process (Requirement 5.5).
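As a rough illustration of risk-based prioritisation, the sketch below ranks controls by the highest-scoring risk each one treats. The likelihood-times-impact score on a 1 to 5 scale is a common convention assumed here, not something ISO 42001 prescribes, and the risk IDs and mappings are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical risk assessment output: risk ID -> (likelihood, impact), each 1-5.
risks: Dict[str, Tuple[int, int]] = {"R1": (4, 5), "R2": (2, 3), "R3": (3, 4)}

# Hypothetical mapping from each control to the risks it treats.
control_risks: Dict[str, List[str]] = {
    "A.7.4": ["R1"],
    "A.6.2.4": ["R2", "R3"],
    "A.2.2": ["R2"],
}


def risk_score(likelihood: int, impact: int) -> int:
    """Assumed scoring convention: likelihood multiplied by impact."""
    return likelihood * impact


def prioritise(control_map: Dict[str, List[str]],
               risk_map: Dict[str, Tuple[int, int]]) -> List[Tuple[str, int]]:
    """Rank controls by the highest-scoring risk they address, highest first."""
    ranked = []
    for control, risk_ids in control_map.items():
        top = max(risk_score(*risk_map[r]) for r in risk_ids)
        ranked.append((control, top))
    # Sort by descending score, then by control ID for a stable order.
    return sorted(ranked, key=lambda item: (-item[1], item[0]))


print(prioritise(control_risks, risks))
# [('A.7.4', 20), ('A.6.2.4', 12), ('A.2.2', 6)]
```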

The organisation also ensures that the requirements for new AI systems or significant enhancements to existing systems are specified and documented, reflecting the security expectations that a system does not lead to a state where human life, health, property, or the environment is endangered (A.6.7; C.2.10). Furthermore, the integration of the AI management system with other management system standards is considered, particularly when the AI system is used in sectors such as food safety or medical devices (D.2).

Annex A Controls Are the Backbone of ISO 42001 SoA

Annex A of ISO 42001 outlines a comprehensive set of control objectives and controls essential for managing AI system risks. These controls are meticulously crafted to address the complex nature of AI risks, encompassing data privacy, ethical use, robustness, and accountability.

Addressing AI System Risks with Specific Controls

The controls within Annex A are strategically categorised to encompass various aspects of AI management:

- AI Policy Development: Establishing a governance framework that aligns with organisational objectives and regulatory requirements is crucial. Controls such as A.2.2 mandate the documentation of an AI policy, while A.2.3 ensures alignment with other organisational policies. Regular reviews, as stipulated in A.2.4, guarantee the AI policy’s ongoing relevance and effectiveness.

- AI Risk Assessment: Identifying and evaluating potential risks throughout the AI system lifecycle is a fundamental process, guided by A.5.3. This control ensures that the AI risk assessment process is informed by and aligned with the AI policy and AI objectives.

- AI Impact Analysis: Assessing the effects of AI systems on individuals and society is a critical consideration. Control A.5.2 establishes a process for assessing potential consequences, ensuring a comprehensive understanding of the AI system’s impact.

Importance of Control Design Flexibility

The design flexibility of controls is paramount, allowing organisations to adapt to their unique operational contexts, AI system complexities, and specific risk profiles. This adaptability, as highlighted in B.2.2, ensures that controls remain effective and relevant as AI technologies and associated threats evolve.

Tailoring Controls to Organisational Needs

Organisations can tailor these controls by:

- Conducting thorough risk assessments to determine the most pertinent threats, as emphasised by risk sources related to machine learning in C.3.4.

- Customising control implementations to address identified risks effectively, guided by B.5.5, which outlines the processes for responsible AI system design and development.

- Ensuring controls are scalable and adaptable to changes in the AI environment, a concept supported by D.2, which discusses the integration of the AI management system with other management system standards.

By leveraging the guidance provided in Annex A, organisations can develop a robust SoA that not only complies with ISO 42001 but also fortifies their AI systems against a spectrum of risks, ensuring ethical, secure, and effective AI operations. The AI management system’s applicability across various sectors, as described in D.1, underscores its versatility and broad relevance.

Implementing Controls with Guidance from Annex B

Understanding the Context for AI Management System

To effectively manage AI-related risks, organisations must first understand their specific context as outlined in Requirement 4.1. ISMS.online aids in documenting both external and internal factors that influence the AI management system, ensuring a comprehensive approach to risk management that aligns with Annex C.

Leadership and Commitment in AI Management

Leadership plays a pivotal role in the success of an AI management system. As per Requirement 5.1, top management must demonstrate commitment to the AI policy and objectives. ISMS.online facilitates this by providing a platform where top management can actively engage with and oversee the AI management system’s implementation and effectiveness.

Risk Assessment and Treatment in AI Systems

A thorough risk assessment process, as required by Requirement 5.3, is critical for identifying and analysing AI risks. ISMS.online’s risk management features align with Requirement 5.5 to ensure that risks are evaluated and treated effectively, contributing to the robustness of the AI management system.

Continual Improvement of the AI Management System

Continual improvement, as mandated by Requirement 10.1, is essential for maintaining the effectiveness of the AI management system. ISMS.online provides tools for monitoring and enhancing AI system performance, ensuring that the organisation’s AI management practices remain current and effective.

Integrating AI Management with Other Systems

ISMS.online supports the integration of the AI management system with other management systems, as suggested in Annex D. This integration is particularly beneficial for sectors like healthcare or finance, where AI systems must align with specific regulatory and quality standards.

Implementing Specific Annex A Controls

Organisations can implement specific controls from Annex A, such as A.6.2.4 for AI system verification and validation, or A.7.4 for ensuring data quality in AI systems. ISMS.online supports these controls by providing a structured framework for documentation, management, and verification.

Training and Competence for AI Management

Ensuring the competence of personnel involved in the AI management system is crucial, as stated in Requirement 7.2. ISMS.online assists organisations in identifying training needs and tracking the acquisition of necessary competencies, contributing to the overall effectiveness of the AI management system.

Role of Internal Audits in AI Management

Internal audits are an integral part of maintaining conformity to the AI management system requirements, as per Requirement 9.2. ISMS.online streamlines the planning, execution, and reporting of internal audits, ensuring that the AI management system meets both the organisation’s and the standard’s requirements.

Aligning Organisational Objectives with Risk Sources Using Annex C

Importance of Organisational Objectives in the SoA

Incorporating organisational objectives into the Statement of Applicability (SoA) is crucial for giving the AI management system a clear direction and purpose. These objectives, as outlined in Requirement 6.2, guide the selection of controls by aligning them with the organisation’s strategic vision for AI and its risk appetite, ensuring that the AI management system supports the organisation’s overall strategy.

Aligning with the AI Policy

The AI policy, as per Requirement 5.2, should reflect the organisation’s intentions and direction for AI, providing a framework for setting AI objectives. It’s essential that the AI policy includes commitments to fulfil applicable requirements and to the continual improvement of the AI management system, as stated in Requirement 6.2 and Requirement 10.1.

Informing Control Selection with Risk Sources

Risk sources identified in C.3, such as environmental complexity and technology readiness, are critical in informing the selection of controls for the SoA. Understanding these sources enables your organisation to implement controls that are not only compliant with Requirement 5.2 but also tailored to specific organisational needs and the AI system’s operational context.

Continuous Monitoring and Adaptation

To address the dynamic nature of risk sources, organisations should prioritise controls that mitigate significant risks to their AI objectives and implement continuous monitoring, as per Requirement 9.1, to adapt controls as risk sources evolve.

Strategies for Addressing Identified Risk Sources

Organisations can maintain a dynamic and responsive AI management system by:

- Prioritising controls that mitigate the most significant risks to their AI objectives, aligning with the risk treatment process in Requirement 5.5.

- Implementing continuous monitoring, as per Requirement 9.1, to adapt controls as risk sources evolve, ensuring the AI management system remains effective and relevant.

- Engaging in regular risk assessments, in line with Requirement 5.3, to ensure that the SoA remains relevant and effective, supporting the achievement of AI objectives while managing risk sources effectively.

Integration with Other Management Systems

The integration of the AI management system with other management systems, as suggested in D.2, creates a cohesive approach that enhances the organisation’s ability to manage AI-related issues effectively. This integration ensures that AI-specific considerations are effectively addressed within the context of the organisation’s broader governance, risk, and compliance frameworks.

Tailoring the SoA for Sector-Specific Requirements with Annex D

Key Considerations for Sector-Specific Tailoring

When adapting the SoA for sector-specific needs, organisations should consider the distinct operational risks and AI applications prevalent in their sector (Requirement 4.1), regulatory requirements and industry standards that impact AI system management (Requirement 4.2), and stakeholder expectations and the need for sector-specific transparency and accountability (Requirement 5.2). This approach ensures that the SoA is customised to address the unique challenges and regulatory environments of different industries, aligning with Annex D guidance.

Importance of Sector-Specific Tailoring

Customising the SoA for specific sectors ensures that AI risk management practices are relevant and effective within the industry context (Annex A), controls are aligned with sector-specific operational needs and compliance obligations (Annex A), and the organisation can demonstrate due diligence and sector-specific expertise to stakeholders (Annex C). This alignment is crucial for establishing a robust AI management system that resonates with the unique demands of the industry.

Ensuring Compliance with ISO 42001 and Sector-Specific Requirements

Organisations can ensure their SoA meets both ISO 42001 and sector-specific requirements by conducting a detailed gap analysis to identify and address industry-specific risks (Requirement 5.3), engaging with sector experts to refine control selection and implementation strategies (Requirement 7.5), and regularly reviewing and updating the SoA to keep pace with sector developments and emerging AI technologies (Requirement 9.3). This meticulous alignment with sector-specific considerations establishes a robust AI management system that complies with ISO 42001 and meets the unique demands of the industry.

Review & Update of the SoA for Continuous Improvement

Necessity of Regular SoA Reviews

Regular reviews and updates of the Statement of Applicability (SoA) are essential to ensure it accurately reflects the current state of an organisation’s AI management system. As AI technologies and associated risks evolve, the SoA must be revisited to incorporate additional controls from Annex A or adjust existing ones, maintaining the system’s relevance and effectiveness. This activity supports the management review under Requirement 9.3, which confirms the AI management system’s continuing suitability, adequacy, and effectiveness.

Triggers for SoA Updates

Updates to the SoA may be triggered by:

- Changes in the organisation’s AI systems or operational environment, necessitating a review of the relevant resources as per A.4.2.

- Evolving legal, regulatory, or industry-specific requirements, which may impact the AI management system and its context as outlined in Requirement 5.2.

- Feedback from internal audits, incident reports, or stakeholder communications, which can lead to a reevaluation of the AI deployment plan as indicated in B.6.2.5.
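The triggers above can be combined with a periodic review interval in a simple check. This is a minimal sketch under stated assumptions: the `Trigger` enum, the `review_due` function, and the 365-day default interval are hypothetical choices for illustration, since ISO 42001 does not fix a review frequency.

```python
from datetime import date, timedelta
from enum import Enum
from typing import Iterable


class Trigger(Enum):
    """Hypothetical labels for the update triggers listed above."""
    AI_SYSTEM_CHANGE = "change in AI systems or operational environment"
    REGULATORY_CHANGE = "new legal, regulatory, or industry requirement"
    AUDIT_OR_INCIDENT_FEEDBACK = "feedback from audits, incidents, or stakeholders"


def review_due(last_review: date,
               today: date,
               triggers: Iterable[Trigger],
               max_interval_days: int = 365) -> bool:
    """Return True if the SoA should be reviewed now.

    A review is due when any trigger has occurred since the last review,
    or when the assumed maximum interval between reviews has elapsed.
    """
    overdue = (today - last_review) > timedelta(days=max_interval_days)
    return overdue or any(True for _ in triggers)


last = date(2024, 1, 1)
print(review_due(last, date(2024, 6, 1), []))                           # False
print(review_due(last, date(2024, 6, 1), [Trigger.REGULATORY_CHANGE]))  # True
print(review_due(last, date(2025, 6, 1), []))                           # True
```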

Enhancing AI System Management Through Continuous Improvement

Continuous improvement of the SoA contributes to a dynamic AI management system by:

- Ensuring that risk management strategies are effective and current, aligning with Requirement 10.1 for continual improvement.

- Aligning the AI management system with best practices and compliance standards, fostering transparency and explainability as highlighted in C.2.11.

- Facilitating proactive responses to new threats and opportunities, reinforcing the management system’s adaptability and resilience.

Facilitation by ISMS.online

ISMS.online plays a pivotal role in the review and update process by:

- Providing a centralised platform for tracking changes and documenting updates, which is essential for maintaining the data provenance as per B.7.5.

- Offering tools for risk assessment and control management that align with ISO 42001, supporting the organisation’s efforts to meet Requirement 5.2.

- Enabling efficient collaboration and communication among stakeholders involved in maintaining the SoA, which is crucial for the communication of incidents as per A.8.4.

By utilising ISMS.online, organisations can streamline the continuous improvement process, ensuring that their SoA remains a living document that effectively supports the management and governance of AI systems. This approach is in line with the D.2 guidance, which encourages the integration of the AI management system with other domain-specific or sector-specific management systems for a unified governance approach.

Effective Communication and Awareness of the SoA

The Statement of Applicability (SoA) is a critical document within an AI management system, as it outlines the controls an organisation has implemented and the justification for their inclusion or exclusion, as detailed in A.26. Effective communication of the SoA is essential to ensure that all relevant stakeholders are aware of and understand the organisation’s approach to managing AI-related risks, in line with Requirement 7.5.3.

Strategies for Disseminating SoA Information

To ensure that the SoA is well-understood, organisations can:

- Conduct training sessions to walk stakeholders through the SoA’s contents and implications, ensuring that persons doing work under the organisation’s control that affects its AI performance are competent, as per Requirement 7.2.

- Utilise clear, non-technical language to explain the purpose and impact of each control, aligning with B.8.2, which emphasises the importance of providing necessary information to users of the AI system in an understandable manner.

- Provide accessible documentation, such as summaries or infographics, that highlights key points of the SoA, ensuring that documented information is available and suitable for use, where and when it is needed, as required by Requirement 7.5.

Role of ISMS.online in Enhancing SoA Understanding

ISMS.online can assist in these communication efforts by:

- Offering a platform for sharing the SoA and related documentation with stakeholders, aligning with Requirement 7.5.3 for controlling documented information required by the AI management system.

- Providing features that allow for easy updates and distribution of the latest SoA information, which can be associated with A.6.2.6 for defining and documenting elements for the ongoing operation of the AI system.

- Enabling feedback mechanisms where stakeholders can ask questions or seek clarifications, in line with B.8.3 and B.8.4, ensuring that interested parties can report adverse impacts and that the organisation communicates incidents effectively.

By actively engaging with stakeholders and utilising the tools provided by ISMS.online, organisations can foster a culture of awareness and understanding regarding their AI management system and the importance of the SoA in maintaining ethical, secure, and effective AI operations, as emphasised in C.2.11 for transparency and explainability and D.2 for the integration of AI management system with other management system standards.

Addressing Challenges in SoA Development and Maintenance

Developing and maintaining a Statement of Applicability (SoA) as per Requirement 1 of ISO 42001 can present several challenges for organisations. These challenges often stem from the dynamic nature of AI technologies and the evolving landscape of risks and regulations.

Common Challenges in SoA Development

Organisations may encounter difficulties such as:

- Aligning the SoA with Rapid Technological Changes: AI systems evolve quickly, necessitating frequent updates to the SoA to ensure it reflects current technologies and controls, in accordance with Requirement 5.2 and A.6, which emphasise the need for actions to address risks and opportunities, including managing changes in AI systems.

- Integrating Ethical Considerations: ISO 42001 places a strong emphasis on ethical AI, which requires organisations to incorporate considerations beyond traditional risk management, as outlined in C.2.5 and C.2.11, focusing on fairness and transparency in AI.

Best Practices for Effective SoA Maintenance

To maintain an effective SoA, organisations should:

- Conduct Regular Reviews: Periodically reassess the SoA to align with new AI developments and emerging risks, in line with Requirement 9.2 and B.2.4, which guide the review process of the AI policy.

- Engage Stakeholders: Involve a broad range of stakeholders in the SoA development process to ensure comprehensive risk coverage, corresponding with Requirement 4.2 and B.3.3, emphasising the importance of stakeholder engagement.

- Document Thoroughly: Maintain detailed records of the rationale behind the inclusion or exclusion of controls, particularly those related to Annex A of ISO 42001, supported by Requirement 7.5 and B.7.5, which stress the importance of documentation.

Overcoming Challenges with ISMS.online

ISMS.online supports organisations in overcoming these challenges by providing:

- Centralised Documentation: A single platform to manage and update the SoA, ensuring consistency and accessibility, supporting Requirement 7.5.1 for documented information and B.4.2 for maintaining records.

- Automated Workflows: Tools to streamline the review and update processes, reducing the administrative burden, aligning with Requirement 6.3 and B.6.2.6, which discuss the importance of managing changes effectively.

- Comprehensive Control Library: A repository of controls from Annex A of ISO 42001, aiding in the selection and justification of applicable controls, in line with A.1 and B.4, which provide guidance on selecting and documenting controls.

By leveraging these strategies and tools, organisations can ensure their SoA remains a robust and compliant document that effectively supports the management of AI systems, consistent with Annex D, which discusses the application of the AI management system in various organisational contexts.

Complete ISO 42001 Support with ISMS.online

ISMS.online equips organisations with the necessary tools and resources for achieving and maintaining compliance with Requirement 4, ensuring robust, compliant AI management systems aligned with the latest standards. The platform’s comprehensive suite streamlines the development and maintenance of the Statement of Applicability (SoA), a critical component of Requirement 7.5.

Tools and Resources for SoA Development and Maintenance

ISMS.online offers:

- A centralised control library from Annex A, aiding in the selection and justification of applicable controls, crucial for Requirement 5.5 in establishing a risk treatment process.

- Automated workflows for documenting, reviewing, and updating the SoA, ensuring the integrity and security of documented information as required by Requirement 7.5.

- Collaboration features that enable stakeholders to contribute to and understand the SoA, supporting Requirement 5.3 by ensuring that responsibilities and authorities for relevant roles are assigned and communicated within the organisation.

Choosing ISMS.online for ISO 42001 SoA Needs

Organisations opt for ISMS.online due to its:

- Alignment with Requirement 6, providing a clear path to compliance through its risk assessment and treatment capabilities.

- User-friendly interface that simplifies complex compliance processes, in line with the guidance provided in B.2.2 for implementing AI policies.

- Scalable solutions that grow with your organisation’s AI management needs, demonstrating adaptability to various domains and sectors as outlined in Annex D.

Getting Started with ISMS.online

To begin with ISMS.online:

- Visit the platform's website and explore the various features and services offered, which support the establishment and implementation of an AI management system as per Requirement 4.4.

- Contact the ISMS.online support team for a guided walkthrough of the platform's capabilities, ensuring that your organisation's AI management system aligns with Requirement 6 for planning.

- Utilise the available resources to initiate the SoA development process within your organisation's AI management system, in accordance with Requirement 7.5 for documented information.

By leveraging ISMS.online, organisations can confidently navigate the intricacies of ISO 42001 compliance, ensuring that their AI systems are managed with the highest standards of security, ethics, and effectiveness, aligning with the organisational objectives and risk management outlined in Annex C.