Biden’s AI Progress Report: Six Months On

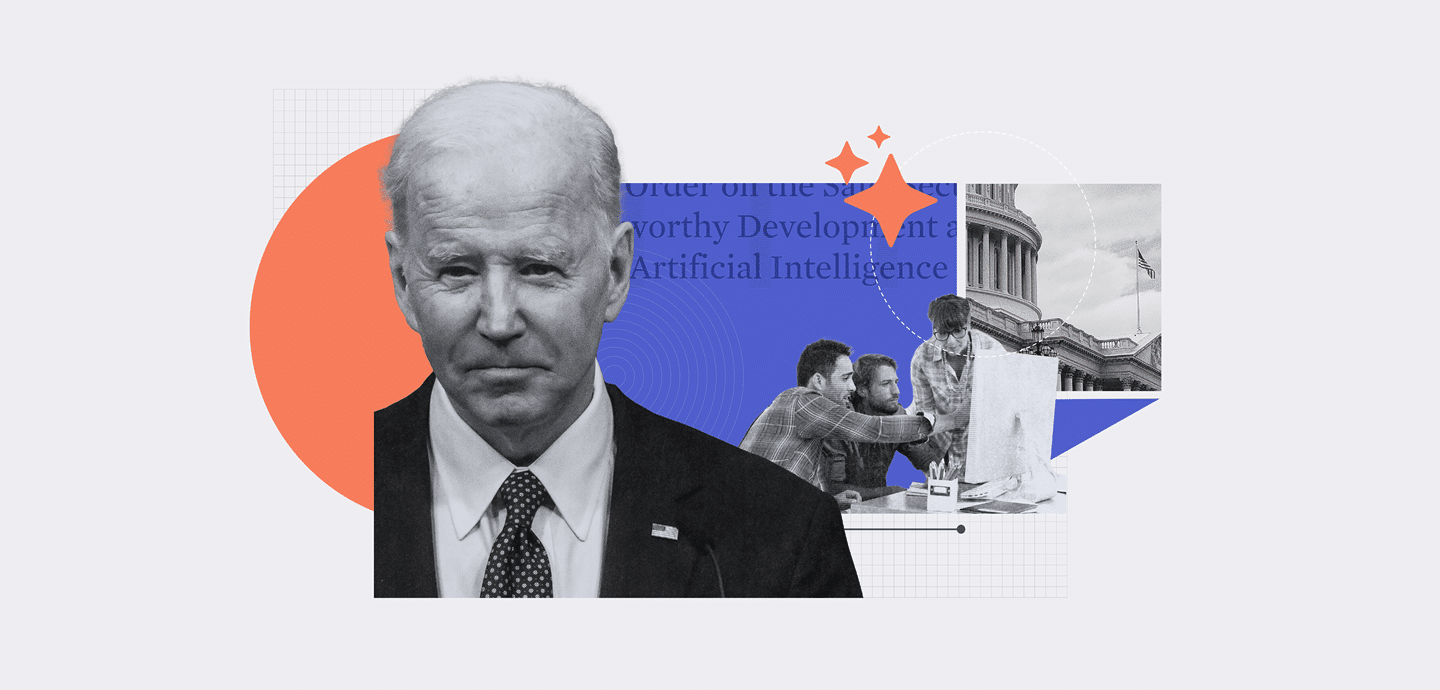

It has been six months since the White House issued a sweeping executive order (EO) addressing the need for responsible AI. The Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence addressed concerns over the security and safety of AI, along with its effect on civil liberties and workers’ rights. It also acknowledged the need to drastically increase the federal workforce’s AI skill set. These concerns translated into a wide range of actions across federal agencies. Half a year on, how is implementation going?

Coordinated Action

The EO was coordinated with activities across the executive branch. The weeks after its announcement on October 30, 2023 saw a flurry of activity across various agencies, such as the Department of State’s announcement of its Enterprise AI Strategy on November 9.

The Department of Defense also clearly had its response lined up. Almost immediately after the publication of the EO, the DoD released its 2023 Data, Analytics, and Artificial Intelligence Adoption Strategy, which superseded its 2018 AI strategy. This policy is especially important for the Pentagon, which according to a Brookings Institution report accounts for the lion’s share of federal AI contract spending. Military AI contracts accounted for $557m of the $675m committed to AI contracts across all agencies in the year to August 2023, the report found.

A Solid Report Card

On March 28, the White House reported that agencies had met all of the 90- and 150-day deadlines under the EO. Measures as detailed in this timeline analysis included the production of a Department of the Treasury report on risk management for AI in financial services, and a self-assessment by each agency of the risks AI poses to critical national infrastructure.

Various agencies have contributed to the AI safety effort. The Department of Commerce created the US AI Safety Institute within the National Institute of Standards and Technology (NIST) on November 1, and has since assigned staff and created a consortium to support the organization. In mid-April, the NSA published its guidance on deploying AI systems securely.

The National AI Advisory Committee (NAIAC) pitched in on issues of AI safety, recommending the introduction of a system to report adverse events from AI systems. The committee’s recommendation would potentially use the Office of Management and Budget (OMB)’s definitions of safety- and rights-impacting AI as a framework. These were laid out in the OMB’s March 28 memorandum on Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence, which cemented many of the mandates that the EO set for executive branch agencies.

The OMB memorandum set out minimum safety and security practices for rights-impacting AI, and required agencies to begin following them by December 1, 2024. If they cannot follow these guidelines, they must stop using the AI in question.

Other areas of progress based on demands in the EO include designing and implementing plans to recruit more AI talent into the US government. The advisory committee made several recommendations in Q4 2023. It called for enhancing AI literacy among Americans, and also recommended a campaign for lifelong AI career success. The White House followed up with a call for AI talent in a hiring drive in January.

The progress has extended to international statecraft. The State Department announced in November that 47 states had endorsed the Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy, an initiative that it launched in The Hague in February 2023.

The Defense Production Act: A Tense Issue

EOs typically affect the private sector either through their impact on federal contractors, or through directions given to agencies that enforce specific rules. Sometimes, however, the White House will invoke special powers. In this case, it used the Defense Production Act (DPA) to regulate dual-use foundation models.

Under this 1950 legislation, the President can compel private sector companies to follow given production rules. In this case, developers of the most powerful AI models must report on their AI safety testing, along with their use of computing power above a given threshold. Providers of computing capacity must also report when foreign customers use that capacity to train large AI models.

NetChoice, a trade association for tech companies that numbers Google, Meta, and Amazon among its members, believes the government is overstepping with its wide-ranging regulation. Carl Szabo, the organization’s general counsel, claims that the regulations, including the use of the DPA as outlined in the EO, will have a chilling effect on private sector investment.

“When you start adding in regulatory limitations and regulatory checks that have to be made before you can deploy an artificial intelligence system, that is going to limit my ability as an investor to bring a product to market and to get that necessary return on investment,” he said.

He also believes that the EO is so wide-ranging that it could be challenged in the courts.

“I can’t tell you when it’s going to happen, but I can say that there are definitely discussions amongst many people I’ve heard from about bringing a challenge,” he added.

In the meantime, the government is pressing ahead with its promise to address AI risks and responsible use, following a growth of almost 260% in its financial commitments to AI contracts during the year to August 2023.

Companies expecting to use AI, especially those that might be affected by US government contracts, would do well to follow emerging standards for responsible usage. NIST has published the AI Risk Management Framework, while the recently published ISO/IEC 42001 standard for deploying and maintaining AI systems is another useful framework to follow. With the passing of the EU’s AI Act in March, the need to demonstrate best practice when implementing AI is more acute than ever.