Should Software Vendors Be Held Liable for Insecurity?

Table Of Contents:

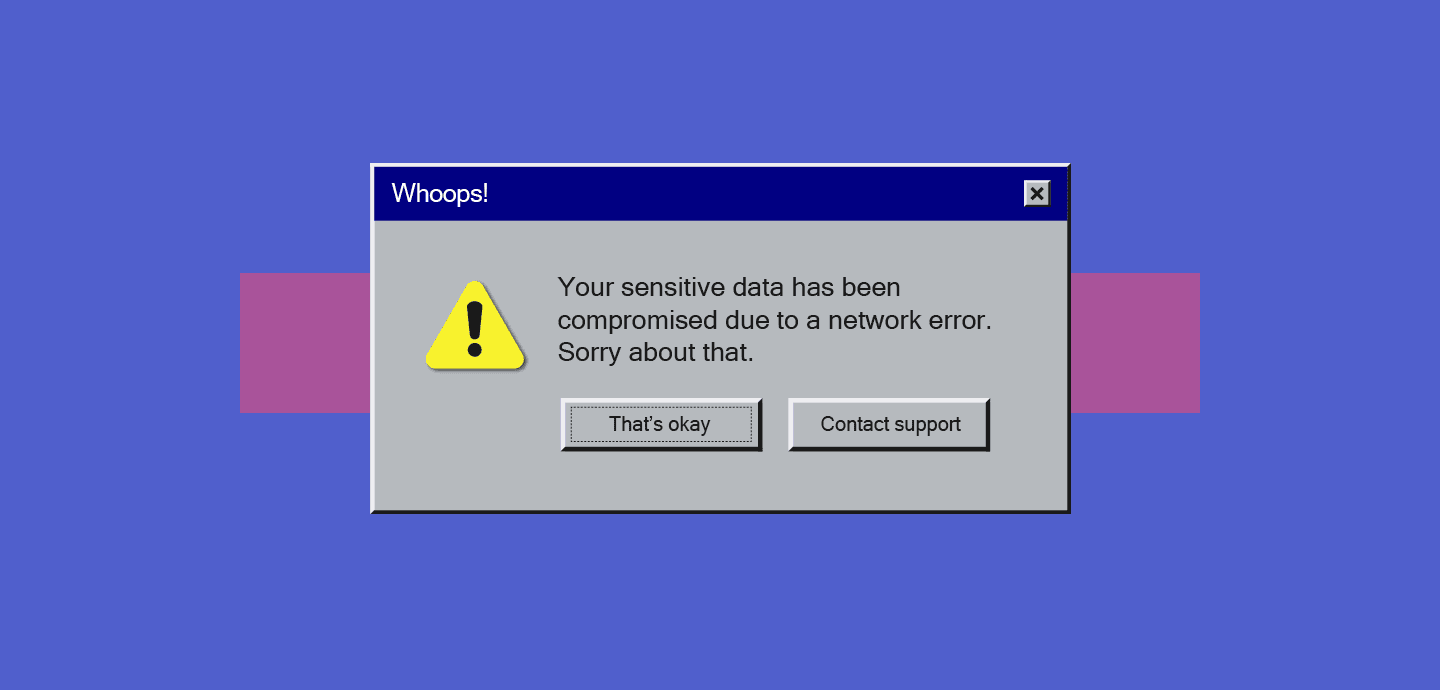

A zero-day flaw in your go-to enterprise software application allowed attackers into your network and compromised sensitive data. It’s going to cost a lot to fix before you even get to regulatory fines and customer lawsuits – and the application isn’t even yours. Who should pay?

It probably won’t be the company that sold you the software. Their end-user license agreements (EULAs) typically limit their liability. Most of us don’t read these because they’re too long and too complex.

Over the last few months, demands to change this situation have been growing louder, reaching the top levels of government. In February, Jen Easterly, director of the Cybersecurity and Infrastructure Security Agency, explicitly called for vendor liability during a speech at Carnegie Mellon University.

The world has come to accept unsafe technology as the rule when it should be the exception, she said. “Technology manufacturers must take ownership of the security outcomes for their customers.”

Easterly called for legislation that would stop technology companies from disclaiming liability by contract. The Biden administration's National Cybersecurity Strategy, released this March, echoed the call for such a law.

A long-standing debate

Governments have mulled this problem before. In 2007, the UK House of Lords Science and Technology Committee recommended holding software vendors accountable, despite hearing arguments from software developers against vendor liability on several grounds.

One objection was the sheer complexity of modern software environments. Developers pointed out that many types of software coexist on the same systems and might interact with each other in unexpected ways. Can we reasonably expect a software vendor to predict every interoperability edge case?

Another worry was the potential for user error. What if a user misconfigured the software because of a confusing user interface, leaving it, or a component it interacted with, insecure? What if the user failed to apply a patch for a legitimate reason, such as a regulatory constraint? Is there such a thing as partial liability for misconfiguration, and how might that be decided?

Vendor liability would also create other challenges for the companies that have to comply with it. A lot of software isn't built in a vacuum; it draws on third-party libraries, often released under open-source licenses, that might contain their own security issues. Log4Shell, a vulnerability in the Apache Log4j Java logging library that went unnoticed from 2013 until its disclosure in late 2021 and affected thousands of companies, is a prime example. Who pays if the software you built happens to use an insecure third-party component?

Your view of software should extend beyond your own boundaries, suggested Easterly. She echoed the White House's call for a Software Bill of Materials (SBOM) that documents the components and provenance of assembled software.

What does secure software look like?

Holding vendors of a complex product accountable raises other issues, such as how we even define software security. Definitions lie along a continuum, ranging from the inadequate (passing a few basic static tests) to the impractical (formal verification). Formal verification mathematically proves that software behaves as an abstract model, or formal specification, says it should. Such systems do exist, but they're used for specialised applications and involve a lot of extra effort.

The most realistic definition sits somewhere in the middle: documented evidence of best practices that embed security directly into software production from the design phase onward. NIST's Secure Software Development Framework (SSDF), which the National Cybersecurity Strategy recommends, articulates many of these.

Another of Easterly's recommendations was the use of memory-safe languages. Many modern software security flaws stem from memory misuse, such as buffer overflows and use-after-free errors. As fast, low-level languages that require programmers to manage memory themselves, C and C++ are notably weak here. Go, Python, and Java are higher-level languages that manage memory automatically on the programmer's behalf. Rust, a more recent language originally developed at Mozilla, is also memory-safe, and it is the first language other than C and assembly to be supported in the Linux kernel.
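To make the difference concrete, here is a minimal illustrative sketch in Rust (our own example, not code from Easterly or CISA). The hypothetical `read_byte` helper uses the standard slice method `get`, which checks the index against the buffer's length and returns `None` when it is out of range. An unchecked array read in C, by contrast, could silently return whatever happens to sit in adjacent memory, which is the root of many over-read bugs.

```rust
// Illustrative sketch only: safe Rust checks slice indexes, so an
// out-of-bounds read is caught instead of silently reading adjacent memory.

/// Hypothetical helper: returns the byte at `index`, or None if the index
/// falls outside the buffer. `get` performs the bounds check.
fn read_byte(buffer: &[u8], index: usize) -> Option<u8> {
    buffer.get(index).copied()
}

fn main() {
    let buffer = [0x41u8, 0x42, 0x43];

    // In range: the value is returned.
    println!("{:?}", read_byte(&buffer, 1)); // Some(66)

    // Out of range: the check fails safely rather than over-reading.
    println!("{:?}", read_byte(&buffer, 10)); // None

    // Plain indexing (buffer[i]) with an out-of-range run-time index is
    // also checked: the program panics rather than reading past the array.
}
```

These checks carry a small run-time cost, which the compiler can often optimise away; that is the trade-off memory-safe languages make to rule out this whole class of bug.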

Easterly also called for transparent vulnerability disclosure policies among software vendors. All too often, vendors do their best to keep security bugs quiet, ignoring or sometimes discouraging security bug reports. She said a more open and collaborative relationship with cybersecurity researchers would help close security gaps in software.

Intermediate steps

While it waits for Congress, the White House is relying on the Executive Order on Improving the Nation's Cybersecurity, signed in May 2021, to nudge vendors in the right direction. The Oval Office can't directly punish companies for making crummy code, but it can use federal purchasing power: the EO sets secure development requirements for software sold to federal agencies.

We can also look to standardisation for answers. The updated ISO/IEC 27001:2022 standard includes Annex A Control 8.28, Secure coding, which requires secure coding principles to be applied to software development, covering both internally developed software and the reuse of external code. With many companies relying on this certification as a key differentiator, the addition of this control creates further pressure to improve and document software security.

Changing political tides

While vendor liability is a sticky topic, Congress hasn't shied away from using legislation to tackle complex technology issues in the past. Its reluctance to tackle this particular problem owes much to corporate interest, backed by a software industry with considerable lobbying power.

Nevertheless, more people are on a mission to hold an intensely competitive industry to account. Facebook might have officially abandoned its old internal slogan, “move fast and break things”, but that’s still a de facto operating model for tech firms racing to innovate. As software affects more of our everyday lives, some kind of balance between reckless gee-whiz feature development and measured responsibility for secure operation is more necessary than ever.