Why It’s Time To Start Planning For The EU AI Act

Few technologies have the potential to worry regulators and delight businesses quite like artificial intelligence (AI). It’s been around for years in various forms. But European lawmakers have felt compelled to step in as intelligent algorithms become increasingly embedded in business processes and citizen-facing technologies.

The challenge for businesses developing such systems will be to assess whether they meet the strict criteria needed to reach the market within the bloc. UK firms in particular will need to decide how to manage the diverging regulatory regimes.

What’s In The AI Act?

Governments across the globe are looking more closely at AI in a bid to minimise abuse or accidental misuse. The G7 is discussing it. The White House is attempting to establish ground rules to protect individual rights and ensure responsible development and deployment. But it is the EU that is furthest along the path to fully formed legislation. Its proposals for a new "AI Act" were recently approved by the European Parliament.

The AI Act takes a risk-based approach, classifying AI systems into four tiers: "unacceptable", "high", "limited" and "minimal" risk.

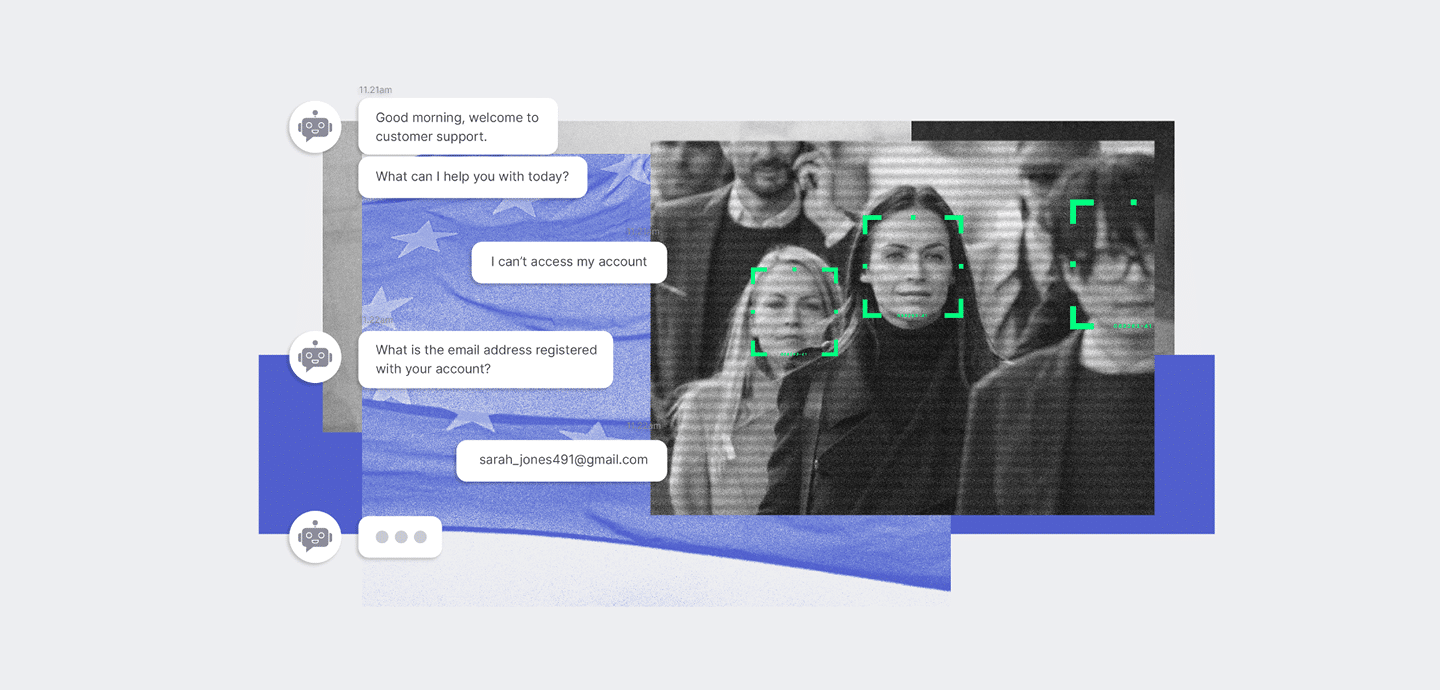

Unacceptable-risk systems are those considered a threat to people's "safety, livelihoods and rights". They include facial recognition technology, government social scoring and predictive policing, and will be banned outright.

High-risk systems could include AI used in critical infrastructure, where it might put citizens' health at risk, or in education and vocational training, where it could be used to score exams. Other examples include:

- AI used in the workplace, such as for sorting CVs.

- Credit scoring.

- Verifying documents at border control.

- Evaluating evidence in policing.

MEPs also added social media recommendation systems and AI used to influence voters during elections to the high-risk category.

Limited-risk AI systems, such as chatbots, carry transparency obligations: users must be informed that they are interacting with a machine.

Minimal- or no-risk systems, such as AI spam filters or AI-enabled video games, can be used without restriction. The EU says the majority of AI products currently in use fall into this category.

What Are The Obligations For Complying Firms?

Developers (referred to as "providers" in the Act) of potentially high-risk AI must jump through multiple hoops before their products are allowed on the market. These include:

- Risk assessments and mitigation systems

- Maintaining high-quality datasets to minimise risk and discrimination

- Logging of activity to ensure results are traceable

- Detailed documentation of the system and its purpose to share with authorities

- Clear and “adequate” information for users

- “Appropriate” human oversight to minimise risk

- High levels of "robustness, security and accuracy"

Once a system is developed and has passed a conformity assessment, it will be registered in an EU database and given a CE mark. The obligations don't end there: corporate users of AI models must ensure continual human oversight and monitoring, while providers must keep monitoring their systems once they are on the market. Both must report serious incidents and any malfunctioning of AI models.

How Will The Law Impact UK Firms?

The difference between this prospective regime and the UK's is stark. The UK's approach is billed as more innovation-driven and pro-business, according to Edward Machin, a senior lawyer in the data, privacy & cybersecurity team at Ropes & Gray.

“Unlike the EU, the UK government doesn’t plan to introduce AI legislation, nor will it create a new AI regulator. Instead, existing agencies (e.g., ICO, FCA) will support the framework and issue sector-specific guidance,” he tells ISMS.online.

“Although the UK is somewhat of an outlier in its light-touch approach, the framework is based on principles – security, transparency, fairness, accountability and redress – common to how most legislators are currently thinking about AI regulation.”

However, UK firms with EU operations are unlikely to benefit from this lighter-touch approach unless they are prepared to run two diverging compliance regimes, with the additional overheads that entails.

“If the UK continues to take a lighter-touch approach to regulation than the EU, British organisations subject to the AI Act will need to decide whether or not to adopt a single, Europe-wide approach to compliance,” Machin continues.

“The alternative is to have separate processes for the UK and EU, which for many companies will be expensive, difficult to operationalise and probably of limited commercial benefit. If businesses can clearly and easily separate certain compliance obligations by geography, they should, of course, consider them while acknowledging that in practice, they will also need to meet the higher EU standards in other cases.”

What To Watch Out For

Of course, the rules will remain in a state of flux until they are finalised. The European Commission, member states and parliamentarians will negotiate the details in a series of "trilogue" meetings. For example, it remains to be seen how generative AI models will be treated. All that lawmakers have said so far is that such models will need to follow transparency requirements and be prevented from generating "illegal content" and infringing copyright. Stanford researchers have already claimed that many AI models, including GPT-4, don't currently comply with the draft AI Act.

Machin adds that businesses developing or using high-risk models must also keep a close eye on what happens next.

“There will likely be disagreements regarding how ‘high-risk’ AI systems are defined, and businesses will be closely watching how this shakes out, given that foundational models and technologies that increasingly impact people’s lives (such as in employment and financial services) will be caught by this definition,” he argues.

Jonathan Armstrong, a partner at Cordery, reckons it will be at least four months before companies get the clarity they’re looking for.

“The advice we’re giving our clients at the moment is to do a formal assessment, but this needn’t be too onerous. We’ve helped clients adapt their data protection impact assessment process, for example. This is a good base as it looks at some of the things the AI Act will address, like fairness, transparency, security and responding to requests,” he tells ISMS.online.

“That will also help them deal with the five AI Principles in the UK [policy paper] too. I think it’s not too much extra burden for most organisations if they are doing GDPR right – but for some, that’s a big if.”