Why Are So Many Leaders Failing the AI Trust Test, and Why Does Evidence Now Matter More Than Intention?

The era when “trust” in artificial intelligence could rest on glossy brochures or handshakes is over. Every compliance officer, CISO, and CEO now works in a climate where trust is tested, sometimes by regulators but always in the court of public and board opinion. If your AI can’t demonstrate its own fairness or show exactly how it detects bias, hope and good intentions will get you nowhere. The challenge is stark: market access, supply contracts, and even investor confidence increasingly depend on a bias defence that can be proven in real time. It’s not about appearing credible; it’s about proving it every day and surviving scrutiny when it arrives.

When the outsider shows up, whether regulator, board, or journalist, trust evaporates unless your AI can pull the receipts in seconds.

The silent reality: most organisations don’t realise the magnitude of this risk until they’re staring down a crisis or the headlines have already broken. By then, credibility drains, markets wobble, and the cost of rebuilding trust climbs higher than any compliance budget could cover. For leaders, the old doctrine of “we’re fair because we try to be” guarantees exposure. The new minimum? Mapped, living controls that defend themselves and produce a trail an auditor can trust without a cross-examination.

Why Regulatory, Board, and Market Pressures Demand a New Trust Perimeter

Traditional playbooks (annual reviews, static reports, spreadsheet compliance) collapse under today’s real-time demands. Regulators and critical customers ask for mapped proof: which person took which step, at which hour, to defend against bias? Boardrooms echo the same demand when a journalist or competitor throws a question in their direction.

- Mapped, operational controls eclipse retroactive intentions: A policy that “cares about fairness” is irrelevant if your logs, dashboards, and alerts don’t prove it.

- Compliance is now evidence-based and adversarial: Laws and standards like the EU AI Act and ISO/IEC 42001, along with global procurement rules, require live controls, test logs, and a defence against bias that is reproducible and immediate.

- High-trust markets move fast, and one botched outcome can erase years of goodwill: Anything less than daily vigilance courts irrecoverable risk, shaking board confidence, slowing deals, and shrinking your market.

The uncomfortable truth: most “AI trust” failures brewed quietly and became visible only when a crisis hit. Waiting to be tested isn’t a plan; it’s a fast lane to disaster.

How Does Bias Creep In? The Quiet Threats No Leader Should Ignore

You might assume bias comes from reckless engineers or tainted data. But most bias leaks in quietly, through “default” models, historical patterns, or feedback loops that nobody contests. In regulated spaces like healthcare, finance, and the public sector, failing to catch silent bias isn’t just a blunder. It’s legal, reputational, and operational exposure, and it often arrives without warning.

Bias compounds like technical debt: it’s invisible until the numbers spike and suddenly you’re in the crisis zone.

Today, “good enough” is a dangerous myth. Most boards see through vague commitments. Regulators assume bias unless you prove otherwise. For compliance teams and executives, the challenge sharpens: bias must be hunted, challenged, and neutralised as a default operating principle.

Where Does Discrimination Hide? The Unseen Entrances

You don’t need a rogue coder for bias to make a home in your system. It arrives through subtle cracks:

- Selection bias: Gaps in data, or patterns that exclude certain groups, quietly lock out candidates or customers before anyone notices.

- Label bias: Building models on old, unjust outcomes embeds outdated thinking and bakes it into the next cycle.

- Compounding feedback loops: One small error, left unchecked, gets recycled and magnified across every new model. Suddenly, disadvantage multiplies.

The fix is not another committee or an annual model review. The real defence is relentless vigilance: living controls and real-time evidence, not dusty policy binders.


Why Static Controls Fail, and How Audit-Ready Became the Real Cost of Entry

Most organisations still hope annual assessments, compliance certifications, or “see no evil” documentation will hold. This hope rarely survives a serious challenge from a procurement board, regulator, or even a sceptical journalist. Frameworks now require mapped, dynamic controls that are tested and ready for evidence on demand.

- ISO/IEC 42001 transformed “trust” from a marketing promise into a structure: every decision, control, and remediation must be traceable, explainable, and justifiable.

- The EU AI Act exposes organisations to fines and public scrutiny for unfair or biased outcomes. Intent is irrelevant; legal compliance and defensible evidence take first place.

- NIST AI RMF and sector-specific overlays demand fresh, ongoing proof; tick-box comfort is dead.

- Operational overlays (healthcare, HR, banking) layer privacy, explainability, and rescue plans on top. You need to surface live evidence before the market or regulator asks.

Living evidence (logs, dashboards, immutably mapped actions) is now the only shield. The static compliance binder is a relic.

A key question: if challenged, can you, or any member of your compliance or technical team, link your AI controls, evidence, and remediation to every relevant framework? Can you hand over time-stamped logs (not PowerPoints) in minutes, not weeks? If not, you’re flying without a net.

Trust by Design Means Proof, Not Promises

A twenty-first century AI “playbook” builds in controls to surface, log, and defend against bias right at the core:

- Create evidence, not hope: Document the datasets, data journeys, and every reason they were chosen.

- Map every input, every override, and every human decision: Build a chain a regulator can follow without guesswork.

- Treat every decision as a logged event, not a one-off: Only then does “trust by design” have substance.

Anything less is vapour when you’re questioned on your past, or when your leadership is compared to a more transparent competitor.

What Does “Proof of Fairness” Require Now? Building Evidence as a System, Not a Slogan

Annual policy statements or “we take fairness seriously” won’t cut it any longer. The gold standard is enforceable controls that shift from talk to evidence: turning every AI-triggered action into a logged event, flagging bias risk before it goes live, and producing testable proof for procurement or regulatory eyes.

- Data lineage: On-demand tracking of every data set, every transform or decision, and justification for use in live models.

- Model cards: Each model is matched to a detailed profile-its strengths, limits, purpose, and audit trail.

- Immutable logs: Every tweak, retrain, override, and result is logged, signed, and tamper-proof.
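One common way to make a log tamper-evident is to chain entries by hash, so that each entry commits to the one before it. The sketch below is a minimal illustration of that idea using only the Python standard library; the function names (`append_event`, `verify_chain`) and the entry fields are assumptions made for this example, not part of any named product or standard. It does not sign entries or protect against truncation of the newest entries, which a production system would also need to address.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash a canonical JSON form of the entry body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(log: list, actor: str, action: str, detail: dict) -> dict:
    """Append an event whose hash also covers the previous entry's hash."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": action,  # e.g. "retrain", "override", "parameter_change"
        "detail": detail,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Return False if any entry was edited, reordered, or cut from the middle."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

Because every hash depends on the previous one, changing an old entry invalidates the chain from that point on, which is what lets an auditor check the trail without taking anyone's word for it.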

Fairness is not the story you tell; it’s the evidence you show, on demand, to any board or regulator.

The bar for “audit ready” is live, not theoretical. Leading organisations now automate the capture, routing, and surfacing of evidence, documenting each mitigation and showing exactly how the system responds, and they do it better and faster than most competitors.

Habits and Tools That Define Modern AI Leaders

- Bias flagging at every launch, with automated alerts to the exact stakeholder with accountability.

- Retraining triggers activated by drift or flagged risk, not by a dusty schedule.

- Platforms that join human oversight and technical controls, ensuring remediation is context-aware and defensible.
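A drift-based retraining trigger can be sketched with the population stability index (PSI), one widely used drift measure. This is an illustrative choice, not a method the frameworks above prescribe. The function names, the ten-bin layout, and the 0.2 threshold (a common rule of thumb) are all assumptions for the example.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(expected, actual, threshold=0.2):
    """Trigger retraining when drift exceeds the (assumed) threshold."""
    return population_stability_index(expected, actual) > threshold
```

In practice a check like this would run on a schedule against live inputs, and a positive result would be logged and routed to the accountable owner rather than retraining silently.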

Leadership isn’t hoping the system works; it’s demonstrating to everyone, inside and out, that fairness is defensible, monitored, and transparent.


Is “Good Enough” Security Still Exposing You? Why Real-Time Controls Define Compliance Now

The shift from “last certification” to “real-time readiness” is simple: the world doesn’t wait for you to catch up. Audits, attacks, and supply chain scrutiny are constant. Bias that was “safe” at launch begins to grow, adapt, and shift as soon as your AI powers on.

- Live dashboards: Surface bias, drift, and anomalies minute to minute instead of waiting for quarterly surprises.

- Stress-testing: Models validated not just for routine correctness, but for edge cases and unexpected data.

- Subgroup tracking: Not just “accuracy,” but detection and analysis of every impact across groups by gender, age, location, and circumstance.
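Subgroup tracking can start as simply as breaking a headline metric down by group. The sketch below assumes each record is a dictionary with a group attribute plus `label` and `prediction` keys; those key names, the function names, and the 0.05 gap tolerance are hypothetical choices for illustration.

```python
from collections import defaultdict


def subgroup_accuracy(records, group_key):
    """Accuracy per subgroup, keyed by the value found under group_key."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for r in records:
        g = r[group_key]
        totals[g] += 1
        hits[g] += int(r["label"] == r["prediction"])
    return {g: hits[g] / totals[g] for g in totals}


def flag_gaps(per_group, max_gap=0.05):
    """Groups whose accuracy trails the best-performing group by more than max_gap."""
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > max_gap)
```

An overall accuracy figure can look healthy while one group lags badly; reporting the per-group numbers side by side is what exposes that gap.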

Today’s microscope is always on. Quarterly compliance won’t survive next week’s risk.

The loop has tightened: bias is found, logged, and remediated, with both evidence and remediation surfaced in real time to those who need them. The old compliance cadence is too slow for today’s threats.

How to Build a Defence That Responds, Not Just Reports

Technical controls need to empower fast and transparent remediation. The core must be built for speed and proof, not comfort:

- Real-time flagging and mitigation, logged with full detail.

- Updates, overrides, and fixes are all time-stamped, mapped, and attached to specific users or contexts.

- The board, the regulator, and your critical customers can always see improvements or remediation, and are never left wondering whether policy fits practice.

The outcome: proactive transparency. Today, hiding behind “the system worked before” is not a viable argument.

Why Explainability, Human Veto, and Oversight Are Now Non-Negotiable

If you can’t explain your AI’s choices in a way your grandmother would understand, expect trouble, if not from the regulator, then from the market, the public, or an activist investor. Black-box logic draws fire and legal action. Transparency across every output has become a legal and operational necessity.

- Plain-language justification of every material AI judgement: Board, customer, or user should never be left guessing.

- Escalation and override practised and working: Human oversight can veto an outcome, and every instance is captured in the log.

- Audit trails for every override, challenge, and fix: “We have it covered” means little if the proof is invisible when challenged.
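To make “every override is captured” concrete, an override can be modelled as a record that cannot be created without a named reviewer and a written reason. The sketch below is one possible shape under that assumption; `OverrideRecord`, `record_override`, and the field names are hypothetical and chosen only for this example.

```python
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class OverrideRecord:
    decision_id: str
    model_outcome: str   # what the AI system decided
    final_outcome: str   # what the human reviewer decided instead
    reviewer: str
    reason: str          # plain-language justification
    timestamp: str


def record_override(audit_log, decision_id, model_outcome, final_outcome,
                    reviewer, reason):
    """Log a human veto; reject it if the reviewer or the reason is missing."""
    if not reviewer.strip() or not reason.strip():
        raise ValueError("an override needs a named reviewer and a plain-language reason")
    record = OverrideRecord(
        decision_id, model_outcome, final_outcome, reviewer, reason,
        datetime.now(timezone.utc).isoformat(),
    )
    audit_log.append(asdict(record))
    return record
```

Refusing the override when the justification is blank is a deliberate design choice here: it keeps the audit trail from filling up with entries that say who acted but not why.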

Decisions made by invisible logic don’t last long in court, the media, or the market.

The staff responsible cannot “trust the tech” by default; they must know the escalation protocol and be ready to use it. Regular drills, captured logs, and public proof of real overrides, not just hypothetical policies, are evidence that you actually walk your talk.

Embedding and Testing Real Oversight

- Train and drill every staff member involved in oversight: simulate live incidents, don’t settle for e-learning.

- Run regular, logged tests of your escalation and override systems to show that fail-safes aren’t theoretical.

- “Escalate and log” should be muscle memory, not a memo nobody reads.

Failing to embed genuine human oversight means your regulatory and reputational risk compounds daily.


Radical Transparency: How Top Firms Prove and Publish AI Fairness

The last decade’s “trust us” era is dead. Leaders now measure, publish, and invite scrutiny, not just of fairness when things go well, but of failures and remediations when they happen. Procurement, boards, and the public want to see the log, not just the success story.

The real standard is radical transparency: log it, measure it, and let others check your work.

What Boards and Auditors (and Critics) Now Demand

Beyond dashboards and metrics for show, genuine evidence must surface live. The benchmark? Fast evidence, open reporting, credible response.

| Fairness Metric | Expected By | Checked / Published |
|---|---|---|
| 80% Rule Compliance | Procurement, regulators | Quarterly |
| Disparate Impact Logs | Board, audit, market | On demand |
| Actioned Remediation | Brand, regulator, board | Real-time |

Leaders map every event from detection to fix, show who acted, what happened, and keep this chain audit-ready. Everything else is theatre.

How to Tie Everything Together-for Proof, Audit, and Leadership

- Publish fairness reports and up-to-date audit logs for all critical models.

- Tie every remediation to a logged event, so learnings go public rather than into a report nobody reads.

- Invite external review; confidence comes from tested, not claimed, controls.

When procurement asks, “How do you handle bias?” or the regulator calls, your answer isn’t “We try our best.” It’s, “Here’s the evidence.”

Automate Trust: Why ISMS.online Puts Compliance and Fairness Defence on Autopilot

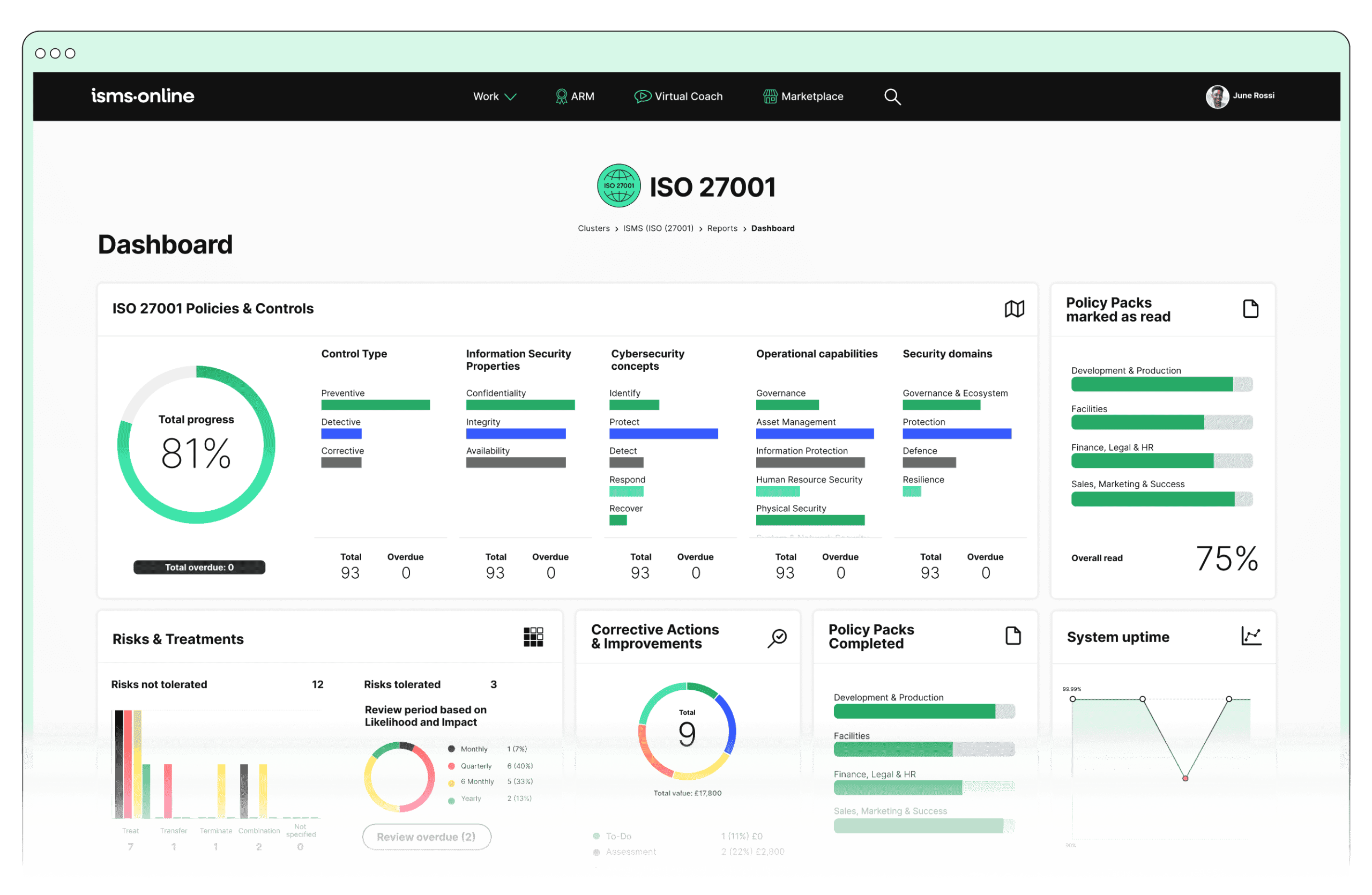

In a regulated space, spreadsheets, emails, and heroics don’t keep you out of the crisis zone. Modern leaders automate the surfacing and mapping of every bias control. ISMS.online unites defences, logs, and evidence: no more lag, no more missing paperwork, no more ambiguity about who did what. Your claim of fairness is matched by real-time controls, compliance-mapped logs, and a system that makes both board and regulators nod.

Compliance isn’t a side job or a special review; it’s a full-time process, and automation is your shield.

Through ISMS.online you:

- Surface and link all controls, detection, and remediation for any system, without friction.

- Map controls to global, sector, and legal frameworks, so you’re always audit-ready, not scrambling post-hoc.

- Turn logs and discovery into board-ready evidence: no excuses, instant trust currency.

You shift from fighting fires to showing defensible, living trust. No “hope” required: every bias surfaced, every fix mapped and logged.

Take Ownership: Unrivalled AI Trust Is a Competitive Weapon. Own It with ISMS.online

There’s no “wait and see” for AI trust. Operational, regulatory, and market risk is a moving target, not a “maybe someday” problem. Transparency, evidence, and accountability must be active, automatic, and always on. With ISMS.online at your back, your team controls the risk, owns the process, and builds trust minute by minute, from boardroom to end user.

- Real-time logs and controls, never out of date: Surface, catalogue, and share at a moment’s notice.

- Bias defence and evidence on automatic, with no gaps and no second-guessing: Every change, trigger, and fix is logged and mapped.

- Adapts as frameworks shift: Stay ahead of new and changing requirements across AI, privacy, and industry rules.

- Enables instant trust with stakeholders, not just regulators: The board, buyers, and critical customers see evidence, not just claims.

The uneasy reality is that risk and scrutiny aren’t optional; they’re guaranteed. What distinguishes tomorrow’s leaders is delivering proof, not apology.

The organisations setting tomorrow’s trust standards aren’t waiting. Equip your team before the headlines hit. Let ISMS.online make trust your biggest asset: live, automated, and defensible.

Frequently Asked Questions

Who decides what counts as bias in AI, and why isn’t “just fix the code” good enough?

Bias in AI lands on your doorstep the moment outside forces demand proof that your models behave fairly, judged not just by your software tests but by how they shape outcomes for real people. It’s not a programmer’s side quest; the definition is now set by a matrix of regulators, standards writers, auditors, and, when things fall apart, lawyers and journalists. The ideal of “just patch the code” breaks down because bias reflects choices baked deep into data, objectives, or unchecked feedback loops, not bugs that crash apps. Regulators look for a live trail: who flagged a risk, what data was excluded, when a fix was applied, and whether the end result benefited or quietly hurt a protected group.

What allows bias to take hold before it makes headlines?

AI bias doesn’t enter with a loud alarm. It starts with overlooked detail:

- Outdated datasets reflecting previous hiring or lending policies;

- Model tuning that improves accuracy overall but harms edge cases;

- Automated feedback loops that amplify subtle prejudices over time.

Red flags often surface before outright failure:

- Sudden dips in subgroup performance well before accuracy nosedives;

- Support cases clustering around a certain language or demographic;

- Steady model drift flagged by third-party audits.

The shield isn’t more testing alone: it’s documenting what you test, why, and whose interests were reviewed at every turn.

What practical controls impress real regulators and go further than generic AI policies?

Today’s auditors and regulators are finished with slogans. They seek controls that make risk both visible and remediable:

- Data and Label Traceability: Maintain an auditable chain for every training sample: what changed, who decided, and why.

- Live Model Cards: For every system in production, maintain continuously updated, reviewer-attributed cards covering objectives, edge-case failings, and overlap with regulatory demands.

- Immutable Audit Trails: Apply cryptographic or role-restricted logs for every major event, including parameter shifts, retraining, and human overrides. Lost or alterable logs kill trust instantly.

- Bias Response Workflow: Capture, escalate, and map any bias event directly to owners and regulators; track response times, resolutions, and notification logs.

- Standards Map Cross-Linking: Each test or control should reference its hook in ISO 42001, NIST RMF, or the EU AI Act, giving every audit a single source of truth.
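Cross-linking controls to frameworks can be represented as plain data and checked automatically for gaps. The sketch below assumes a hypothetical `Control` record and a `coverage_gaps` helper; the reference strings stored against each framework are placeholders to be filled in by whoever owns the mapping, not real clause numbers.

```python
from dataclasses import dataclass, field

# Frameworks named in this article; extend as needed.
FRAMEWORKS = ("ISO/IEC 42001", "EU AI Act", "NIST AI RMF")


@dataclass
class Control:
    control_id: str
    description: str
    owner: str
    # framework name -> reference string (placeholder values, not real clauses)
    references: dict = field(default_factory=dict)
    # pointers to logs, reports, or tickets that evidence the control
    evidence: list = field(default_factory=list)


def coverage_gaps(controls):
    """List (control_id, problem) pairs for missing mappings or missing evidence."""
    gaps = []
    for c in controls:
        for fw in FRAMEWORKS:
            if fw not in c.references:
                gaps.append((c.control_id, f"no mapping to {fw}"))
        if not c.evidence:
            gaps.append((c.control_id, "no evidence attached"))
    return gaps
```

Running a check like this before an audit, rather than during one, turns “single source of truth” from a slogan into something that either passes or lists what is missing.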

ISMS.online anchors these processes into daily operations so your team isn’t scrambling after the fact. When controls are living, review-ready, and mapped, you shift from compliance firefighting to credible trust at every touchpoint.

Why do legacy “responsible AI” checklists quietly sabotage trust?

Flat checklists grow obsolete between reviews; real trust relies on proof that never stops updating. Evidence must be available in real time, mapped to active standards, and easily shared across regions or regulators. Reputation hinges on a living system, not a folder of old checkmarks.

How do ISO 42001, EU AI Act, and NIST RMF shield your organisation, and what is the one proof even seasoned players sweat?

True protection comes from stacking rigorous frameworks, each with unique strengths, so that no single weak link can undo your compliance:

- ISO/IEC 42001: Demands that you operationalise risk, document policy changes, assign responsibility, log all exceptions, and keep evidence versioned.

- EU AI Act: Raises the bar to legal necessity, with traceability, ongoing risk assessment, periodic audits, and enforceable human involvement, especially for high-impact or high-risk AI.

- NIST AI RMF: Fills in the granular “how-to” with methodical breakdowns of risk, explainability, subgroup metrics, and mitigation tactics.

The control everyone dreads? Perpetual, role-attributed traceability. You are expected, at any point, to hand over a versioned log of what was changed, who approved it, how the fix mapped to external requirements, and how affected parties learned of the change. “Ready for audit” isn’t a milestone; it’s your permanent state.

Where do mature teams routinely drop the ball?

Not integrating their controls. When code fixes live in one silo, policies in another, and compliance evidence in a third, gaps multiply. Systems like ISMS.online dissolve those boundaries so every control, policy, and proof lives in one reviewable map, saving not just reputation but hours.

When do most AI trust projects unravel: before evidence is needed, during scrutiny, or later?

The breakdown is rarely obvious until it’s too late, but failure patterns are clear:

- Choice fatigue: Trust operations relying on annual monitoring or revisiting stale checklists miss real drift. Boardrooms see promises, not progress.

- Untracked exceptions: Configuration tweaks, manual interventions, or stakeholder requests go unrecorded, leaving your logbook a patchwork.

- Scrambling for proof after the event: If you can’t export logs that narrate what, who, when, and why, scrutiny turns to suspicion.

A rare few organisations invert this: every anomaly, fix, and stakeholder notification is logged and mapped to its impact. Their audits build confidence, not anxiety.

Which metrics and artefacts silence boardroom doubt, and what’s the most overlooked lever?

Boards and external auditors expect proof that can be shown, not just explained:

- Outcome Fairness Rules: Monitor for “80% parity”: if the rate of favourable decisions for any group falls below four-fifths of the benchmark rate, trigger an alert and investigation.

- Disparate Impact Tables: Present group-specific result rates over time, flagged for anomaly and mapped to decision points.

- Versioned Remediation Logs: Capture not just that a fix happened, but who initiated it, what evidence drove it, and its mapped effect, all auditable and exportable.

- Instant Readable Dashboards: At any time, present executive-friendly fairness trends, incidents, and fix lifecycles, not just technical outputs.

- Model Fact Sheets: Ready-to-hand summaries for every deployed algorithm (risk profile, change history, audit findings) without waiting for data teams to translate.
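The four-fifths check in the first item above comes down to a ratio of selection rates. The sketch below assumes the benchmark is the group with the highest rate of favourable decisions, which is a common reading of the rule but an assumption here; the function names and the input shape (group mapped to a selected/total pair) are likewise hypothetical.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (favourable_count, total_count)."""
    return {g: sel / total for g, (sel, total) in outcomes.items()}


def four_fifths_check(outcomes, threshold=0.8):
    """Compare each group's selection rate with the highest group rate."""
    rates = selection_rates(outcomes)
    benchmark = max(rates.values())  # assumed benchmark: best-treated group
    report = {}
    for group, rate in rates.items():
        ratio = rate / benchmark if benchmark else 1.0
        report[group] = {
            "rate": rate,
            "ratio": ratio,
            "alert": ratio < threshold,  # below four-fifths: investigate
        }
    return report
```

For example, if one group is approved 50 times out of 100 and another 30 times out of 100, the second group's ratio is 0.30 / 0.50 = 0.60, which is below 0.8 and would raise an alert. An alert is a prompt for investigation, not a finding of discrimination in itself.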

Most skipped lever? Consistent, two-way mapping between technical metrics and regulatory controls, ensuring any question, no matter the audience, has a direct, verifiable answer.

How do organisations automate trust at scale, and how does ISMS.online transform this from struggle to strength?

Manual trust operations are relics; automated, living systems set the new bar. ISMS.online routes every signal, event, challenge, and fix through unified dashboards:

- Automated fairness and bias evaluations trigger alerts, mapped instantly to responsible staff.

- Every major artefact (factsheets, logs, model cards) is versioned and mapped across frameworks (ISO 42001, EU AI Act, NIST), keeping proof current even as rules evolve.

- Audit-readiness becomes a live, always-on capacity: proof is delivered the instant it’s requested, not after a document hunt.

- Leadership looks different: when your dashboards surface evidence mapped to every stakeholder’s lens, whether board, regulator, or partner, you lead not just on compliance but on operational confidence.

By automating the living map between model, action, and outcome, trust becomes both a shield and a competitive asset. With ISMS.online, you step ahead of standards and show, instantly, that trust isn’t talk. It’s built into your daily operating system.