Why Do So Many AI Improvement Initiatives Collapse Before They Deliver Any Value?

Ambitious AI initiatives rarely fail from lack of vision; they fizzle because the real work of continuous improvement is underestimated, fragmented, or locked in a tally-sheet mentality. Leadership invests, expecting transformation. Yet months in, pressure mounts: compliance lapses multiply, model blind spots widen, and security teams are cornered by questions that should have been anticipated. Boardrooms seek answers; all they see are more “improvement plans,” none of them systemic, few of them delivering evidence.

Unseen threats exploit the gaps in half-built improvement cycles-the real cost appears when you least expect it.

Continuous improvement in AI is not a policy; it’s a living, always-on discipline. Most efforts fail because improvement gets siloed, delegated to periodic checklists, or championed by well-intentioned heroes with no integrated process. The result? Risk signals go unnoticed, post-mortems become routine, and competitive advantage slips even as effort increases. The illusion of progress is seductive, but the lack of adaptive, system-embedded mechanisms leaves you perpetually one incident away from headlines or sanctions.

True resilience demands more than intent: it requires an environment where every anomaly, regulatory shift, or emerging threat automatically triggers a traceable correction-and where improvement is the default, not a crisis-triggered scramble.

Organisational inertia is dangerous in AI. Every time improvement is treated as a finish line, not a feedback loop, compound risks and compliance debts grow quietly until they erupt. The only way to outpace this is by building “improvement by design”-where security, trust, and compliance are not acts of faith, but continuous, provable processes.

The Price of Passive “Improvement”

When teams equate improvement with annual audits or reactive fixes, the cumulative result is risk migration, not reduction. Regulators accelerate, attackers adapt, and customers lose faith in vaporware assurances. By the time your audit logs or incident reports catch up, the narrative has already moved on-often without you.

In the AI era, untreated improvement isn’t neutral. It’s negative. The choice is ruthless: either embed improvement or accept that vulnerabilities, regulatory heat, and reputation breaches are only a matter of timing.

How Do You Spot the AI Risks That Everyone Else Misses-Before They Trigger a Crisis?

AI risks are nothing if not stealthy. Model drift, subtle algorithmic bias, surreptitious shadow deployments, a spike in regulatory activity-these are faint signals, rarely caught by static controls or manual reviews. By the time the alarm sounds, the cost is high and explanations wear thin. What distinguishes the resilient from the reactive is an improvement layer that continuously monitors, surfaces, and corrects before risk escalates.

Relying on annual controls or ad hoc check-ins is a losing bet. Your only win condition is an always-on feedback loop-where risk detection is automated, regulatory updates are mapped in real time, and anomalies translate directly to action.

Smarter Surveillance: Automated Threat and Compliance Monitoring

Getting ahead of AI risk means flipping the script:

- Automated, real-time monitoring: Go beyond dashboards. Use model drift detection, statistical flagging, and constant code integrity tests to surface issues the moment they emerge-not after someone complains.

- Live regulatory feeds: Monitor EU AI Act, ISO 42001, GDPR, NIST, sector rules, and immediate legislative updates-integrate them natively into your controls, not tucked away in compliance folders.

- Change intelligence: Audit for shadow AI deployments, codebase mutations, and environment creep that traditional GRC tools can’t spot. Feed these signals directly into your improvement cycle.

The critical insight: if your detection mechanisms aren’t as dynamic as the systems they’re protecting, you invite silent drift and invisible debt.
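
As a rough illustration of what statistical flagging can look like, here is a minimal Python sketch that scores drift on a single numeric feature with the Population Stability Index (PSI). PSI is one common choice, not something this article prescribes; the function names, the ten-bin layout, and the 0.2 alert threshold are all assumptions to tune against your own data.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a reference sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def proportions(sample: Sequence[float]) -> list:
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected, actual, threshold: float = 0.2) -> bool:
    """True when PSI exceeds the (assumed) alert threshold."""
    return population_stability_index(expected, actual) > threshold
```

In practice a check like this would run per feature on a schedule, with each alert routed into the improvement cycle rather than left on a dashboard.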

AI compliance failures are traced to unnoticed monthly changes in 65% of cases. (PDCA – Wikipedia)

Delay is defeat in AI compliance-automate risk discovery, or surrender readiness.

Feedback Loops: Are You Capturing Actionable Intelligence or Just Collecting Noise?

The myth: collecting more feedback equates to better improvement. The reality: most organisations drown in bug reports, user complaints, and audit tickets that never convert into change. Quantity is not value-what matters is an integrated, escalation-ready loop that turns every credible signal into visible progress.

Designing Feedback Systems That Drive Change

To build a genuinely effective improvement system:

- Unified input pipelines: Aggregate regulator notes, stakeholder concerns, and technical anomaly signals into a single, prioritised dashboard instead of letting inputs splinter across tools.

- Early warning detection: Set up drift sensors, recurring tiny-error trackers, and user query probes to escalate weak signals before they evolve into high-impact crises.

- Built-in escalation logic: Ensure ambiguous signals (subtle shifts, unreviewed feedback) are automatically flagged for expert triage, not left festering in backlogs.

If input vanishes into a black hole, contributors stop raising issues. Risk grows unnoticed. The only way to embed improvement is to route all feedback-manual or automated-into documented actions, visible to every contributor and every stakeholder.
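
The pipeline described above can be sketched in a few lines of Python. This is an illustrative model only: the `Signal` fields, the 1 to 5 severity scale, and the seven-day staleness window are assumptions, not features of any particular product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Signal:
    source: str        # e.g. "regulator", "user", "drift-monitor"
    summary: str
    severity: int      # assumed scale: 1 (low) to 5 (critical)
    received: datetime
    actions: list = field(default_factory=list)  # documented follow-ups
    escalated: bool = False


def prioritise(signals):
    """Single ordered view: highest severity first, oldest first within a severity."""
    return sorted(signals, key=lambda s: (-s.severity, s.received))


def escalate_stale(signals, now, max_age=timedelta(days=7)):
    """Flag signals that have no recorded action after max_age for expert triage."""
    flagged = []
    for s in signals:
        if not s.actions and not s.escalated and now - s.received > max_age:
            s.escalated = True
            flagged.append(s)
    return flagged
```

Calling `escalate_stale` on a schedule is what keeps unreviewed input from sitting in a backlog; the returned list is what an expert reviewer would see.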

Every missed signal is both a lost edge and a ticking time bomb.

“Sophisticated feedback loops embedded in tools like ISMS.online empower continuous refinement.” (womentech.net)

True AI maturity is evidenced by what you fix-silently, early, and over and over.

Is Your AI Improvement Engine Agile Enough to Survive Regulatory Change?

Compliance isn’t a one-time event. Laws, best practices, and industry standards now shift monthly, not annually. Failing to integrate regulatory feeds into your improvement system is the fastest way to lose face with auditors-and worse, the market.

Top-tier organisations embed regulatory mapping and live compliance tracking at the root of their AI improvement, not as a compliance afterthought. This means every obligation, every update, and every fix is logged and export-ready-and your audit trail never needs a “do-over.”

Elevating Compliance Above Minimum Viable

- Dynamic compliance mapping: See every regulatory duty-AI Act, GDPR, ISO 42001, sectoral overlays-in a single, live framework.

- Automated rule and guidance updates: As legal requirements change, your system prompts for review and action before anyone outside notices. No lag, no scramble.

- Exportable audit logs: When a board, regulator, or customer requests evidence, it’s a click away-itemised, time-stamped, traceable.
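
One way to picture dynamic compliance mapping is a table that links each framework to the internal controls reviewed against it, plus a check that fires when the framework text changes. The sketch below assumes hypothetical control IDs and placeholder version labels; it does not reflect real clause numbers or any vendor's data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Obligation:
    framework: str         # e.g. "ISO 42001"
    reviewed_version: str  # version of the text the control was last reviewed against
    control_id: str        # hypothetical internal control identifier


def controls_needing_review(obligations, latest_versions):
    """Return control IDs whose framework has changed since the last review.

    Frameworks missing from latest_versions are treated as unchanged.
    """
    return sorted(
        o.control_id
        for o in obligations
        if latest_versions.get(o.framework, o.reviewed_version) != o.reviewed_version
    )
```

Fed by a regulatory watchlist, the output becomes the prompt for review before anyone outside notices.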

Automated regulatory watchlists and audit log generation are vital for compliance at scale. (ITPro)

Continuous, system-integrated compliance builds a reputation for reliability-one regulation at a time.

Can You Spot and Correct AI Bias and Model Drift Long Before Trust Erodes?

Bias and drift are slow thorns in any AI system: they inflict the most pain when left undetected. Once a customer files a complaint or a regulator flags an outcome, the damage has already been done. The only way to manage bias and drift in substance is through deliberate, recurring, and transparent correction cycles built directly into your system.

Baking Bias Detection and Drift Mitigation Into Your Improvement Process

- Scheduled fairness audits: Define and document a cadence (monthly, quarterly, event-driven) for bias evaluation-no skipped cycles, no ambiguity.

- Automated data and model drift monitoring: Systematically scan for distributional shifts and silent error surges using statistical and AI-native techniques, not just manual spot checks.

- Action logs on remediation: Every fix, from training tweaks to parameter shifts, is logged, time-stamped, and exportable to prove what was fixed, why, how, and by whom.
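
To make a scheduled fairness audit concrete, here is a minimal Python sketch of one possible check: the demographic parity gap, meaning the spread in positive-prediction rates across groups. The article does not name a metric, so this choice is an assumption, as are the function names; a real audit would usually combine several metrics.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest minus smallest selection rate; 0.0 means equal rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())
```

Running this on every audit cycle and logging the result alongside the date gives the cadence a measurable trail.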

Continuous, real-time bias detection and mitigation pipelines help prevent silent drift and disparities. (kotwel.com)

If you can’t prove detection and mitigation, you can’t claim control. Closing the loop between visibility and action is the only currency that counts.

Are Your Improvement Mechanisms Repeatable, Transparent, and Built for High-Stakes Audits?

AI teams often stumble into heroics-midnight code hacks, rushed mitigations, one-off interventions with no documentation. The result? Successes that can’t be replicated, system dependencies no one can explain, and audit readiness that vanishes under scrutiny.

Lasting improvement emerges from versioned workflows, documented retraining, systematic reporting-and an audit trail immune to surprise. Boards want one thing: proof over promises.

Industrial-Grade Improvement: Version Control and Guidance Evidence

- Versioned system retraining: Every pull request, parameter update, and code merge is versioned and tied to specific incidents or feedback triggers.

- Continuous improvement cycles: Borrow from PDCA (Plan-Do-Check-Act): every incident, discovery, or suggestion spins into a repeatable verify-fix-review loop, not a one-time patch.

- Ready evidence for stakeholders: Logs, charts, and data change reports are exportable on demand-for execs, customers, and regulators.
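
The sketch below shows one way to tie each change to its trigger and keep the record exportable. Chaining each entry to the hash of the previous one is an added design choice (an assumption, not something the article specifies) that makes after-the-fact edits detectable. Class and field names are hypothetical; dedicated tools such as DVC handle data and model versioning far more thoroughly.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(obj) -> str:
    """Stable SHA-256 of any JSON-serialisable object (params, dataset manifest)."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


class ChangeLog:
    """Append-only log; each entry carries the hash of the previous entry."""

    def __init__(self):
        self.entries = []

    def record(self, trigger, change, owner, params, when=None):
        entry = {
            "trigger": trigger,  # incident or feedback reference
            "change": change,
            "owner": owner,
            "params_hash": fingerprint(params),
            "when": (when or datetime.now(timezone.utc)).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = fingerprint(entry)  # computed before the hash key is added
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check the chain is unbroken."""
        prev = ""
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if e["prev_hash"] != prev or e["hash"] != fingerprint(body):
                return False
            prev = e["hash"]
        return True

    def export(self) -> str:
        """JSON export for boards, customers, or regulators."""
        return json.dumps(self.entries, indent=2)
```

A reviewer can call `verify()` on the exported entries to confirm nothing was altered between the fix and the audit.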

You can shortcut many things in AI, but never your audit trail.

“Tools like DVC and Jenkins X drive controlled, audit-proof retraining and versioning from code to data.” (DVC – Wikipedia)

If your improvement steps can’t be replayed and your evidence isn’t exportable, your risk posture is only as strong as your last crisis.

How Do You Build Hard-Earned Trust With Boards, Customers, and Stakeholders?

In AI, trust evaporates the moment feedback disappears into silence. Mature organisations make trust visible-every stakeholder sees their signal surfaced, acted upon, and documented. Trust isn’t conferred by intention, but by repeated, visible, auditable proof that every concern closes a visible improvement loop.

The Building Blocks of Live Trust

- Feedback-action integration: Every stakeholder, from regulators to users, sees their concerns appear in prioritised improvement queues and sees evidence of action (not just a reply).

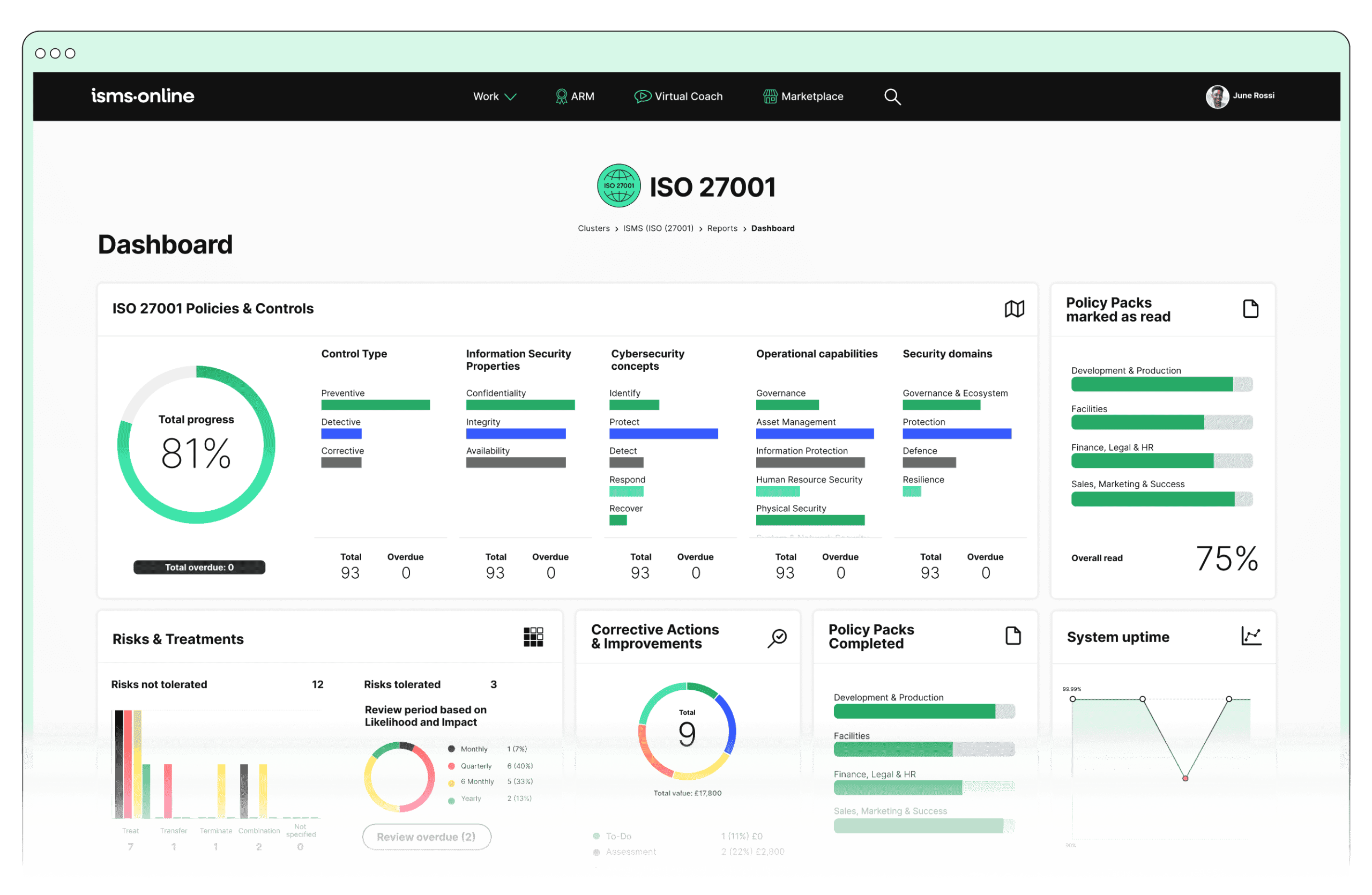

- Self-serve dashboards: Stakeholders access real-time views of system health, compliance status, and improvement cycles-no waiting, no red tape.

- Portable performance records: Risk overviews, bias indicators, improvement logs are one click away-useful for reputation, buyer confidence, and third-party audits.

True trust is gained when upskilled teams and stakeholders see feedback reflected-fast. (womentech.net)

Trust compounds where feedback is actioned, improvement is logged, and evidence is always ready-without hesitation or concealment.

The ISMS.online Advantage: Making Continuous AI Improvement Relentless and Routine

The old guard fights fires; the leaders shape their future. ISMS.online equips your teams with a living platform: always-on improvement, integrated compliance, and unified risk feedback that aligns with the realities of today’s threat and governance landscape.

With ISMS.online, you make continuous, adaptive improvement standard-whether that’s for the EU AI Act, GDPR, ISO 42001, NIST, sector overlays, or tomorrow’s regulations. You deliver:

- Real-time risk and compliance mapping: your board and your teams have a living overview of risk, compliance, and improvement cycles.

- Instantaneous, exportable audit evidence: no scrambling for logs, always audit-ready, always transparent.

- Feedback-action pipelines: every stakeholder signal, from regulator to end user, produces direct, traceable improvement.

- Bias and drift controls by default: your models, data, and processes pass not just today’s fairness test, but tomorrow’s as well.

ISMS.online is the gold standard for continuous improvement: automating controls, streaming regulatory updates, and integrating feedback for every AI governance need. (isms.online)

Abandon security theatre. Sidestep the crisis hamster wheel. Step into a feedback-powered routine that earns not just compliance-but respect, trust, and market advantage. The future belongs to those who systematise improvement; the boardroom and marketplace will remember who acted before the risks appeared.

Lead the standard. Show not just that you improve, but how, how often, and why it matters. Let ISMS.online show you what continuous really means.

Frequently Asked Questions

How can your organisation embed genuine, always-on improvement in AI systems?

Genuine, always-on improvement in AI isn’t a matter of promises or buzzwords-it’s about systems that surface trouble fast, act fast, and keep receipts. The organisations that thrive establish feedback engines, automated checks, and auditable decision logs as standard operating hardware-not as loose intentions. Every action is evidence, every problem a trigger for smarter defence. That means you build muscle memory for adapting to threats, model drift, and regulatory curveballs in real time.

Silencing error is impossible; catching it so quickly it can’t damage you is the operational art. Automated monitoring surfaces every anomaly-model performance wobbles, data input shifts, small compliance breaches-so your team isn’t left discovering issues the hard way. Feedback pulses in continuously: user complaints, bias flags, audit signals, all pouring into combined channels that are impossible to ignore or lose. Each incident leads to a closed loop-documented, timestamped, with actions and results logged for boardroom or regulator eyes.

Real improvement means error never hides: every trigger becomes evidence and every fix is part of a living audit story.

Where most teams stall is the loop from signal to fix: signals die in neglected inboxes, periodic reviews miss emerging risks, evidence is scattered or missing. Leading organisations treat every feedback action (patch, tweak, retrain) as a step in their defence log. When ISMS.online is woven into this rhythm, proof of improvement becomes automatic, not an afterthought, and your organisation’s reflexes matter as much as your original plans.

What critical routines convert improvement talk into everyday ops?

- Embedding real-time drift detection and alerting for every deployed model.

- Turning operational changes and fixes into timestamped, board-ready audit trails.

- Requiring every analyst, engineer, or stakeholder action to generate a visible, traceable entry-so nothing drops off the radar.

- Automatically routing unresolved tickets to higher scrutiny, closing the feedback loop with proof.

Continuous improvement stops being a leadership slogan and becomes your operational identity, measurable by how few surprises you face when the next audit or attack hits.

What daily feedback loops distinguish a defensible AI improvement cycle?

A defensible AI improvement cycle hums along with a tempo set by live systems, not after-the-fact post-mortems. Sensors sit inside workflows-catching everything from model drift and security quirks to user bias complaints and odd operational patterns. What matters is the routing: every trigger hits triage, with automated classification of urgency and risk. The right eyes or systems respond next, with every move documented and reviewable in real time.

The backbone is a cycle you can spell out, prove, and repeat: Plan (spot issues, set fixes), Do (deploy updates or retrain), Check (verify the outcome, log proof), Act (adjust protocols if lessons echo elsewhere). The key difference between leaders and laggards? Granular evidence trails, instant traceability, and dashboards that surface the “why,” “when,” and “who” for each action.
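
The Plan-Do-Check-Act cycle can be modelled as a small loop that records evidence at every step. The Python sketch below is illustrative only: the `PdcaCycle` class and its callback signatures are assumptions, and real steps would call your deployment and monitoring tooling.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PdcaCycle:
    issue: str
    evidence: list = field(default_factory=list)  # (step, detail) pairs, in order

    def run(
        self,
        plan: Callable[[str], str],    # issue -> proposed fix
        do: Callable[[str], None],     # apply the fix
        check: Callable[[], bool],     # did the fix work?
        act: Callable[[bool], str],    # follow-up decision based on the check
    ) -> bool:
        fix = plan(self.issue)
        self.evidence.append(("plan", fix))
        do(fix)
        self.evidence.append(("do", fix))
        ok = check()
        self.evidence.append(("check", ok))
        self.evidence.append(("act", act(ok)))
        return ok
```

Because every step appends to `evidence`, the “why,” “when,” and “who” can be reconstructed from the record rather than from memory (timestamps and owners would be added in a fuller version).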

What counts is not zero errors, but zero-escape-when every anomaly is caught, routed, fixed, and evidenced on the record.

For compliance-heavy teams, especially under rules like the EU AI Act or ISO 42001, these cycles supply the artefacts you need: precise records of every drift detected, every mitigation and update, every signature on every change. When the regulator or board asks, “Prove your improvement,” your answer should be as short as a filtered dashboard export.

Why do most organisations trip over feedback cycles?

- They treat post-market monitoring as an event, not a living process.

- They bury closed-loop evidence in ad hoc docs or emails-nothing’s ready for outside review.

- They miss change lineage: “What was fixed, why, and what else did it touch?”

With a platform like ISMS.online in place, every feedback-to-action loop is defensible by default-no more last-minute panic when the audit window opens.

What tools and platforms automate AI improvement and secure regulatory credibility?

The software stack matters as much as the process: improvement needs to be engineered into the landscape of your tools, making evidence, repeatability, and auditability the default. Modern organisations blend open-source flexibility (highly customisable, but more hands-on) with specialised, compliance-ready platforms that guarantee traceable action.

Table: Which Stack Fits Which Need?

There’s no “best” tool-only best fit for your maturity and sector. Here’s the landscape:

| Automation Area | Platform/Stack | Payoff |
|---|---|---|
| End-to-end model tracking | MLflow, Kubeflow (OSS) | Every model and dataset change, versioned |
| Drift/bias monitoring | TFX, SageMaker Model Monitor | Alerts push retraining and review |
| Compliance and audit | ISMS.online | Each change mapped to a real control |
| Live deployment & rollback | Jenkins, Seldon, GitHub Actions | CI/CD plus instant rollback on error |
| Documentation and reporting | ISMS.online | Exportable logs match board/regulator need |

Use open tools for scale and tweakability. Rely on platforms like ISMS.online for direct compliance mapping and zero-stress audit output. Smart teams knit them together-technical change triggers, compliance surfaces action, and every fix, update, or rollback is instantly evidence for both improvement and operational defence.

Audit is no longer a once-a-year event-it’s the byproduct of a well-instrumented improvement engine that never sleeps.

Why is always-on improvement essential for AI compliance and risk leadership?

Risk moves faster than paperwork; compliance standards mutate quarterly. For risk and compliance officers, “continuous improvement” is the difference between managing threats on your schedule and being blindsided by them in public. It means model drift and bias don’t snowball into silent crises. Whether it’s the EU AI Act, ISO 42001, or fast-changing US state laws, proof is expected on demand.

Miss a regulatory update or a shift in input data, and minor errors quietly metastasize-leading to fines, revoked licences, lost client accounts, and public debacles. Stakeholders no longer tolerate hope-boards, investors, and customers demand to see the records: “What did you know, what did you do, and how do you prove it worked?” Relying on stale “change logs” or once-yearly audit logs is not only risky; it leaves your organisation exposed when new threats or rules hit.

When leadership can show every action-for every risk, every control, every change-they not only satisfy oversight but turn operational proof into a competitive strength. That’s the goal: turn compliance from a scramble into a source of trust that markets, partners, and even regulators recognise.

What transforms feedback and complaint handling into real, cyclical improvement for AI systems?

The difference between a stagnant and a maturing AI system is how it turns whispers into action. It’s easy to install a “suggestion box” or bury complaints in shared inboxes. Harder, and more crucial, is to engineer a feedback system that catches every type of signal, routes it for review, triggers change by design, and closes the loop with audit-proof evidence.

A strong feedback system:

- Collates user complaints, bias flags, operational quirks, and even regulator notes into one surface-a single pane of glass visible to every accountable party.

- Applies triage rules to classify urgency, assign stakeholders, and propose initial fixes automatically.

- Logs every step: issue detection, action taken, effectiveness review, timestamp, and responsible owner.

- Supports full closure-every “fix” is actually evidenced, cross-linked to risk controls, and visible for review, not parked or orphaned.

- Periodically reviews and analyses closed loops, identifying patterns for proactive improvement cycles.
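
Triage rules of the kind listed above can start as something very simple. The Python sketch below uses keyword matching purely for illustration; the rule table, urgency labels, and owner names are hypothetical, and a production system would likely use richer classification.

```python
from dataclasses import dataclass

# Hypothetical rules: (keyword, urgency, owner). First match wins, so order matters.
RULES = [
    ("regulator", "critical", "compliance-lead"),
    ("bias", "high", "ml-fairness-team"),
    ("drift", "high", "ml-ops"),
]
DEFAULT = ("normal", "support-desk")


@dataclass
class Ticket:
    text: str
    urgency: str = ""
    owner: str = ""


def triage(ticket: Ticket) -> Ticket:
    """Assign urgency and owner from the first matching rule, else the default."""
    lowered = ticket.text.lower()
    for keyword, urgency, owner in RULES:
        if keyword in lowered:
            ticket.urgency, ticket.owner = urgency, owner
            return ticket
    ticket.urgency, ticket.owner = DEFAULT
    return ticket
```

Keeping the rules in a plain table makes them reviewable in their own right, so the triage logic itself can be audited and improved.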

Improvement only matters if every complaint, bug, or anomaly leads to a record-documented, routed, and closed-with nothing slipping between the circuits.

Platforms like ISMS.online and integration with ticketing (Jira, ServiceNow) let organisations map every feedback loop directly to compliance and operational controls. The result: chaos gives way to controlled improvement-issues don’t just vanish; they leave a breadcrumb trail proving your system matured because of them.

What operational practices set apart organisations leading in AI system improvement?

True leaders aren’t defined by checklists; they have processes that publish their discipline out loud and make every fix count for the next review. Every intervention is logged, every audit is predictable, every control is in plain view, and every improvement is ready to become next quarter’s best practice.

Key routines:

- Monthly (or issue-triggered) audits that hit everything from model fairness and drift to live compliance mapping-no relying on last year’s paperwork.

- Retraining and deployment cycles that don’t simply “happen” but are versioned, reproducible, and saved for audit or rollback any time.

- Bias and drift scans run on automatic, with escalation for human review where needed-no anomalies under the carpet.

- Transparent dashboards that show internal and external stakeholders where your improvement efforts stand, issues closed, actions pending.

- Feedback and complaint loops cross-wired to risk and compliance controls-so every fix, every learning becomes institutional knowledge, not tribal memory.

| Best Practice | Returns | ISMS.online Role |
|---|---|---|
| Monthly, targeted audits | Smoother inspections, faster fixes | Scheduling & traceability |
| Automated bias/drift scans | Early-warning, lower legal risk | Trigger integration |
| Stakeholder dashboards | Increased trust, easier oversight | Live data surfaces |
| Closure-reviewed feedback | Real improvement, not PR | Looped evidence trail |

To lead, track, and continuously harden AI systems, you need more than intentions. You need infrastructure-automated, public, repeatable-anchored by platforms like ISMS.online that don’t just catch problems, but generate institutional proof every step of the way.

Close the loop: Make improvement your competitive asset, your operational bedrock, and your team’s leadership story. Start powering your organisation’s AI with ISMS.online.