How Does the EU AI Act Actually Transform Your ISO 42001 Compliance Reality?

The EU AI Act drives a wedge between “paper compliance” and true operational readiness in the realm of high-risk AI. For organisations used to managing risk through documented policies and certifications like ISO 42001, this change isn’t cosmetic: it’s a mandate to deliver evidence under real-time scrutiny. The Act compels organisations to prove their controls work, not just that they exist. For any compliance officer, CISO, or CEO, this means your role isn’t simply to maintain a tidy management system; it’s to ensure your high-risk AI survives its next audit, news story, or incident without unravelling the organisation’s trust, reputation, or revenue.

Your certificate’s worth evaporates the moment your controls falter under real-world stress.

ISO 42001 provides a management framework. The AI Act is a legal regime with teeth. If your AI application can affect someone’s job, health, money, or basic rights, the new bar is operational defensibility. That means you must produce live, granular evidence that your system works as intended, can withstand attacks or biases, and puts vital controls in action every day. This shift isn’t theoretical: the Act entered into force in August 2024, and its obligations and penalties are phasing in.

Most organisations have grown comfortable inside a perimeter of paperwork: risk registers, improvement cycles, and well-archived policies. The EU AI Act throws out comfort zones and exposes what lies beneath: unless you can operate your controls “in the wild,” your certificate is just wallpaper. The real answer to this new compliance reality? Treat every system, process, and person as if tomorrow they’re on the front page, because they might be.

What Makes an AI System “High Risk” Under the EU AI Act, and Why Should It Reshape Your Strategy?

“High risk” means more than a compliance sticker. It’s a trigger that places your system, your evidence, and your team under active legal surveillance. The AI Act treats as “high risk” AI that can seriously impact a person’s health, safety, finances, or their access to essential rights and services; the classification rules sit in Article 6, with the listed use cases in Annex III. That means the loan approval tool you use, the HR recruitment AI, the automatic patient triage system, or even the smart gate in your building could be swept up, sometimes overnight, into a web of legal obligations you can’t ignore or postpone.

Once classified as high risk, your AI is under continuous legal surveillance: intent isn’t enough; only demonstrable control will suffice.

The risk is not abstract. An algorithm that shapes who gets a job, credit, housing, or medical care lands you in a regime where authorities expect live, real-time proof: who interacted, what happened, and what you did about it. No one cares how good your PowerPoint is; what matters is whether your system proves fairness, explainability, and adaptability with zero lag.

Typical High-Risk AI Use Cases

- Patient diagnostics and triage

- Algorithmic recruitment and promotions

- Automated loan and insurance decisions

- Biometric identification (e.g., facial recognition, fingerprint)

- Law enforcement, migration, or border control analytics

- Tools influencing access to core utilities or education

If your AI application touches the levers for opportunity, security, or equity, the only safe assumption is that it is, or soon will be, regulated as high risk. No “awareness session” or signature from a manager will carry much weight if your live records, logs, and incident controls are missing when demanded.

How High-Risk Designation Triggers a New Compliance Mindset

Once your AI crosses the “high risk” line, the rules multiply, and so does the weight of evidence needed:

- Continuous risk mapping: High-risk status is not a once-a-year box-tick. You must update inventories and controls every time you shift your business or tweak an automation.

- Legal traceability: Every relevant operation must be logged in near real-time. There can be no delay from data to evidence; regulators expect a straight line.

- Stakeholder transparency: Anyone affected can demand direct evidence of risk management, fairness, or reasoning. The era of “we’re not ready” is over.

Leaders betting on static documentation or infrequent reviews expose themselves, and their organisations, to escalating liabilities. The true risk is not just regulatory penalty, but the collapse of trust and control if an incident, audit, or media surge hits and the evidence isn’t ready.


Why Doesn’t ISO 42001 Guarantee Compliance for High-Risk AI Under the Act?

ISO/IEC 42001:2023 is invaluable for introducing discipline to AI management: it corrals risks, clarifies policies, and orients your business around improvement. But ISO, by design, is a voluntary management system. The EU AI Act brings compulsion, not consensus, especially when your system is classed as high risk.

| Compliance Myth | Regulatory Reality |
|---|---|
| “ISO 42001 fully shields our high-risk AI.” | **It organises, but doesn’t fulfil, every Act-mandated control.** |
| “Policy documents are enough for compliance.” | **Only operational, real-time proof is legally valid.** |
| “Annexes check all technical boxes.” | **Critical areas (bias monitoring, human oversight, transparency) exceed ISO’s written scope.** |

Here’s the core gap: ISO 42001 tells you to manage AI risk, but the Act tells you how you must prove safety, fairness, and accountability, and when you must do so (now, on demand). It’s not enough to have good intentions or periodic audits. When authorities arrive, only live, audit-trail evidence in response to their demand meets the standard.

Having a system in place isn’t compliance; showing it works, and adapts in real time, is the standard now. (EU AI Act Regulatory Commentary, 2024)

The lesson is clear: Your ISO-based management system is a platform, not a shield. Only an operational, evidence-driven approach makes your compliance real and audit-proof.

Where Do High-Risk Controls Under the EU AI Act Surpass What ISO 42001 Requires?

The AI Act remaps your controls landscape. Where ISO 42001 says “document your risk,” the Act says “prove, right now, that you mitigated bias, logged every override, and issued user notices as required.” The result: a blueprint for continuous, forensic-level evidence, not just a paper trail.

Act-mandated areas where ISO 42001 falls short:

- Immutable, tamper-evident logs: Not just process documentation, but access-controlled, cryptographically verifiable records of every relevant AI transaction.

- Live human oversight: Concrete records (time, reason, and responsible person) every time a human steps in or overrides the system.

- Bias and accuracy audits: Repeatable, ongoing report chains, with no more “annual validation.” Auditors want to know what’s happening now.

- On-demand user transparency: People can request explanations, see which data influenced outcomes, and review model logic.

- Incident and risk reporting at real speed: No buffer time; you’re expected to deliver evidence as events unfold, not as a post-mortem review.

- Complete lifecycle control visibility: The chain of custody must be clear from data sourcing to final decision, with each handoff recorded.
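One common way to make a log tamper-evident is to chain entries by hash, so that altering any earlier record invalidates every hash that follows it. The sketch below illustrates that idea only; the class name `HashChainedLog` and the event fields are hypothetical, and neither the Act nor ISO 42001 prescribes this particular mechanism. A production system would also need access control, durable storage, and trusted timestamps.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class HashChainedLog:
    """Append-only log in which each entry commits to the previous entry's hash."""

    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        # Serialise deterministically so the same event always hashes the same way.
        payload = json.dumps(event, sort_keys=True)
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "event": event,
            "prev_hash": prev_hash,
            "hash": self._digest(prev_hash, event),
        }
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain and report whether every entry is intact."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            expected = self._digest(prev_hash, entry["event"])
            if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical decision events, for illustration only.
log = HashChainedLog()
log.append({"decision_id": "d-1", "outcome": "approved"})
log.append({"decision_id": "d-2", "outcome": "declined"})
intact_before = log.verify()

log.entries[0]["event"]["outcome"] = "declined"  # simulate tampering
intact_after = log.verify()
```

Here `intact_before` is `True` and `intact_after` is `False`: the edit to the first record is detected because its stored hash no longer matches the recomputed one.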

Policy on paper is cold comfort. If you can’t evidence real-time operation, you’re exposed: legally, reputationally, and financially. (isms.online expert panel)

These new controls turn “high risk” from theoretical classification to an operational marathon. Your evidence must move as fast as your algorithms-because the regulator, journalist, or customer won’t wait.


How Do Leading Teams Operationalize Controls Beyond ISO 42001 and Raise the Bar?

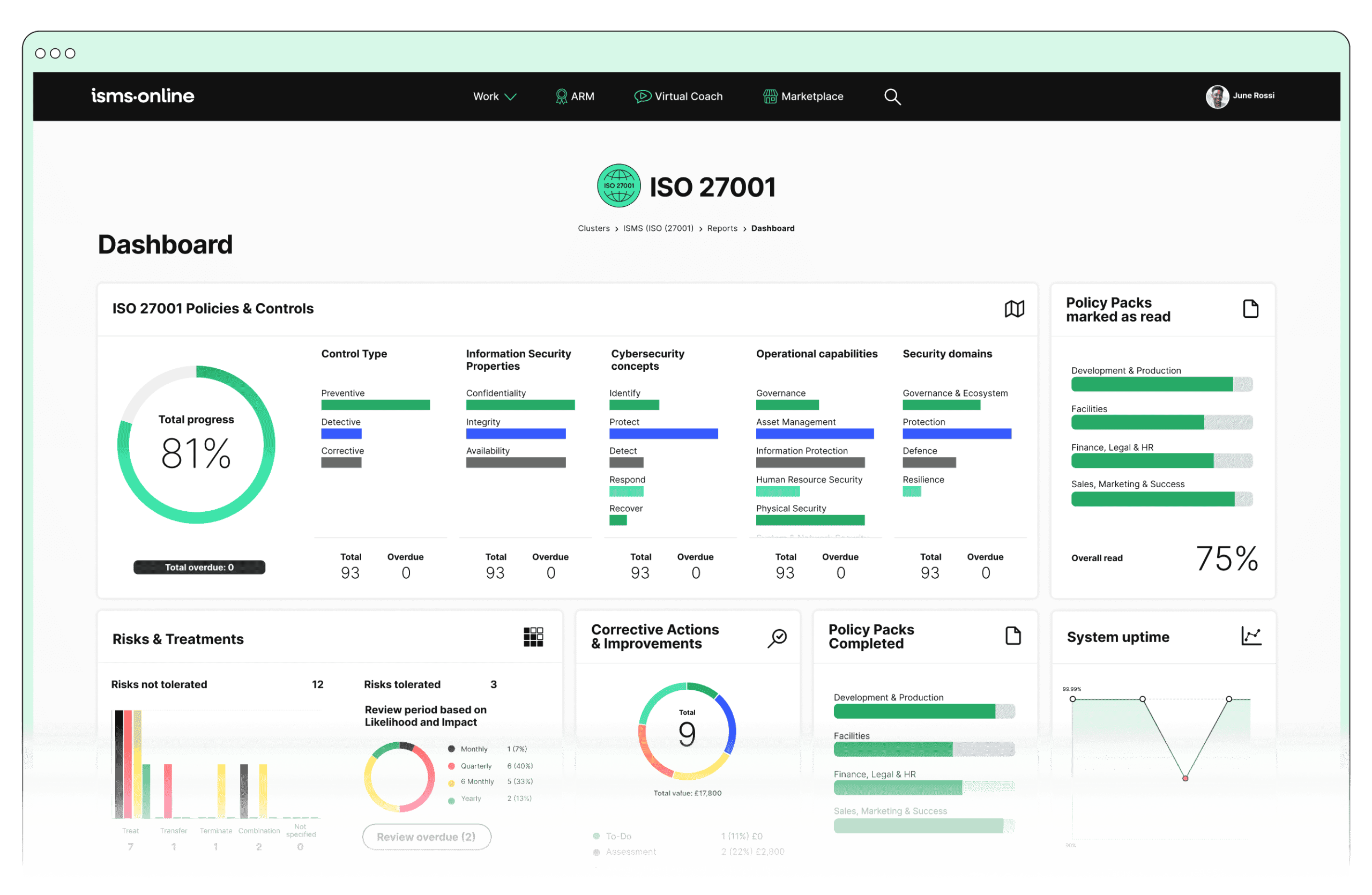

Top-tier organisations understand compliance isn’t waiting in an Excel sheet; it happens in their cloud platforms, risk dashboards, and incident workflows. The winners are those who “operationalize” compliance: making it demonstrable, live, and impossible to fake when challenged.

How are these leaders raising the bar?

- Automated risk inventories: Every change to a system updates risk assessments in seconds, not months.

- Tamper-proof activity logs: Not just file-keeping, but engineering-level logging that ransomware, malicious insiders, or even well-intentioned staff cannot alter.

- Role-based accountability: Clear mapping of who did what, when, and why, for every piece of the AI lifecycle.

- Continuous bias and fairness checks: Scripts and processes run before and after deployment, feeding fresh data to risk profiles.

- Self-serve transparency: Allowing users, executives, and auditors to pull proof whenever they need it.

- Dashboards tuned for “now”: Compliance isn’t annual; it’s live. Incidents, bias shifts, or new legal clauses update controls automatically.

- Cross-walk to the Act: Building a living table that maps each new Act mandate onto your operational toolkit, identifying and closing gaps at pace.
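As an illustration of the continuous bias and fairness checks listed above, the following sketch compares approval rates across groups and flags a gap above a chosen threshold. It is a minimal example under stated assumptions: the function names, the group labels, and the 0.2 threshold are all hypothetical, and the Act does not prescribe this metric or any specific numeric limit. Real monitoring would use metrics and thresholds chosen for the use case.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


def check_fairness(decisions, threshold=0.2):
    """Summarise the gap against an (assumed) internal threshold."""
    gap = parity_gap(decisions)
    return {"gap": gap, "threshold": threshold, "within_threshold": gap <= threshold}


# Hypothetical batch of decisions: group "a" approved 2 of 4, group "b" 1 of 4.
batch = [
    ("a", True), ("a", True), ("a", False), ("a", False),
    ("b", True), ("b", False), ("b", False), ("b", False),
]
report = check_fairness(batch)
```

Running a check like this on each batch, before and after deployment, produces a dated record that can feed the risk profile rather than a once-a-year validation.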

No matter the size of your business, the formula is the same: compliance as a living function, not an annual checkbox. Every regulatory, technology, or business change triggers a compliance review, because anything else puts you one incident away from exposure.

What’s the Playbook for Evolving From ISO 42001 to Legally Defensible, High-Risk AI Controls?

Surviving and thriving in this landscape means fusing your ISO groundwork with new, real-time, operational controls demanded by the Act.

1. Treat ISO 42001 as Your “Control Core”

Begin with ISO 42001 to organise your policies, assign roles, schedule risk assessments, and establish a quality baseline. But that’s your first step, not your finish line.

2. Layer in Act-Specific Tools and Evidence

Upgrade your toolkit with controls the Act makes mandatory:

- Audit-grade, automated logging at every “decision event.”

- Data lineage systems that trace source data straight to output, linking every risk assessment, bias review, or user option.

- User interface upgrades that allow users to see, challenge, or opt out of AI-driven outcomes.

- Dynamic technical files: Instant, always-current documentation pulled by event, use case, or incident instead of delayed reports.

- Human-in-the-loop records: Flag and record any instance of manual override, real-time monitoring, or exception handling.
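A human-in-the-loop record can be as simple as an immutable structure that refuses to exist without a named reviewer and a reason. The sketch below assumes hypothetical field names and a hypothetical `record_override` helper; it shows the shape of such a record, not a format required by the Act or by ISO 42001.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class OverrideRecord:
    """Immutable record of one human intervention on an AI decision."""

    decision_id: str
    reviewer: str
    reason: str
    original_outcome: str
    final_outcome: str
    timestamp: str  # ISO 8601, UTC


def record_override(
    decision_id: str,
    reviewer: str,
    reason: str,
    original_outcome: str,
    final_outcome: str,
    now: Optional[datetime] = None,
) -> OverrideRecord:
    """Build an override record, rejecting anonymous or unexplained overrides."""
    if not reviewer.strip() or not reason.strip():
        raise ValueError("an override needs a named reviewer and a reason")
    when = now if now is not None else datetime.now(timezone.utc)
    return OverrideRecord(
        decision_id=decision_id,
        reviewer=reviewer,
        reason=reason,
        original_outcome=original_outcome,
        final_outcome=final_outcome,
        timestamp=when.isoformat(),
    )


# Hypothetical example values.
rec = record_override(
    decision_id="loan-1042",
    reviewer="j.doe",
    reason="income evidence supplied after automated decline",
    original_outcome="declined",
    final_outcome="approved",
    now=datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc),
)
as_dict = asdict(rec)  # ready to append to an audit log
```

Making the record frozen and validating it at creation means an override without a time, reason, and responsible person never reaches the log in the first place.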

3. Build Live, Automated Compliance Dashboards

Turn governance from “policy-on-a-shelf” to operational muscle: dashboards and workflows that flash alerts, trigger evidence chains, and reassign controls with the speed your board expects.

4. Schedule Quarterly (or On-Demand) Legal Reviews

Make “gap analysis” a rolling window, not an annual scramble. As the Act, your business, or AI tech evolves, so does your compliance map.

5. Drill Audit Readiness with All-Hands Access

Everyone in scope, from data scientists to legal to support, should be able to evidence action, interventions, or readiness for review at a moment’s notice.

Our clients close audit and reputation gaps before anyone else spots them, turning risk into resilience with ISMS.online’s live operational controls. (isms.online)


How Can You Deliver Real-Time Proof, Not Just Paper Claims, for High-Risk AI?

When the pressure’s on, regulators and stakeholders don’t want to see “intent.” They demand live, operational proof. You must be able to retrieve logs, explain interventions, and show bias remediation instantly, with no excuses.

What does this look like at the coalface?

Automated Proof Engines

- Compliance mapping: Your AI control system should alert you the instant a legal update or use-case shift creates a compliance gap.

- Operational dashboards: Streaming, live views of decisions made, interventions executed, and biases detected or corrected.

- Unified evidence chains: Combining logs, notifications, and incident records so you can satisfy any query, anywhere in the system.

- Role-adaptive workflows: Automatically funnel new controls and alerts to the right person, speeding up action and proof delivery.

The penalty for delay isn’t just a fine. It’s brand erosion, board anger, and the personal risk of being caught flat-footed.

When the regulator or public questions your AI, “let me check with IT” or “we’re still investigating” is obsolete. The only real defence is a system so live, so well-evidenced, that its operational health sells itself.

Table: Where ISO 42001 Meets or Misses the Mark Under the EU AI Act

Here’s a quick map of how ISO 42001 aligns and, crucially, where operational upgrades are now non-negotiable to meet the Act’s demands.

| EU AI Act High-Risk Requirement | ISO 42001 Status | Additional Upgrade Needed |
|---|---|---|
| Immutable, tamper-evident logs | Process documented | Automated, audit-grade logging with access controls |
| Data provenance & bias monitoring | Lifecycle policy | Continuous testing/recording with instant recall |
| Evidence of human oversight | Policy assigned | Logged, timestamped human interventions/overrides |
| Real-time risk/incident reporting | Planned procedures | Proactive, real-time notification and investigation |
| Transparent user communication | Policy declared | Active user portals and dynamic file disclosure |
| Dynamic technical documentation | Manual reports | Real-time, role-aware, user-facing technical files |

ISO 42001 puts your house in order. The AI Act inspects your walls and hands you a list to fix, not just annotate.

Why Legal-Grade, Real-Time Compliance Is the New Minimum for High-Risk AI

Relying on static paperwork, periodic audits, or “intentions” is an open invitation to business disruption and stakeholder doubt. The Act isn’t a theoretical exercise; it’s a binding standard that shifts the question from “Did you mean well?” to “Can you prove, live, that your high-risk system is safe and fair?”

To compete, defend revenue, and protect your own reputation, you must:

- Continuously map and re-map your exposure across teams, partners, and AI models.

- Produce live evidence on demand: no lag, no complex explanations, just clicks to demonstrate real stewardship.

- Earn trust with displayable integrity: make your AI risk controls so transparent and defensible that regulators and customers alike choose you over “just compliant” competitors.

Compliance is now a moving target: yesterday’s certification is tomorrow’s evidence gap.

True compliance now means a culture where operational evidence is the default, not the afterthought. Only those who adapt live, technically and psychologically, own the future of high-risk AI.

Secure Real-Time High-Risk AI Readiness with ISMS.online Today

With every new regulation, breach, or public shock, the margin for error narrows. ISMS.online closes the distance between “good enough” and leadership-grade compliance. Our platform upgrades your ISO 42001 foundation with the operational muscle, dashboards, and rapid-response workflows that the EU AI Act makes essential. Don’t just aspire to compliance; engineer it for audit, for customers, for your board.

- Unified management: Controls for both ISO and EU AI Act, tested, upgraded, and mapped continuously, not annually.

- Evidence on demand: Proof at your fingertips, so when the regulator or board comes knocking, you’re ready.

- Transparent readiness: Dashboards and reports designed to serve a regulator, not just a routine auditor.

- Resilience by design: Tools and playbooks adapt as laws and AI models evolve, so your compliance always stays ahead of the curve.

You don’t win by hoping your controls hold; you win by knowing they’ve been tested before your rivals, before the press, before an incident. With ISMS.online, real-time, legal-grade compliance is not tomorrow’s aspiration; it’s your shield today.

Frequently Asked Questions

What triggers the “high-risk” designation for an AI system under the EU AI Act, and how does ISO 42001 equip your defence?

An AI system enters the “high-risk” category the moment it can affect someone’s health, legal status, access to essential services, or fundamental rights. The EU AI Act draws a legal red line: if your tech diagnoses cancer, manages recruitment, controls electricity grids, or leverages face recognition in secure locations, regulators consider it high-risk by default. The expectations then shift from hopeful governance to operational discipline: not just written risk policies, but living evidence of what your system has done and what it’s doing right now.

Every algorithm that reshapes someone’s opportunity or safety instantly changes the compliance game: the law expects operational proof, not gentle assurances.

ISO 42001 responds with more than documentation. It sets a rhythm: assigning compliance roles, mapping out repeating risk reviews, keeping technical records audited and organised. But remember, crossing the high-risk threshold means you must show your system’s defences are always active, with intervention logs and incident records ready for forensic review the second a regulator looks. ISMS.online bakes these controls into daily reality, allowing your team to pull up evidence at a moment’s notice, with no frantic search required.

Typical high-risk AI categories

- Clinical diagnostics and triage automation

- Recruitment, promotion, or disciplinary algorithm decisioning

- Credit risk assessment tools in banking or insurance

- AI-powered utilities, transportation, or critical process controls

- Biometric identification in sensitive or public areas

If you’re operating in these domains, regulators expect not only strong policies, but a living audit trail for every key event, override, and technical update.

How does ISO 42001 shift compliance from static policies to operational defence for high-risk AI?

ISO 42001 begins by structuring your governance: clarifying exactly who runs compliance controls, how evidence gets documented, and which cycles drive continuous risk review. This brings order to complexity, ensuring every system change or data update is handled under a repeatable, auditable protocol. Yet, while this groundwork is essential, it’s not enough for high-risk scenarios under the EU AI Act. The law expects continuous, not occasional, evidence: live logs, up-to-date technical files, and immediate incident documentation.

A certified ISO 42001 framework lets you track responsibilities and force regular check-ins, reducing the risk of old code or datasets slipping through. But success means going further: automating event capture, linking every dataset change to the compliance record, and giving third parties access to operational evidence without delay. ISMS.online is engineered for exactly this fusion: real-time event capture and compliance reporting built around the rhythm of risk.

ISO 42001 strengths at work

- Delivers governance that clarifies “who handles what”

- Forces proactive risk assessment as models, tech, or laws change

- Demands data and model quality controls, removing guesswork

- Drives continuous improvement, catching problems faster than traditional annual cycles

This structure alone doesn’t close the gap unless every event flows into an operational, searchable history-ready before audit calls or external probes.

Where does the EU AI Act set the bar higher than ISO 42001, and where do the frameworks reinforce each other?

Both ISO 42001 and the EU AI Act require you to embed compliance in daily operations, not just when certification is due. They agree on the need for clear accountability, systematic risk analysis, and ongoing model and data review. But the Act demands more immediacy and depth. You’re expected to present “living” technical files, tamper-evident event logs, and granular records of every human override, usually in real time or close to it. Annual review cycles and static policies are now considered the floor, not the ceiling.

| Key Requirement | ISO 42001 Coverage | EU AI Act Additional Demand |
|---|---|---|
| Live, immutable logging | Mandated, but often periodic | Continuous, instantly accessible |
| Dynamic technical records | Audit-focused, annual | Always-current, on-demand |
| Incident escalation and reporting | Policy-driven, cyclical | User/stakeholder notification in set timeframes |
| Human interventions | Policy/manual log | Every action logged with detailed traceability |
| Fairness, bias, and safety proof | Process, periodic check | Continuous auditing, externalizable evidence |

ISO 42001 helps build the bones (roles, records, and routines) while the Act applies the muscle: “Prove your control, now, with evidence.” ISMS.online merges both, embedding live compliance checks and automated reporting across your organisation’s tech stack.

Does ISO 42001 certification fully satisfy EU AI Act obligations for high-risk AI systems?

Certification helps: it shows you’ve mapped controls, documented risks, and trained personnel in responsible operation. But alone, it’s not enough. The EU AI Act sets an expectation for operational, event-driven compliance: daily, even minute-by-minute, proof that your system is lawful, safe, and running within assigned parameters. A certificate validates your governance design, but only living technical files, access logs, and real-time response evidence protect you under regulatory scrutiny.

A credible platform like ISMS.online takes you from “policy on file” to “proof in the system,” merging continuous event capture, incident escalation, and audit dashboards designed for real-world probes, not just periodic certification.

A badge on your wall won’t protect you in court; only living, operational evidence can take the heat.

ISO 42001 alone brings:

- Tangible risk and reliability frameworks

- A map of governance and responsibility

- A structure for transparent, ongoing improvement

But to satisfy the AI Act, you must deploy:

- Automated, immutable logs for every critical event

- Technical files that update dynamically as models or data shift

- Interfaces for instant incident reporting and regulatory access

- Audit dashboards that bridge policy and daily action in real time

How can compliance leaders align ISO 42001 routines with the EU AI Act’s high-risk requirements?

Start by mapping control to obligation: identify where ISO 42001’s controls already satisfy the Act, and where they fall short, especially regarding live, actionable evidence. Automate the collection and protection of event logs and technical documentation; every intervention, override, and change should generate a traceable record linked to the compliance audit trail. Make sure your system supports real-time alerts, escalations, and reporting to both internal and third-party actors.

Compliance isn’t a project. It’s an immune system that should adapt and defend at the speed of risk.

Immediate actions for leaders

- Crosswalk every ISO 42001 control with a line-item from the EU AI Act

- Deploy technology that automates event and evidence capture across the stack

- Link technical documentation so every update or override triggers a compliance trace

- Assign, define, and regularly test override chains-these must be logged and explainable by role and event

- Expose operational status and audit evidence to select external parties; regulators expect transparent visibility, not just PDF policies

- Treat gap analysis as a living cycle, not an annual fire drill; initiate it after every significant tech, data, or legal update
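The crosswalk and gap analysis in the actions above can start as a very small data structure: a list of obligations and, for each one, the operational controls that evidence it. The sketch below is illustrative only. The requirement labels loosely follow this article's upgrade table, and the control identifiers such as `LOG-01` are hypothetical placeholders for entries in your own control register.

```python
# Hypothetical requirement labels, loosely following this article's upgrade table.
ACT_REQUIREMENTS = [
    "tamper_evident_logs",
    "bias_monitoring",
    "human_oversight_records",
    "incident_reporting",
    "user_transparency",
    "technical_documentation",
]


def find_gaps(requirements, crosswalk):
    """Return requirements that have no operational control mapped to them."""
    return [req for req in requirements if not crosswalk.get(req)]


# Hypothetical control identifiers; an empty list or a missing key counts as a gap.
crosswalk = {
    "tamper_evident_logs": ["LOG-01"],
    "bias_monitoring": [],
    "human_oversight_records": ["HITL-02", "HITL-03"],
    "incident_reporting": ["INC-01"],
    "technical_documentation": ["DOC-04"],
}

gaps = find_gaps(ACT_REQUIREMENTS, crosswalk)
```

With this data, `gaps` contains `bias_monitoring` and `user_transparency`. Re-running the check after each significant tech, data, or legal change is one way to keep gap analysis a living cycle rather than an annual exercise.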

ISMS.online excels in this role: bridging the living system of your controls with the unbroken evidence trail needed when the spotlight shifts rapidly from policy to proof.

Which ISO 42001 clauses most directly address EU AI Act requirements for high-risk AI oversight?

Certain ISO 42001 clauses are especially relevant:

- Clause 6 (Planning): Comprehensive risk assessment, mitigation, and lifecycle controls, echoing Article 9 of the EU AI Act.

- Clause 7 (Support): Focus on staff roles, competence, communication, and clear override chains (“human-in-the-loop” assurance).

- Clause 8 (Operation): Mandates ongoing monitoring, incident detection, technical documentation, and closed-loop feedback, in direct parallel to the Act’s live evidence bar.

- Clause 10 (Improvement): Drives responsive, continuous remediation and post-market surveillance, key for showing missed risks are spotted and fixed in short cycles.

| High-Risk Compliance Stage | ISO 42001 Anchor | EU AI Act Demand |
|---|---|---|
| Deep risk assessment | Clause 6 | Responsive, event-tied risk management |
| Real-time model monitoring | Clause 8, Annex A | Audit-ready, always-on technical files |
| Human factor accountability | Clauses 7.2, 8.1 | Logged interventions and override chains |
| Incident command/reporting | Clause 10, Annex A.8 | Evidence delivered inside notification window |

The highest standard isn’t the certificate; it’s being able to show, at any instant, how a key event was safeguarded, what happened, who solved it, and how the process improved as a result. With ISMS.online, you aren’t scrambling for old files or hoping last year’s report is enough. Your compliance, like your system, is always on.

The new audit starts when the issue hits, not when the calendar says it’s time.