Are You Building Responsible AI Into R&D, or Hoping to Survive a Headline?

Trust in artificial intelligence is rarely lost because of a single bad model. It’s lost in moments: the first audit you can’t support, the procurement question you stumble through, the regulator’s knock when your “responsible AI” turns out to be paper-thin. In practice, responsible AI isn’t a sparkling press release or a line in the annual report. It’s an engineered discipline, one that has to be lived, documented, and demonstrated from the initial idea through every launch.

For research and development teams, the era of “good intentions” is over. With new frameworks emerging, especially the ISO/IEC 42001:2023 management system standard, and with enforcement pressure building in both the EU and the US, responsible AI is now table stakes for market access, contract wins, and reputational survival.

Neglect responsible AI in R&D and you end up repairing trust only after it has fractured. Auditable controls, not crisis PR, are what keep you in business.

“Ethical AI” rhetoric hasn’t stopped regulators, buyers, or courts from scrutinising organisational claims. Twenty percent of AI litigation in Europe now centres on gaps or vagueness in R&D’s audit trail: process evidence that never existed, or that was bolted on after the fact (ITPro, 2024).

Organisations with a systematic, embedded approach to responsible AI don’t just avoid trouble; they get to market faster, close bigger deals, and set the standards that competitors must chase.

What Makes ISO 42001 a Game-Changer for Operationalising Responsible AI in R&D?

Unlike policy checklists or vague “AI principles,” ISO 42001 is designed to make responsible AI routine in day-to-day practice, including R&D, turning compliance from a marketing slogan into a competitive advantage. It converts the notion of “being ethical” into a rigorous, repeatable management system that covers process, proof, and continual improvement.

ISO 42001 is not a high-level code of conduct; it’s a living system:

- Specific process requirements for fairness, transparency, privacy, and safety are mapped directly into R&D workflows, not left for an end-of-cycle scramble.

- Continuous improvement, evidence logging, and top management oversight aren’t optional requests; they’re recurring, auditable expectations.

- Buyers and regulators are no longer told “We intend to be responsible.” Instead, they’re shown dashboards, logs, and review evidence that speak for themselves.

Responsible AI, built on ISO 42001, is your licence to operate: audit-ready, market-ready, and board-approved.

Conforming your R&D to ISO 42001 doesn’t mean tying up innovation in red tape. Instead, it means innovation is continually protected from reputational loss, litigation risk, and last-minute compliance chaos. It supports a defensible posture: every stakeholder, from the CISO to the board, gets real-time assurance that AI risk is proactively managed, not retroactively justified (KPMG, 2024).

Where Responsible AI Most Often Breaks, and How ISO 42001 Secures the Gaps

Responsible AI fails when you trust hope over structure. The trouble isn’t usually a catastrophic coding error. It’s process slippage. R&D teams skip or rush operational and ethical checks due to velocity pressure, unclear ownership, or misplaced optimism.

Three repeat offenders haunt most organisations:

- Tokenistic bias or fairness reviews at the project’s tail end.

- Documentation scattered, or left until audit panic sets in.

- Ethics left to individuals instead of being encoded in repeatable systems.

Auditors and buyers see through post-hoc attempts to piece together a process. The fix? ISO 42001 demands documented controls and proof at each R&D phase:

- Risk mapping, stakeholder analysis, legal checks, and fairness requirements shift from being “random good practice” to standard operating procedure-owned, scheduled, evidenced.

- Bias testing, impact reviews, and transparency checks are no longer leftovers; they recur, built into development sprints and change releases.

- Every control, whether a requirement, test result, incident, or redress action, is linked to a named owner and recorded with its escalation and action history.

Most public compliance failures stem from invisible process drift. ISO 42001 stops the rot before it starts by requiring every step to be visible, traceable, and subject to improvement.

ISO 42001’s lifecycle controls prevent silent decay between releases and team transitions. In practice, this means R&D can keep pace with evolving law, stakeholder demands, and a market that’s grown weary of AI-buzzword promises (AWS, 2024).

What Controls Should Operate in Every Step of R&D?

ISO 42001’s impact is practical, not philosophical. Its requirements apply across the AI system lifecycle, so they map directly onto each R&D stage and carry responsible AI expectations into the real-world workflow.

Early Strategy and Planning

- Ownership and accountability: Assign and document owners for ethics, compliance, and regulatory scanning.

- Stakeholder analysis: Assess impact and risk-who benefits, who could be harmed, where does the law bite?

- Objective and rationale record: Document AI project objectives, design justifications, and explain where controls are included or excluded for audit clarity.

Data and Model Development

- Data lineage: Maintain clear, auditable trails for data sources, representativeness, preparation, and fairness logging.

- Bias and explainability reviews: Audit and document these at every milestone. Rely on repeatable, scheduled reviews, not ad hoc ones.

- Change logs and sign-off trails: Every alteration, anomaly, and risk incident gets a documented, owner-verified path.
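Change logs and sign-off trails can be sketched in a few lines. The Python below is a minimal illustration under stated assumptions, not ISMS.online functionality and not something ISO 42001 prescribes: the `ChangeLog` class, its fields, and the hash-chaining approach are hypothetical choices that show how each entry can carry a named owner and approver while making after-the-fact edits detectable.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(body: dict) -> str:
    # sort_keys gives a stable serialisation, so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class ChangeLog:
    """Hypothetical append-only log; each entry embeds the hash of the one before it."""
    entries: list = field(default_factory=list)

    def record(self, change: str, owner: str, signed_off_by: str) -> dict:
        # Refuse anonymous changes: every alteration needs an owner and a sign-off.
        if not owner or not signed_off_by:
            raise ValueError("every change needs a named owner and a sign-off")
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "change": change,
            "owner": owner,
            "signed_off_by": signed_off_by,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _entry_hash(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _entry_hash(body):
                return False
            prev_hash = entry["hash"]
        return True
```

For example, `log.record("Swapped training dataset to v7", owner="a.khan", signed_off_by="risk.lead")` appends a signed entry, and running `log.verify()` before an audit confirms nothing was rewritten later. A real system would also persist the log somewhere tamper-resistant; the in-memory list here is only for illustration.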

Deployment and Operationalisation

- Template-driven enforcement: Embed responsible AI requirements within management systems and CI/CD pipelines.

- Access, monitoring, and alerting: Protect R&D infrastructure with access controls, and monitor models with live drift alerts and automated escalation to risk owners.

- Incident integration: Incidents, complaints, and redress actions feed directly back into the development lifecycle, with clear documentation.

Monitoring and Continuous Learning

- Fairness, bias, and drift metrics: Surface live dashboards for ongoing detection and intervention.

- Feedback loops: Harvest all exceptions and complaints for root-cause analysis and structural improvement.

- Living evidence for audits: All process proof is evergreen, ready for buyer, regulator, or internal scrutiny; no more “documentation day” panic.
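Drift metrics on a dashboard need a concrete statistic behind them. One common choice, swapped in here for illustration because the article does not name a metric, is the population stability index (PSI) computed over binned feature distributions. The function names, the `notify` callback, and the 0.25 alert threshold are assumptions; 0.25 is a widely used rule of thumb, not a value from ISO 42001.

```python
import math
from typing import Callable, Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI between a baseline and a live distribution, both given as counts per bin."""
    if len(expected) != len(actual):
        raise ValueError("baseline and live bins must align")
    e_total, a_total = sum(expected), sum(actual)
    psi = 0.0
    for e, a in zip(expected, actual):
        # Convert counts to proportions; eps avoids log(0) when a bin is empty.
        e_pct = max(e / e_total, eps)
        a_pct = max(a / a_total, eps)
        psi += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return psi


def check_drift(feature: str, expected: Sequence[float], actual: Sequence[float],
                owner: str, notify: Callable[[str, str], None],
                threshold: float = 0.25) -> float:
    """Compute PSI and escalate to the named risk owner if it exceeds the threshold."""
    psi = population_stability_index(expected, actual)
    if psi > threshold:
        # notify is a hypothetical hook: email, ticket, or chat alert in a real setup.
        notify(owner, f"Drift on '{feature}': PSI={psi:.3f} exceeds {threshold}")
    return psi
```

Calling `check_drift("income", [50, 30, 20], [20, 30, 50], "risk.owner", alert_fn)` on a reversed distribution produces a PSI of roughly 0.55 and triggers the alert, while small shifts stay well below the threshold. Passing the escalation path in as a callback keeps the metric independent of whichever alerting tool you use.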

By making these requirements operational, ISO 42001 shifts responsible AI from management philosophy to competitive advantage.

A strong process is a selling point, not a cost-sink. Your buyers, board, and regulators appreciate fast, meaningful R&D answers over hopeful assertions.

How to Make Fairness, Bias, and Ethics Actionable, Not Empty Promises

There’s no “ethics-officer-of-the-month” award for patching bias after release or improvising transparency statements on the fly. Responsible AI must become muscle memory for your teams.

If you still handle fairness as a quarterly afterthought-or leave transparency for the legal review-your R&D invites both compliance risk and competitive irrelevance.

To operationalise responsible AI:

- Integrate fairness into automated build and test cycles, not just annual policy updates.

- Assign clear accountability lanes: Each area (fairness, transparency, incidents) needs a named, empowered owner able to block go-live or escalate.

- Report every redress and near-miss: Capture issues as stories and metrics that actually refine the process, not just as compliance checkmarks.
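Integrating fairness into build and test cycles can take the form of a gate that fails the pipeline when a fairness metric exceeds an agreed limit. This sketch assumes demographic parity difference as the metric and a 0.1 limit purely for illustration; your own fairness definition and threshold should come from your context, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Hashable, Sequence


def demographic_parity_difference(predictions: Sequence[int],
                                  groups: Sequence[Hashable]) -> float:
    """Largest gap in positive-prediction rate between any two groups."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def fairness_gate(predictions: Sequence[int], groups: Sequence[Hashable],
                  max_gap: float = 0.1) -> bool:
    """Return True if the build may proceed (0.1 is a hypothetical limit)."""
    gap = demographic_parity_difference(predictions, groups)
    # Printing the value leaves a trace in the CI log for later evidence.
    print(f"demographic parity difference = {gap:.3f} (limit {max_gap})")
    return gap <= max_gap


def main(predictions: Sequence[int], groups: Sequence[Hashable]) -> int:
    """Exit code for a CI step: 0 lets the build continue, 1 fails it."""
    return 0 if fairness_gate(predictions, groups) else 1
```

In a CI job this would run after the test suite, for example as `sys.exit(main(preds, groups))` on held-out predictions, so a failing gate blocks the release. Demographic parity is only one of several fairness definitions; equalised odds or calibration-based metrics may suit some contexts better.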

Your goal? Provide real-time evidence of responsible AI, not a dusty compliance log kept only for auditors.

Live dashboards, instant fairness and drift metrics, and a direct redress pipeline separate leaders from laggards in the responsible AI era (GRSee, 2024).

When customers or authorities call, your answer is a click, not a hunt for last year’s forgotten spreadsheets.

Turning Continuous Audit-Readiness Into an R&D Moat, Not a Stress Test

Compliance that lives at the end of the cycle wears out your people and your business. ISO 42001 creates a compliance environment that operates continuously: evidence, audit, and response stay current and keep improving.

- Proactive monitoring: Dashboards surface issues before they escalate; compliance teams see drift and bias as soon as they emerge.

- Audit trails built into workflow: Every requirement, approval, change, and resolution is documented, visible, and ready for validation.

- Incident response as proof: Playbooks aren’t theoretical. R&D’s investigations and remediation are tracked centrally through to closure and stand up to external review.

- Agility with regulation: As laws or buyer needs evolve, so does your process. No dead documents, only live, versioned procedures.

ISO 42001 is not just compliance; it delivers reliability, streamlined audits, and operational confidence for teams and external partners (ODIC, 2024).

Buyers and boards see embedded compliance and operational resilience as signals that separate market leaders from the “just get it shipped” crowd.

Why ISO 42001-Aligned R&D Is Your Trust Dividend, Not Extra Overhead

The business case for embedding responsible AI to the level of ISO 42001 conformance is direct and measurable.

- Enterprise customers require auditable fairness, bias, and incident evidence from every supplier; they no longer take ethics on trust.

- Regulators expect demonstration, not intention: your controls must show compliance, not promise it.

- Boards focus on continuous improvement: they trust R&D less when risk management looks like “see no evil, patch when caught.”

Every documented iteration, captured complaint, and transparent fix makes your company easier to buy from and harder for competitors to challenge.

Continuous improvement and real-world AI governance underpin contract wins and public trust in the era of new tech regulation (KPMG, 2024).

This is more than defence. ISO 42001-aligned R&D gives you a head start: every audit-ready control you build becomes a trust asset, not a documentation burden.

How ISMS.online Powers Responsible AI in R&D, Without Manual Compliance Pain

You don’t have to choose between innovation velocity and proof of responsibility. ISMS.online brings operational confidence to your R&D pipeline. By aligning daily workflows, documentation, and automated logs with ISO 42001, our platform turns compliance from a drag into an operating advantage.

Using ISMS.online, your teams can:

- Automate documentation, fairness and bias logs, and process evidence for every project cycle or procurement event.

- Streamline third-party audits and regulatory checks: auditable signals are integrated, not pieced together under duress.

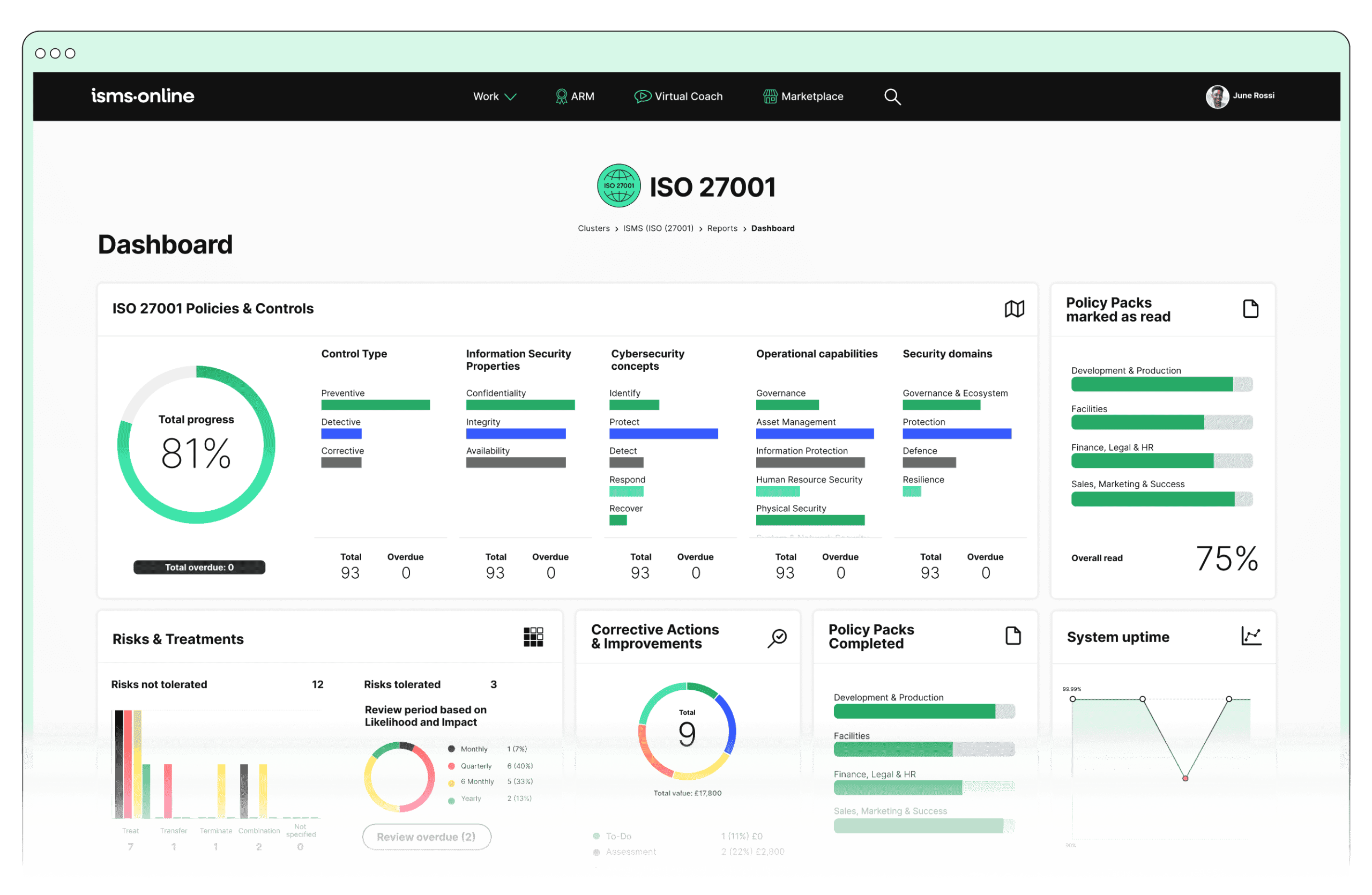

- Give board members and key buyers live, dashboard-based visibility into responsible AI practice, not after-the-fact reports.

Responsible AI shifts from aspiration to operational advantage, and it is now the baseline buyers and regulators expect for trust.

Future-ready R&D teams use ISMS.online not just for risk avoidance, but to claim the trust premium: regulators, customers, and the board can all see your controls, your improvements, and your readiness for tomorrow’s scrutiny.

Build your advantage now. Trust isn’t given. With responsible AI, it’s always earned, proven, and continuously renewed.

Frequently Asked Questions

How does ISO 42001 move responsible AI from theory to daily R&D operations?

ISO 42001 turns responsible AI from a promise into a working discipline by making every design, build, and deployment decision visible, owned, and reviewable. In practical terms, that means no more “trust us” or hand-waving about ethics; instead, every AI project is tracked with explicit roles, process trails, measurable criteria, and trigger points for review. Fairness, transparency, and risk are embedded in your team’s workflow, not retrofitted after the fact.

Translating principles into results

- Ownership tied to action: If a system fails, you know exactly which check was missed and who is responsible, not in theory but in a signed audit record.

- Documented evidence at every step: Data choices, risk scans, and explainability tests each leave a digital fingerprint. Internal reviews can surface systemic issues before clients or regulators ever spot a problem.

- Fixed before shipping: Compliance isn’t a panic move after a press story breaks. It’s woven into design sprints and code commits from day one.

- Bias and impact handled up front: Team members can’t sidestep potential issues; mitigations and their rationales are required, not optional.

- No accidental drift: Regular review cycles keep good intentions from rotting on the shelf as the project matures, so everything stays aligned with current expectations.

Which ISO 42001 controls provide unambiguous trust in research and development environments?

ISO 42001’s most potent R&D controls eliminate the ambiguity plaguing “soft” responsible AI policies. Their design makes ethical lapses traceable, while surfacing accountability gaps before they become liabilities.

The controls that change the game

- Policies and roles (A.2 / A.3): Every major design action-dataset choice, model deployment, risk acceptance-has a named responsible party and a verifiable paper trail.

- Impact and bias analysis (A.5): No new system launches without a documented review of risks, privacy, group exposure, and clear bias countermeasures. Fail the review and there is no green light.

- Data quality governance (A.7): Every dataset carries metadata on where it came from, who vetted it, and when it changed. No “mystery sources” leaking bias or errors.

- Ongoing explainability and redress (A.6 / A.8): Transparency tools run alongside code. Stakeholder disclosures, incident logs, and update histories are on tap, never hidden or outdated.

- Iterative fairness (Annex C): R&D teams define, measure, and refine outcome fairness alongside business goals. Improvements and setbacks are both tracked.

Teams that internalise these controls find audits become routine, customer trust becomes standard, and regulatory shifts stop feeling like threats.

R&D roles become clear, not interchangeable

- Responsible parties are listed by name, not as “the team.”

- Audit logs are auto-generated, not reconstructed after the fact.

- Stakeholder challenges are documented, handled, and visible, shrinking PR risk and operational confusion.

What operational habits drive genuine fairness and transparency for AI under ISO 42001?

Ensuring fairness and transparency under ISO 42001 means building them into daily routines, not quarterly checklists. Real progress comes from systemic habits your team can measure.

Habits that move the fairness needle

- Define what fairness means for your context: Bring business, legal, and technical leaders together to set evidence-based metrics and red lines. These become your live reference points, not afterthoughts.

- Bake assessments into every model change: Each time a dataset is swapped or a model is retrained, your pipeline triggers an impact check. This doesn’t slow development; it rescues you from blind spots.

- Pin explainability to release criteria: If a model’s predictions can’t be explained to the agreed standard, it doesn’t ship. Pass/fail results are tracked and audited.

- Automate, don’t depend on memory: Use live bias detectors, drift alerts, and structured logs to catch issues before they multiply.

- Share more than you’re comfortable with: Continuous updates to customers, partners, and oversight bodies, especially when something goes wrong, build trust out of uncomfortable truth, not spin.
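Baking an impact check into every model change requires a reliable way to detect that the data or model actually changed. A minimal sketch, assuming a hash of the training data plus the model configuration is an acceptable change signal; the function names and that design choice are illustrative, not part of ISO 42001.

```python
import hashlib
import json
from typing import Optional


def artefact_fingerprint(dataset: bytes, model_config: dict) -> str:
    """Stable SHA-256 fingerprint over training data and model configuration."""
    digest = hashlib.sha256()
    # Hash the raw dataset bytes first so large inputs contribute a fixed-size value.
    digest.update(hashlib.sha256(dataset).digest())
    # sort_keys makes the config contribution independent of dict key order.
    digest.update(json.dumps(model_config, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()


def needs_impact_check(dataset: bytes, model_config: dict,
                       last_assessed: Optional[str]) -> bool:
    """True if data or config differ from what the last impact assessment covered."""
    return artefact_fingerprint(dataset, model_config) != last_assessed
```

A pipeline step would store the fingerprint alongside each signed-off assessment and block the release while `needs_impact_check` returns `True`. Hashing exact bytes is deliberately strict: even a harmless reformat of the data triggers a review, which errs on the side of traceability.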

How do you prove responsible AI to boards and regulators without stalling innovation?

ISO 42001 doesn’t just place more hurdles in R&D’s way; it arms your team with prebuilt evidence streams. The trick is converting this rigour into business speed, not into more paperwork when scrutiny intensifies.

Four proof layers you can show, instantly

- Current policy logs: Live documentation of every approval and AI use policy, ready for review.

- Metrics dashboards: Numbers update in real time; auditors and executives get clear fairness, drift, and explainability scores, not 40-page PDFs four weeks late.

- Time-stamped incident chains: Every misstep, fix, and exception is tracked, making continuous improvement visible, not a promise.

- Engagement archives: Feedback, complaints, and action logs from every stakeholder; no more lost or contested suggestions.

When evidence is a tap away, your team isn’t paralysed by the next “show me” request. You move forward with confidence, and you can prove it at any buyer, auditor, or boardroom table.

What measurable business advantage does ISO 42001 operational compliance unlock for R&D?

The shift from “checkbox” compliance to daily discipline flips risk from threat to differentiator. Teams that weaponize ISO 42001’s controls turn audits and buyer reviews into growth levers, not gating burdens.

Tangible levers for R&D performance

- Continuous compliance as sales fuel: Buyers bypass teams with lagging controls. Being ready, all the time, is a trust magnet.

- Shorter deal cycles and fewer blockers: When procurement asks for evidence, you respond today, not “by quarter-end.”

- Boardroom confidence, not last-minute panic: Live audit logs and incident visibility mean no surprises for executives or partners, even under cross-examination.

- Faster shipping, smarter iteration: Ongoing feedback and review cycles become muscle memory, letting you outpace rivals rather than chase them.

How does “living” ISO 42001 compliance transform R&D into a market leader year-round?

The difference between compliance fatigue and competitive leadership comes down to rhythm. With ISO 42001 internalised, improvement isn’t an event; it’s your default stance.

Key routines for perpetual advantage

- Schedule risk and performance reviews before problems appear.

- Run scenario drills so response speed is second nature, not a scramble.

- Bring in outsiders for regular, adversarial checks (think “white-hat” audits).

- Channel every lesson straight back into requirements and launches.

- Make progress public: let stakeholders and buyers see evidence of your improvements, not just headlines about failures.

When readiness, trust, and results become your visible baseline, you’re not playing defence. R&D, supported by ISMS.online’s discipline, leads from the front: every review, every launch, every day.