Are Artificial Intelligence and Machine Learning Directly Regulated by the NIS 2 Directive?

As AI and machine learning (ML) systems take on critical decision-making roles across the European economy, a core question arises for boardrooms and compliance leaders: does the NIS 2 Directive regulate AI/ML technologies today, and what might tomorrow bring? For now, the answer is blunt: NIS 2 does not explicitly list or define artificial intelligence or machine learning as regulated technology categories. Instead, the directive applies functionally, targeting cyber and operational resilience for essential and important entities in the sectors named in its Annexes I and II (energy, health, digital infrastructure, banking, and more).

However, look beneath the surface: AI/ML systems that underpin or enable essential services are in scope by function, not by technology type. For instance, an AI-powered fraud detection engine at a bank, or an ML-based grid optimization tool at a power utility, becomes regulated under NIS 2 not because it’s “AI” but because it is essential. Conversely, general research, beta products, or non-production AI pilots outside those core sectors sit outside NIS 2’s scope, at least for now (NIS 2, Article 2).

Compliance risk emerges not from the name on the technology, but from the impact it has if it fails.

The directive’s text (notably Recital 51) even favours innovation, asking Member States to encourage the use of innovative technology, including artificial intelligence, to improve the detection and prevention of cyberattacks. Still, there are no mandated AI-specific security controls or reporting protocols in the text. All risk management, incident notification, and supply chain requirements apply to the regulated entity holistically. AI is pulled into scope only when it is essential to a regulated service.

This means:

- You don’t report an “AI incident”; you report a major service incident as required under NIS 2.

- There are no ML-specific obligations for assurance, documentation, or transparency; those still sit in sector guidelines and the forthcoming AI Act.

Today’s compliance expectation: If AI powers your essential service, treat it as in-scope for NIS 2, even if the word AI never appears in the law.

How Are NIS 2 Obligations for AI and ML Set to Evolve?

Europe’s regulators and cyber authorities have signalled a major shift: explicit, harmonised AI/ML controls are expected to reach NIS 2 via technical guidance, amendments, and cross-referencing with the AI Act and ENISA frameworks. The gradual move from “generic coverage” to “named-and-mapped obligations” is already underway.

The Roadmap: From Implied to Explicit AI/ML Governance

- ENISA and standards bodies (CEN, CENELEC, ETSI) are spearheading initiatives to link AI/ML-specific operational security directly to NIS 2’s core requirements (ENISA NIS2 Technical Implementation Guidance 2024). This includes guidance on risk assessment, assurance, supply chain scrutiny, and auditability for “high-risk” AI systems.

- Sectoral mapping is accelerating: If AI/ML is deployed in health, energy, finance, or digital infrastructure, expect requirements like:

  - AI/ML asset cataloguing and risk documentation (SBOM, supply checks)

  - Auditability and failure logging for models

  - Transparency and explainability when human safety is involved

  - Bespoke incident reporting covering AI model failures or attacks (poisoning, adversarial manipulation)

- AI Act (Regulation (EU) 2024/1689) interlock: Once the EU AI Act applies, its “high-risk” systems are expected to attract NIS 2 duties when deployed inside regulated sectors. This is not duplication; it is harmonised alignment.

- Formal NIS 2 review in 2026: This is the key intersection, where technical standards, AI-specific controls, and incident reporting protocols are scheduled to converge for revision.

Regulatory sandboxing is ending. Organisations are expected to audit and document their AI/ML exposures now, not just when revisions become law.

Regulatory stack: NIS 2 forms the compliance base, with the AI Act above it, and ENISA/standards as supporting struts. A marked arrow points to the upcoming 2026 review, a date for your compliance calendar.

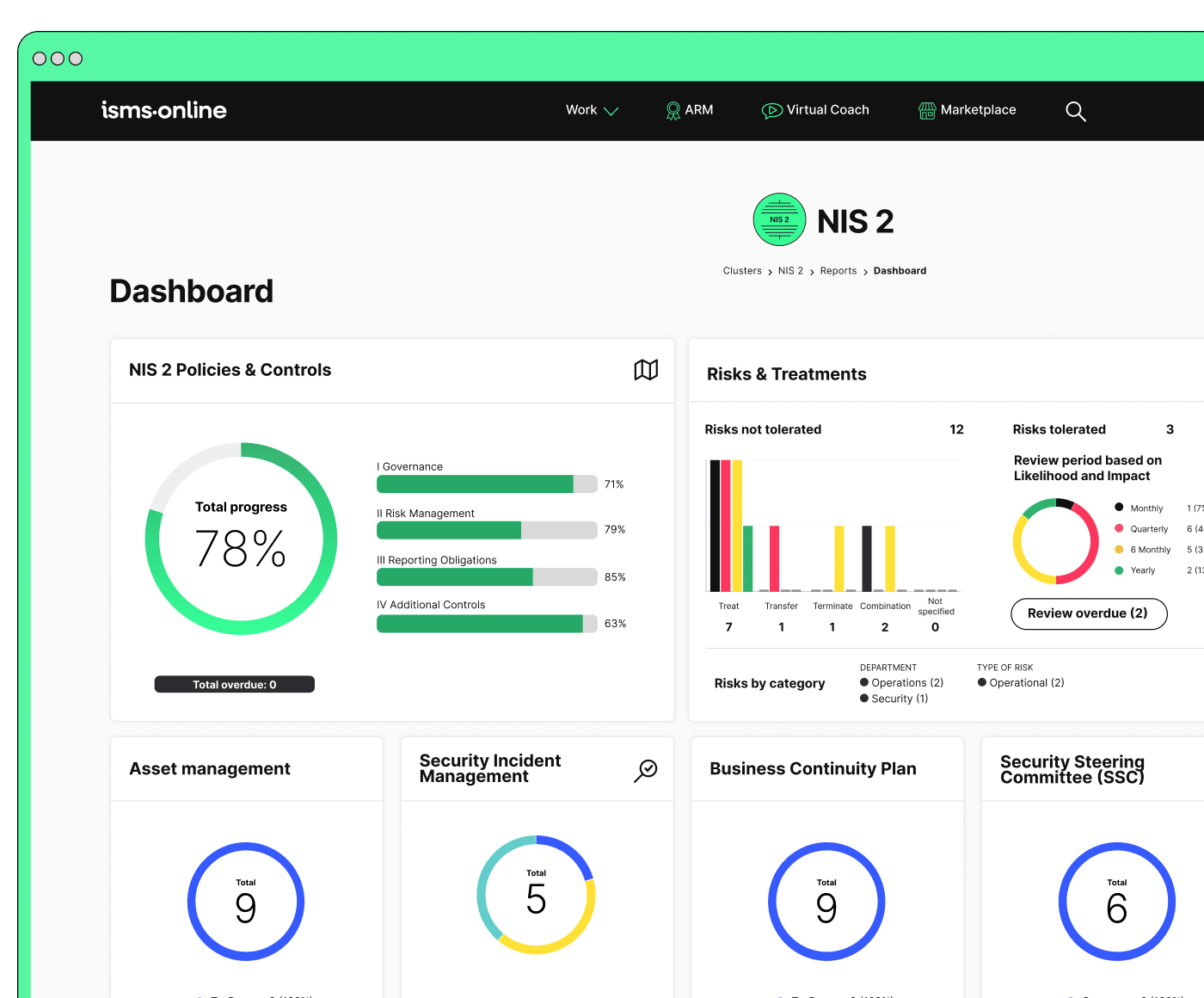

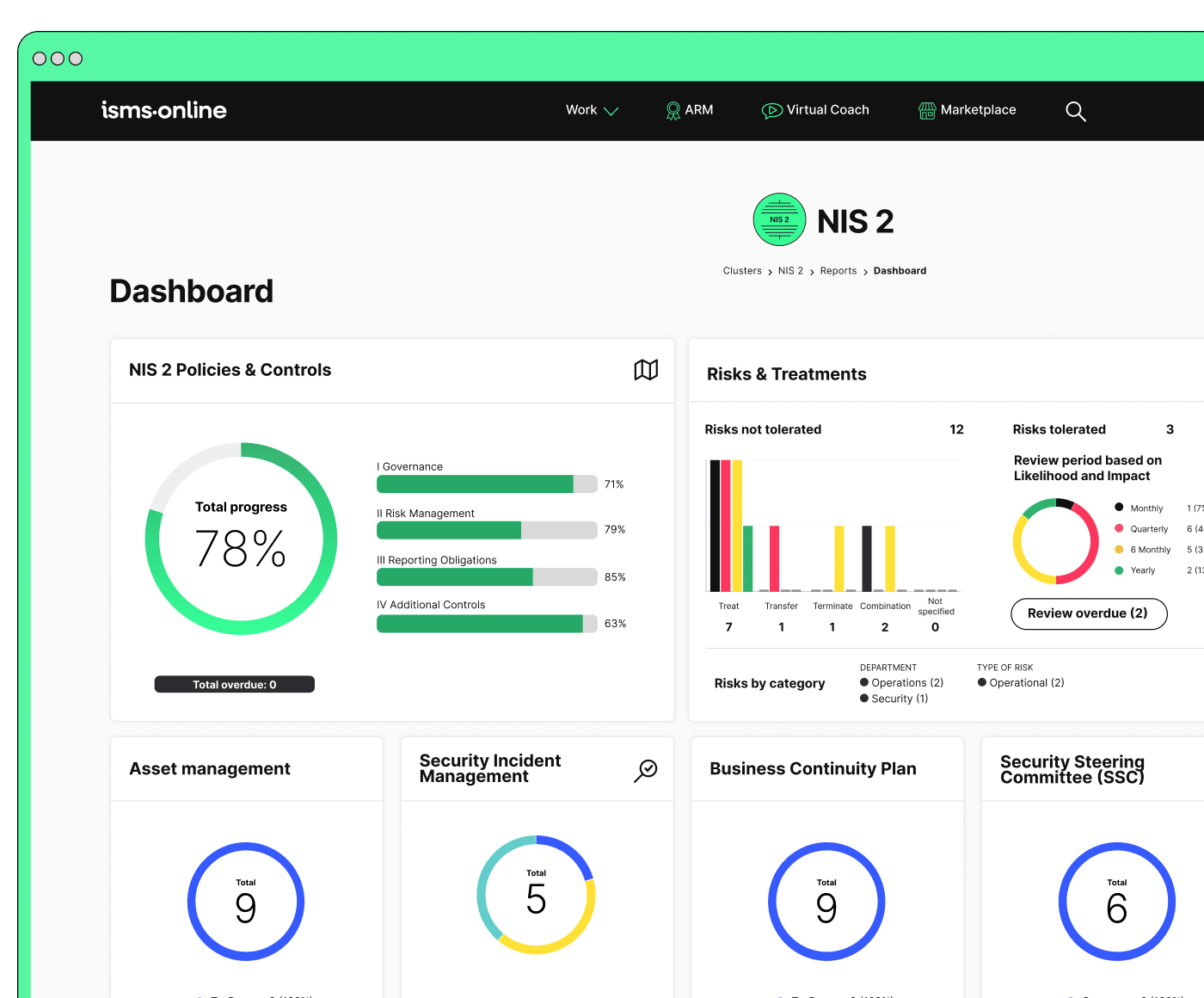

Master NIS 2 without spreadsheet chaos

Centralise risk, incidents, suppliers, and evidence in one clean platform.

How Will NIS 2, the EU AI Act, and ISO 42001 Work Together on AI/ML Compliance?

In practical terms, European AI/ML compliance is being structured as a governance trinity: NIS 2, the EU AI Act, and ISO 42001. Each shapes part of the risk and assurance cycle. This is not a siloed regime, but a layered one.

Compliance Integration: Beyond Siloed Controls

- NIS 2: Sets foundational requirements (risk and asset register, incident notification, supply chain resilience), with AI systems falling into scope by sectoral function.

- EU AI Act: Defines “high-risk” AI/ML systems, with attention to explainability, human oversight, lifecycle management, and robust documentation. “High-risk” status in your sector becomes a flag for NIS 2 application as well (AI Act Overview).

- ISO/IEC 42001:2023: Provides a structured management system for AI governance, expanding ISO 27001/ISMS best practice to encompass AI-specific asset tracking, stakeholder responsibility, risk controls, and audit trails.

| Framework | Core Focus | Key Compliance Activities |
|---|---|---|
| NIS 2 | Cyber resilience | Risk register and AI asset mapping, supplier due diligence, IR plans |
| EU AI Act | System governance | Human oversight, documentation, explainability, lifecycle management |
| ISO/IEC 42001 | Management system | Asset listing, risk owner assignment, SoA controls tied to model assets |

Tomorrow’s regulators will expect ISMS, privacy, and AI governance to be mapped in a single system of record.

Case Study Example:

A hospital deploys an AI diagnostic engine, which directly impacts patient care.

- NIS 2: The hospital is an “essential” entity and the system is critical, so all AI-linked incidents and controls are in scope.

- AI Act: Model is “high-risk,” demanding transparent logging, human oversight, and robust audit trails.

- ISO 42001: Model is registered as an asset within the ISMS, tied to risk, incident, and review procedures.

What Are the Cyber-Security and Compliance Risks for AI/ML Under NIS 2?

AI/ML expands the digital attack surface, and NIS 2 expects regulated entities to anticipate and safeguard against these risks alongside regular IT threats.

Priority Risk Domains

- Model/Data Poisoning: Malicious manipulation of data or model weights causing downstream harm. Mitigated by data pipeline controls, model versioning, and integrity checks (NIS 2 Article 21.2a/f: risk analysis policies and effectiveness assessment).

- Supply Chain Exposure: AI/ML models often incorporate third-party or open-source code and pre-trained models. SBOMs (Software Bills of Materials), supplier security audits, and signature checks are the expected controls (NIS 2 Article 21.2d/e: supply chain security and secure acquisition, development, and maintenance).

- Black Box / Explainability Gaps: A lack of traceability complicates incident response and regulatory reporting. It is addressed via logging, playback of model decisions, and periodic reviews (NIS 2 Article 21.2b/f: incident handling and effectiveness assessment).

- Continuous Model Drift: Models adapting in unforeseen ways can amplify risk if monitoring is absent, so periodic review triggers in the ISMS and ISO 42001 are vital.

- Insider Misuse: Weak access controls among data engineering, AI, and infrastructure staff may facilitate tampering or data leakage.
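The integrity checks mentioned for poisoning and supply chain exposure can be illustrated with a minimal sketch. It assumes a digest was recorded for each model artifact when it was approved; the function names and the idea of storing that digest in the asset register are illustrative assumptions, not something NIS 2 prescribes.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def artifact_is_unchanged(path: Path, recorded_digest: str) -> bool:
    """Compare the current digest with the one recorded at approval time.

    `recorded_digest` is a hypothetical value kept in the asset register.
    """
    return hmac.compare_digest(sha256_of_file(path), recorded_digest.lower())
```

A check like this only shows that the file has not changed since approval. It says nothing about whether the approved model was trustworthy in the first place, which is where supplier attestations and data provenance logs come in.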

| AI/ML Risk | NIS 2 Article 21 Duty | Best Practice Control |
|---|---|---|
| Model Poisoning | Risk analysis (21.2a), effectiveness testing (21.2f) | Data provenance logs, threat models, approvals |
| Supply Chain Risk | Supply chain security (21.2d), secure acquisition and development (21.2e) | SBOM, signed supplier attestations, audits |
| Explainability Gaps | Incident handling (21.2b), effectiveness assessment (21.2f) | Model decision logs, IR playbooks |

Each risk needs an audit trail with a clear asset → risk → control → evidence chain.
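One way to picture the asset → risk → control → evidence chain is as a small data model with a gap check. This is a sketch only: the class names, fields, and example values are hypothetical and do not reflect any particular GRC tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Control:
    ref: str                                   # e.g. "A.5.19" (illustrative)
    evidence: list[str] = field(default_factory=list)


@dataclass
class Risk:
    name: str
    owner: Optional[str] = None
    controls: list[Control] = field(default_factory=list)


@dataclass
class Asset:
    name: str
    risks: list[Risk] = field(default_factory=list)


def chain_gaps(asset: Asset) -> list[str]:
    """List every point where the asset -> risk -> control -> evidence chain breaks."""
    gaps: list[str] = []
    if not asset.risks:
        gaps.append(f"{asset.name}: no risks recorded")
    for risk in asset.risks:
        if risk.owner is None:
            gaps.append(f"{asset.name} / {risk.name}: no risk owner")
        if not risk.controls:
            gaps.append(f"{asset.name} / {risk.name}: no control mapped")
        for control in risk.controls:
            if not control.evidence:
                gaps.append(f"{asset.name} / {risk.name} / {control.ref}: no evidence")
    return gaps


# Hypothetical example: one control has evidence attached, one does not.
model = Asset(
    "fraud-model-v3",
    [Risk("data poisoning", owner="Head of Data",
          controls=[Control("A.5.19", ["sbom.json"]), Control("A.8.8")])],
)
print(chain_gaps(model))
```

An empty result means every recorded risk has an owner, at least one control, and evidence behind each control. It does not mean the risk list itself is complete.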

ENISA’s official AI Threat Landscape and AI Supply Chain Guidance (ENISA 2024) further break down the most urgent scenarios and mitigation blueprints.

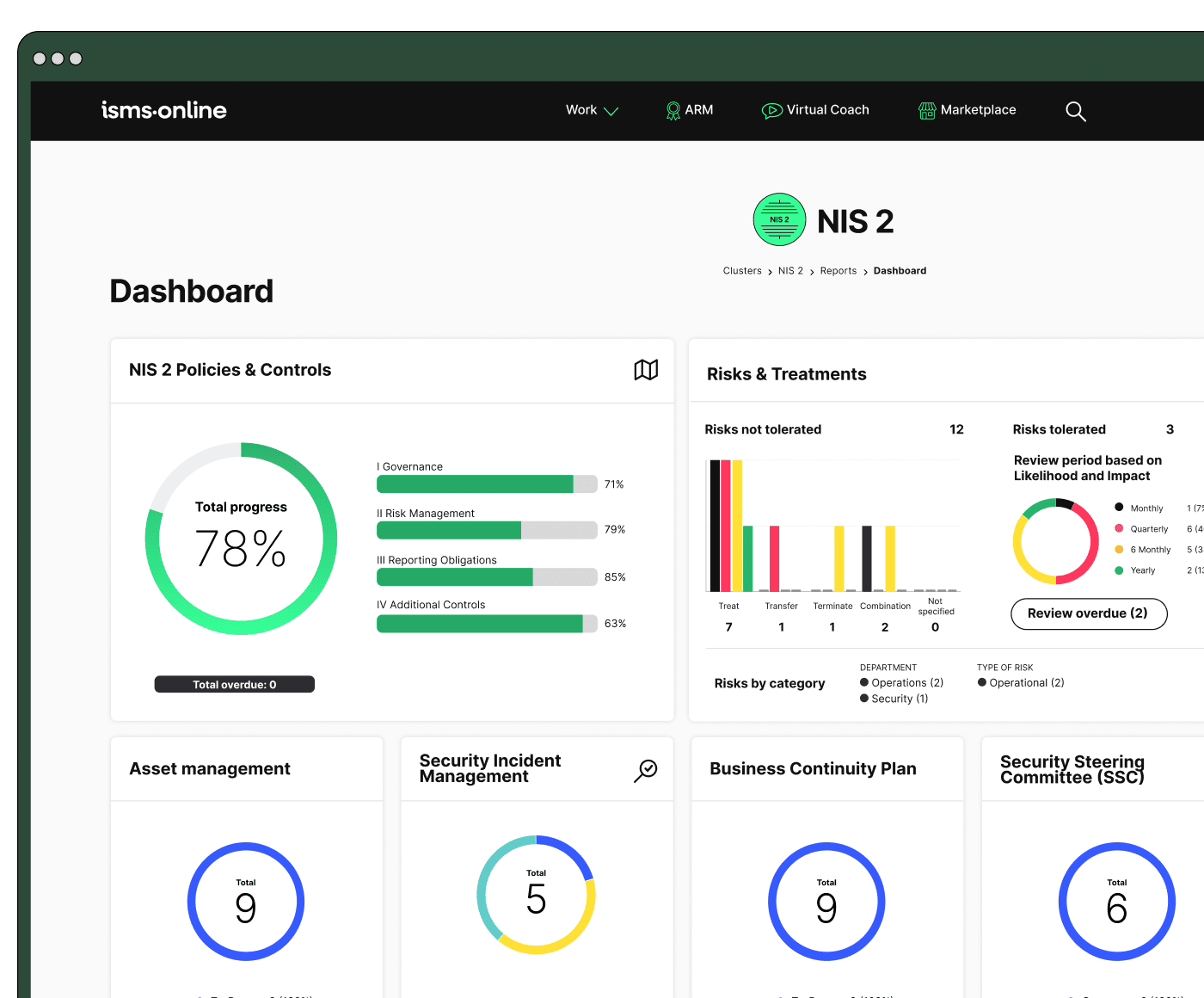

Be NIS 2-ready from day one

Launch with a proven workspace and templates – just tailor, assign, and go.

How Can You Future-Proof NIS 2 Compliance for AI/ML Deployments?

True future-proofing is not about box-ticking for current audits, but about building a system of traceability, documentation, and scenario rehearsal that can scale with regulatory expectations.

Six-Step Proactive Compliance Playbook

- Catalogue All AI/ML Assets: List every model, pipeline, dataset, and supporting application as registered assets in your ISMS.

- Update the Risk Register Routinely: Explicitly log threats like poisoning, drift, supply chain, and black-box exposures. Assign risk owners and auto-triggered reviews.

- Upgrade Supplier Assurance: Demand SBOM, attestation, and periodic audit evidence for all third-party models and suppliers. Document every assessment.

- Automate Linkage (Traceability): Leverage ISMS.online’s linked work features to join asset, risk, control, and evidence into a transparent, auditable chain.

- Upskill Staff for Incident Readiness: Train not just CISOs but DevOps, data science, and privacy teams in AI-centric incident response, and rehearse it periodically.

- Map Controls Across Frameworks: For each AI/ML asset, link specifics to NIS 2 (function), AI Act (governance), and ISO 42001 (management). Keep every mapping live in your GRC ecosystem.

| Trigger Event | Risk Register Update | SoA / Control Ref | Evidence Logged |
|---|---|---|---|
| AI release / update | Poisoning, drift, supply chain exposures | NIS 2 21.2a/d/f, AI Act, ISO 42001 | Threat model, SBOM, test results |
| Vendor code update | Third-party risk, model integrity | NIS 2 21.2d/e | SBOM, audit report, approval log |
| Model drift incident | Performance/black box risk | NIS 2 21.2b, A.5.7 | Incident report, IR log |

Building traceability now is cheap insurance for the regulatory storms of tomorrow.
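The trigger-to-evidence table above can also be expressed as a simple lookup that flags what is still missing when an event is logged. The dictionary keys and function name are hypothetical; the evidence items are taken from the table.

```python
# Evidence expected per trigger event, transcribed from the table above.
# The trigger keys are illustrative identifiers, not a standard vocabulary.
REQUIRED_EVIDENCE = {
    "ai_release": {"threat model", "sbom", "test results"},
    "vendor_code_update": {"sbom", "audit report", "approval log"},
    "model_drift_incident": {"incident report", "ir log"},
}


def missing_evidence(trigger: str, logged: list[str]) -> list[str]:
    """Return the evidence items still missing for a trigger, sorted by name."""
    required = REQUIRED_EVIDENCE[trigger]
    present = {item.strip().lower() for item in logged}
    return sorted(required - present)


print(missing_evidence("vendor_code_update", ["SBOM"]))
```

Running a check like this at release or change-approval time is one way to keep the evidence column from being filled in retrospectively.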

Where Should You Begin: Immediate Steps for Cross-Persona Compliance Teams

Take action before requirements harden into fines or lost revenue:

- AI/ML Inventory: Pull together a full register of all models, supporting data, and critical APIs.

- Live Risk Register: Integrate explicit model threats and assign live risk owners.

- Supplier Evidence: Collect SBOM, contracts, and attestations as standard procurement checkpoints for all model/code suppliers.

- Test Evidence Chains: Simulate an incident report. Can you trace from asset to risk to control to evidence log in under an hour?

- Drill and Update: Refresh scenario plans (compromise, poisoning, drift) quarterly and retrain teams.

- Stay Engaged: Monitor ENISA releases, join sector consultations, and participate in policy development to keep ahead.

Audit readiness is fast becoming a competitive asset. It’s no longer just about cost or avoidance but about shaping trust with every stakeholder.

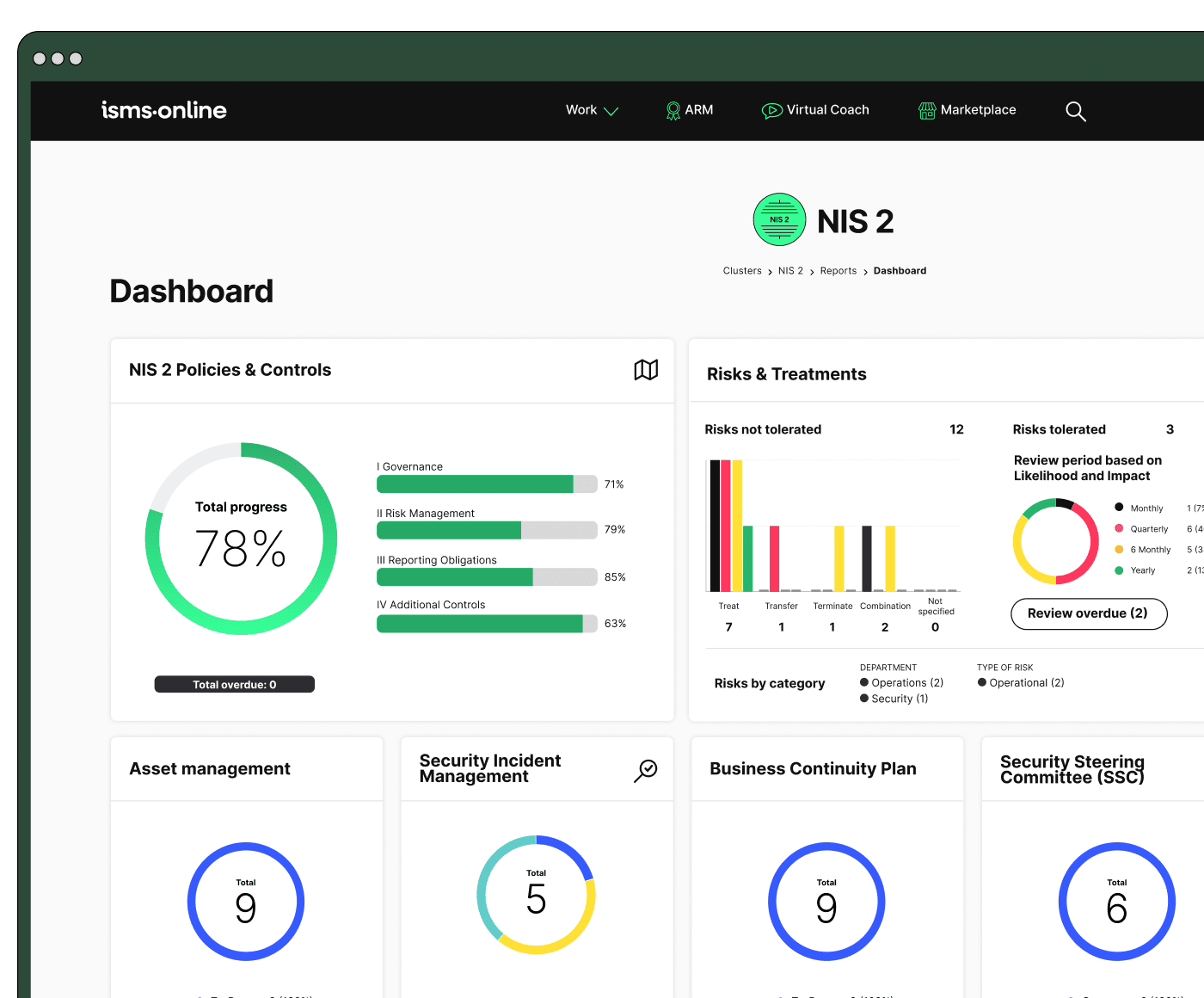

All your NIS 2, all in one place

From Articles 20–23 to audit plans – run and prove compliance, end-to-end.

Why Proactive Compliance Leadership Is Now a Boardroom Imperative

The convergence of AI governance and cyber compliance (under NIS 2, the AI Act, and ISO 42001) is transforming compliance from an IT afterthought into a new category of trust capital. Leadership teams that catalogue, risk-map, and trace all AI/ML assets today gain in audit speed, regulatory trust, and business velocity, putting themselves ahead of the perpetual compliance scramble.

With ISMS.online, you can:

- Register every AI/ML model, data pipeline, and API as an ISMS asset, tying it to NIS 2, AI Act, and ISO/IEC 42001 requirements.

- Automate documentation, supplier management, risk/control chaining, and evidence logging in a shared hub.

- Prove explainability, audit readiness, supply chain integrity, and cross-regime compliance before it’s specifically demanded in regulation.

Being ready for what is coming beats scrambling to react when it lands. Audit-ready, mapped, and future-proof: that’s the new standard.

ISO 27001–Annex A Bridge Table: AI/ML Operations Traceability

Expectation → Operationalisation → ISO 27001 / Annex A Reference

| Expectation | Operationalisation | ISO 27001 / Annex A Ref |
|---|---|---|
| AI/ML Asset Inventory | All models, pipelines, APIs logged as assets | Clauses 8.1, 8.2; A.5.9, A.8.32 |
| Risk Register | Explicit model risks, mapped controls, owners | Clauses 6.1, 8.2; A.5.7 |
| Supplier Evidence | SBOM, periodic audits for every supplier | A.5.19, A.5.20, A.5.21 |
| Control Linkage (SoA) | Tag AI assets to NIS 2/AI Act/ISO 42001 controls | Clause 6.1.3; Annex A |
| Evidence Chain | Log every deployment, update, incident, owner | Clauses 7.5, 10.1; A.8.15 |

Sample Traceability Table for NIS 2 and AI/ML

| Trigger | Risk Update | SoA/Control Link | Evidence Logged |
|---|---|---|---|
| Model deployed | Poisoning, supply chain | A.5.7, A.5.19, A.8.32 | Asset log, SBOM, test results |
| Vendor update | Supply chain, drift risk | A.5.19, A.5.21, A.8.11 | SBOM, approval trail |
| Model drift event | Performance/black box risk | A.5.7, A.5.9 | Incident report, IR log |

Take Charge: Set the Compliance Pace with ISMS.online

Your organisation’s reputation, audit velocity, and regulatory standing depend on closing the gap before it becomes a chasm. Use ISMS.online to map every AI/ML asset to a live control, risk, and evidence chain, and to automate what is coming under NIS 2, the AI Act, and ISO 42001. Don’t wait for new rules to catch you off guard; lead, and be seen as audit-ready, traceable, and future-proof.

Your customers and regulators are already asking for proof. Set the standard. Be the reference.

Frequently Asked Questions

How does the NIS 2 Directive currently address artificial intelligence (AI) and machine learning (ML) systems?

NIS 2 doesn’t explicitly name AI or ML, but once your artificial intelligence or machine learning system shapes a regulated service, it’s treated as a critical asset subject to full compliance. It’s the operational placement (powering healthcare diagnostics, energy forecasting, or financial anti-fraud routines) that triggers inclusion, not whether it’s labelled “AI” or “ML” in your documentation.

As soon as your AI/ML models underpin production systems in energy, healthcare, banking, or similar sectors, you’re expected to inventory them, log model-specific threats in your risk register, govern supply chain and vendor dependencies, and demonstrate readiness through incident rehearsals and control effectiveness. Pilot or “sandboxed” AI/ML models that never impact in-scope business functions may stay out of scope, but as soon as they drive or support the workflows of an essential or important entity, NIS 2 obligations apply, with no exceptions.

The instant artificial intelligence becomes part of your live control environment, it must be visible, risk-managed, and included in incident playbooks; regulator scrutiny will follow where impact arises.

Practical Integration (today)

- Asset Inventory: Add AI/ML as formal assets; log vendors/models as well as endpoints.

- Risk Register: Document unique risks (poisoning, adversarial input, explainability gaps) for every AI/ML asset.

- Incident Readiness: Simulate failures or model drift in real-world drills and recovery plans.

- Evidence Mapping: Trace each model’s controls and risk reviews to ISO 27001 and NIS 2 obligations (examples: A.5.9, A.5.24, A.8.8).

| Expectation | Handling Requirement | ISO 27001/Annex A |
|---|---|---|
| AI runs core business process | Inventory, test in drills | A.5.9, A.5.24 |
| Model influences operations | Risk review, oversight assigned | A.5.2, A.5.14 |
| Vendor model in production | Supply chain, contract controls | A.5.19, A.5.20 |

Is NIS 2 likely to introduce direct, AI/ML-specific rules in upcoming updates?

Likely, yes. NIS 2 is evolving, and direct AI/ML governance is on the 2026 regulatory horizon. Current best practice is set to become the baseline as EU policy catches up with the rapid wave of AI deployment. ENISA, CEN/CENELEC, and ETSI have all published AI risk frameworks and cyber resilience guidance, notably referenced in sector-specific ENISA Threat Landscape reports.

Anticipated regulatory moves include:

- Formal AI/ML asset inventories: Requiring detail on provenance, ownership, and version.

- Incident and failure reporting: Mandated for “high-impact” model anomalies or security failures.

- Vendor transparency and SBOMs: Full disclosure of model lineage, third-party risk, and contractual audit rights.

- Explainability and audit logging: Ensuring model decisions can be retraced during incidents or regulator reviews.

- Forensic readiness and human oversight: Documenting correction/override logic and response workflows.

By the time formal amendments land, compliance leaders will already treat AI/ML as indispensable to risk and operational resilience.

ENISA’s latest sector guidance recommends treating AI/ML as “critical digital supply chain components” requiring the same rigour as legacy IT controls.

How should organisations synchronise NIS 2, the EU AI Act, and ISO 42001 for robust AI/ML governance?

Think of NIS 2, the EU AI Act, and ISO/IEC 42001 as interlocking layers for responsible AI/ML operations, covering cyber resilience, legal mandate, and management system respectively:

- NIS 2: Requires live asset and risk registers, systematic supply chain vetting, and regular incident testing for all operational technology, including AI/ML, that supports critical services.

- EU AI Act: Introduces risk-tiered classification for models (not just “high-risk” but also “limited” and “unacceptable”), dictates data governance, and codifies human oversight for sensitive AI deployments.

- ISO 42001: Provides the management blueprint, mapping how risk, controls, and leadership accountability flow through every AI lifecycle stage, dovetailing cyber and legal requirements.

| Standard | Focus | Core Activities |
|---|---|---|
| NIS 2 | Cyber/operational risk | Asset/risk register, incident/test drills |
| EU AI Act | Systemic/model governance | Classification, explainability, oversight |
| ISO 42001 | Management system | Unified risk/control, traceable evidence, SoA |

Aligning all three means documenting every AI/ML asset, linking it to risk and control mappings, and ensuring you can evidence coverage, whether the audit trigger is cyber, AI harm, or a supply chain concern.

Which AI-related risks most urgently demand controls under NIS 2?

AI and ML add surface area for both cyber and operational risk, all falling under NIS 2’s umbrella as soon as they become production-relevant:

- Poisoning/contamination: Targeted insertion of false or malicious training data, biasing outcomes.

- Adversarial manipulation: Crafted inputs aimed at tricking models into misclassification or flawed prediction.

- Model drift and decay: Loss of accuracy or reliability when production data diverges from training assumptions.

- Vendor/model supply chain exposure: Unvetted code or models, especially from third parties, can import hidden flaws.

- Opaque (“black box”) logic: Gaps in transparency make an incident’s root cause harder to prove, which is problematic for audits.

- Insider or privileged misuse: Poorly controlled model access raises fraud, sabotage, or data leakage threats.

| AI/ML Risk | NIS 2 / ISO Reference | Control Strategy |
|---|---|---|
| Data/model poisoning | 21.2a/f, A.8.8 | Audit input, threat model |
| Adversarial attack | 21.2a, ISO 42001 | Simulation/pen testing |
| Model drift/failure | 21.2f, ISO 42001 | Scheduled review/logging |
| Supply chain weakness | 21.2d/e, A.5.19 | SBOM/contractual attestation |
| Black box explainability | 21.2b, A.5.26 | Audit logs, SoA linkage |
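For the model drift row, a scheduled review needs some measurable signal. The sketch below uses the Population Stability Index (PSI), a common drift heuristic that is an assumption here rather than something NIS 2 or ISO 42001 names. The bin count and the floor used for empty bins are illustrative choices.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a baseline sample and a production sample of one feature.

    Bins are equal-width over the baseline range; production values outside
    that range are clipped into the first or last bin.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    low, high = min(expected), max(expected)
    if high == low:
        raise ValueError("baseline sample has no spread")
    width = (high - low) / bins

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            counts[min(max(index, 0), bins - 1)] += 1
        # Floor empty bins so the logarithm below stays defined.
        return [max(count / len(values), 1e-6) for count in counts]

    base, prod = proportions(expected), proportions(actual)
    return sum((p - b) * math.log(p / b) for b, p in zip(base, prod))
```

Thresholds for acting on PSI are a matter of convention and should be set per model. What matters for the audit trail is that the value, the threshold, and the resulting review decision are all logged.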

Strengthening AI/ML controls today isn’t just best practice; it’s what stands between a near miss and a public regulatory event.

How can organisations future-proof their compliance as rules for AI/ML evolve?

To future-proof, treat AI/ML asset traceability as a non-negotiable backbone, rather than a nice-to-have. This means:

- Catalogue all operational AI/ML assets and models, including vendor models and deployments.

- Document risk and control for each model, tied to a real, named risk owner.

- Vet all AI/ML suppliers with SBOMs/contracts, maintain evidence for review or incident response.

- Automate asset-risk-control-evidence chains in systems like ISMS.online, directly linking models to risk control libraries and audit logs.

- Conduct quarterly “AI incident” drills, testing what happens if input is poisoned, a model drifts, or a supplier changes upstream logic.

- Map every control to multiple rulebooks: for every live asset, show where it lands in NIS 2, the AI Act, and ISO/Annex A.

| Trigger event | Risk Registered | Control Mapped | Audit Evidence |
|---|---|---|---|
| Launch new model | Poisoning, drift | 21.2a, AI Act | Threat models, IR scripts |
| Vendor update | Supply chain risk | 21.2d/e | New SBOM/contract record |
| Detected failure | Black box/incident | 21.2b, ISO 42001 | Log, incident workflow |

Teams that link every asset, risk, and control in real time can turn regulation into competitive advantage, and respond to incidents with confidence, not panic.

What’s the best way to start aligning your team for NIS 2 and AI-ready controls?

Begin with a single, unified AI/ML asset risk and control inventory: list every model, endpoint, and vendor asset in use across your production environment. Then:

- Fill your asset register with every operational model and input/output.

- Assign owners and review cycles for every model risk.

- Collect all contracts, SBOMs, and test logs for each AI/ML supplier or third-party model.

- Run “traceability drills”: Pick an asset at random. Can your team map its risk, controls, and audit trail in under an hour?

- Schedule quarterly incident rehearsals: Involve both technical and business teams in model failure or adversarial testing.

- Stay on regulatory watch: Monitor ENISA, sector authorities, and regulatory consultations. Iterate and expand your inventory and incident scenarios as guidance shifts.

Demonstrating live asset-to-risk-to-control linkage for AI/ML is now a board-level trust signal and a sign of operational excellence.

To convert compliance into advantage, catalogue every AI/ML asset and connect it, from risk to control to evidence, in a living chain. When the auditor or regulator arrives, your proof is always up to date, and your team is never caught off guard.