Does Your AI Program Have Real Objectives—Or Just Empty Promises?

If your organisation’s responsible AI “objectives” are really just slogans, you’re not managing risk—you’re stockpiling it. The stakes for AI oversight are no longer hypothetical: boards, regulators, and customers now demand proof, not platitudes. ISO 42001 Annex A.6.1.2 isn’t window dressing. It’s the tripwire separating teams with system-wide, enforceable, auditable objectives from those still stuck on good intentions.

Words mean little. The record of your actions decides whether AI earns trust or triggers scrutiny.

Any ambiguity in your responsible AI objectives is financial and reputational dynamite. When you can’t prove your intent lives in daily practice, trust evaporates—and so does budget, time, and market opportunity. The cost of rebuilding confidence always dwarfs the cost of making objectives real from day one. This is why organisations leading in compliance don’t just publicise intent: they wire responsible AI objectives into the bones of their architecture, workflows, and dashboards.

Today’s frameworks destroy any refuge in “aspirational” language. Objectives that remain notional—or live only in a policy binder—are an engraved invitation for fines, exposures, or a compliance-fueled PR disaster. If your answers to scrutiny stop at “mission-aligned,” you’re not ready. You’re exposed.

Why Annex A.6.1.2 “Objectives for Responsible AI” Is a Board-Level Pressure Valve

Annex A.6.1.2 is not about “values alignment.” It is a technical and policy blueprint for building AI systems that can withstand legal discovery, audit, or headline-level public scrutiny. The requirement: responsible AI objectives must be explicit, assigned, measured, and continuously evidenced across the AI system’s lifecycle. This isn’t philosophy—it’s infrastructure (ISMS.online on ISO 42001 Annex A controls).

If you can’t produce objective-based evidence, your organization stands defenseless against scrutiny, sanction, or market distrust.

Boards and regulators are converging on the same question: can you show, not just claim, that your AI is governed by defensible objectives? Europe’s GDPR, NIS2, and DORA; the US’s CCPA and NYDFS; and the UK’s own regimes are aligned on one thing—trust requires chain-of-evidence, not trust-me language.

The Fast-Moving Risk of Staying Vague

If your compliance program lets objectives drift into generalities, three pressure points grow fast:

- Legal Repercussions: New laws insist objectives must be traceable. Fines are rising, and ignorance is no longer a defence.

- Reputation Risk: Every failure to evidence “responsible AI” becomes tomorrow’s headline.

- Operational Blindness: If objectives don’t reach practitioners—engineers, devs, or customer support—your AI operates on guesswork, not guardrails.

Every process without a tied objective is a trust gap. Every “aspiration” that can’t be mapped to control, log, or metric is a liability waiting for the wrong call or the wrong crisis.

What Happens If You Don’t Codify Responsible AI Objectives?

When responsible AI objectives are either missing or too generic, your organisation is walking a risk minefield. Good intentions on glossy PDFs don’t neutralise threats—or convince regulators, for that matter.

Objectives kept vague or generic are governance landmines—about to be stepped on when you least expect it.

Teams without real, living objectives fall into four predictable risk traps:

1. Invisible Bias

Unchecked model bias sneaks in and stays—if you haven’t built objectives (and controls) to measure and fix it. This isn’t just a technical lapse; it’s a regulatory and reputational time bomb.

2. Opaque Decision-Making

Decisions that can’t be mapped to objectives become black-box threats. Customers and partners demand to know why an AI outcome happened. If your system can’t trace its reasoning to an objective, you lose trust. Regulators see this as unfair—and unenforceable.

3. Diffused Ownership

Objectives without clear owners lead to responsibility no-man’s-land. When everyone owns a risk, no one does.

4. Failed Auditability

Auditors expect clear, measurable traces from objective to action—and from action back to objective. If you can’t surface this connection, your compliance program collapses under its own weight.

Jurisdictions with GDPR, DORA, NIS2, or imminent AI acts all now cite “demonstrable outcomes” as the proof point. Failure to codify means teams spend more time firefighting after the fact instead of building robust, trusted systems from the ground up.

Why Responsible AI Objectives Must Be More Than Philosophy

Stakeholders now want receipts. “Responsible AI” isn’t proof—unless it’s attached to metrics, ownership, and policy. A value isn’t operational until it leaves a mark on how your system acts, measures, and gets fixed in real time.

What Separates Real AI Objectives from Empty Claims

Responsible AI objectives are:

- Specific: Tied to distinct technical or business outcomes—not a blanket statement.

- Measurable: Developed as testable KPIs or audit data (e.g., “Loan approval bias below 2% annually”).

- Accountable: Linked directly to a documented owner—not just “the team.”

- Operationalised: Embedded in process, controls, and incident-handling.

A principle without an objective is a hope. An objective without a metric is a liability.

The alternative? Statements like “We support fairness” that immediately fold under audit or adversarial discovery. Only live objectives—“alive” in logs, dashboards, and controls—let you withstand scrutiny. Those are the only objectives regulators, insurers, and future investors will care about.

What’s at Stake When Objectives Are Foam, Not Foundation

If objectives can’t be traced from build to audit, the silence will cost your team both money and influence. Hidden bias, unexplained actions, and operational drift all multiply under the radar. As the industry shifts to an evidence-first, “responsible by default” standard, you can’t plead ignorance; you can only show outcomes.

Four Outcomes That Separate Real Responsible AI from Cosmetic Compliance

Annex A.6.1.2 draws a clear boundary between whiteboard ideals and boardroom realities. There are four testable outcomes that define a real responsible AI objective:

1. Fairness and Bias Mitigation

Aspiration isn’t enough. Your governance must include explicit bias checks and remediation:

- Example: “Approval gap for protected groups must not exceed 2% per quarter.”

- Evidence: Use regular statistical tests, tie every review to an owner, and log fixes.
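As a rough illustration of the example objective above, the quarterly check could be sketched as follows. This is a minimal sketch under stated assumptions, not a prescribed method: it reads the "2%" as a gap of two percentage points between the highest and lowest group approval rates, and the names `approval_gap`, `check_quarter`, and `MAX_GAP` are hypothetical. A real review would also apply a proper statistical test and account for sample size.

```python
from collections import defaultdict

MAX_GAP = 0.02  # assumption: "2%" means two percentage points


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def approval_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions)
    if not rates:
        return 0.0  # no decisions logged, so no measurable gap
    return max(rates.values()) - min(rates.values())


def check_quarter(decisions, owner):
    """Build a review record that ties the result to a named owner."""
    gap = approval_gap(decisions)
    return {"owner": owner, "gap": round(gap, 4), "breach": gap > MAX_GAP}
```

Storing the returned record alongside the review date and any remediation taken would give the owner-linked evidence trail the objective calls for.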

2. Explainability and Transparency

If your outputs can’t be explained, they’re on shaky ground. Modern regulators require:

- Logged explanations for every key decision.

- Flags on any anomalous or unexplained outcome (for mandatory review).

- Accessible audit trails—model cards, explainability dashboards, etc.

The EU AI Act, US frameworks, and UK rules all now expect tangible proof of explainability.
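The logging and flagging requirements listed above can be sketched in a few lines. This is an illustrative sketch only: the `DecisionRecord` type and its fields are assumptions, not part of ISO 42001 or any specific tool, and "explained" here simply means a non-empty explanation was logged.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DecisionRecord:
    decision_id: str
    outcome: str
    explanation: Optional[str] = None  # hypothetical logged explanation text


def needs_review(records):
    """Return IDs of decisions that have no logged explanation."""
    return [
        r.decision_id
        for r in records
        if not (r.explanation and r.explanation.strip())
    ]


def explanation_coverage(records):
    """Fraction of decisions with a logged explanation (1.0 if none logged)."""
    if not records:
        return 1.0
    explained = len(records) - len(needs_review(records))
    return explained / len(records)
```

The list returned by `needs_review` is the queue for mandatory review, and the coverage figure is the kind of number a dashboard or audit trail could report over time.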

3. Accountability and Ownership

Objectives must be mapped to living people, not phantom “champions.” Audit logs, incident reviews, and exceptions must always reference a real, named owner.

4. Tangible Societal and Organisational Value

Tie your objectives to measurable impact—on accessibility, sustainability, social good, or concrete business returns. Run regular impact reviews; surface positive change as well as areas for course correction.

Organisations that deliver on all four will find audits smoother, sales cycles faster, and internal morale measurably higher.

Codify the AI Lifecycle: Objectives Embedded, Audit-Proven

Genuine responsible AI objectives are not a “project phase” or a management convenience—they’re the DNA of the entire lifecycle. If your objectives can’t be traced through every system change, deployment, or real-world incident, you’re not audit-ready.

Embed Objectives the Whole Way Through

- Requirements: Make objectives the first checkpoint during requirements-gathering—before a single engineering or model decision.

- Architecture & Design: Bake objectives into every technical and workflow design, with clear links to controls like explainability tooling, bias detection methods, and incident escalation.

- Development & Testing: Automate collection of live, reviewable logs so stakeholders see compliance status in real time.

- Deployment & Production: Link all release and performance KPIs directly to stated objectives—backed by incident and exception logs.

- Audit & Alignment: Connect each objective to internal and external evidence, ensuring your governance can stand up to investigation or investor due diligence.

If an objective can’t be traced from requirements to live dashboard, it’s a liability masquerading as a value.
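One way to test that traceability claim is to check, for each objective, whether evidence exists at every lifecycle stage. The sketch below assumes a simple in-memory mapping of objective IDs to evidence references per stage; the stage names mirror the list above, while the data layout and the `traceability_gaps` function are hypothetical.

```python
# Stage names follow the lifecycle list above; the short keys are an assumption.
LIFECYCLE_STAGES = ("requirements", "design", "development", "deployment", "audit")


def traceability_gaps(evidence):
    """Map each objective ID to the lifecycle stages that lack evidence.

    `evidence` is a dict: objective ID -> {stage name -> list of evidence refs}.
    Objectives with evidence at every stage are left out of the result.
    """
    gaps = {}
    for objective_id, by_stage in evidence.items():
        missing = [stage for stage in LIFECYCLE_STAGES if not by_stage.get(stage)]
        if missing:
            gaps[objective_id] = missing
    return gaps
```

An empty result means every objective can be followed from requirements through to audit; anything else is a concrete list of gaps to close before an auditor finds them.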

The SMART Litmus Test

Every boardroom now expects SMART objectives:

- Specific: No ambiguity or weasel words.

- Measurable: Must yield pass/fail evidence.

- Achievable: Real targets, not wishful thinking.

- Relevant: Directly mapped to risk, mission, and regulatory demand.

- Time-bound: Set with review frequencies and clear deadlines.

A weak statement like “Reduce bias” isn’t enough. “Lower model-driven approval bias by 8% across all customer groups in 12 months, tracked by quarterly dashboard reviews tied to owner X”—that holds up under audit.
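Part of this litmus test can be automated. The sketch below checks only the mechanically verifiable parts: a metric and target (Measurable), a named owner, a link to a risk or requirement (Relevant), and a deadline with a review interval (Time-bound). Whether an objective is Specific or Achievable still needs human judgement. The `Objective` fields and the `smart_gaps` function are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Objective:
    statement: str
    metric: Optional[str] = None           # what is measured
    target: Optional[float] = None         # pass/fail threshold
    owner: Optional[str] = None            # named, accountable person
    risk_ref: Optional[str] = None         # hypothetical link to a risk or regulation
    deadline: Optional[date] = None
    review_interval_days: Optional[int] = None


def smart_gaps(obj):
    """Return the checks the objective fails, in a fixed order."""
    gaps = []
    if not obj.metric or obj.target is None:
        gaps.append("measurable")
    if not obj.owner:
        gaps.append("accountable")
    if not obj.risk_ref:
        gaps.append("relevant")
    if obj.deadline is None or not obj.review_interval_days:
        gaps.append("time-bound")
    return gaps
```

Run against the two statements above, “Reduce bias” with nothing else recorded fails every check, while the fuller version passes once its metric, owner, risk link, and dates are filled in.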

What Executives, Boards, and Regulators Expect—And How You Deliver

You’ll be asked for credentials, but you’ll be measured by live evidence. To supply it, you need to be able to answer four questions:

- Are your AI objectives mapped to controls and dashboards? If you lack traceability, you lack trust, and you’re at risk of failing any serious audit.

- Can you demonstrate ongoing KPI monitoring with live evidence, not just static reports? Real-time dashboards show oversight; stale reports invite scepticism.

- Are intervention points real control gates, or vestigial review steps? Can teams actually halt or remediate, or do “review steps” just tick compliance boxes?

- Do objective owners have authority to adapt processes in response to risk, not just put their name on a file?

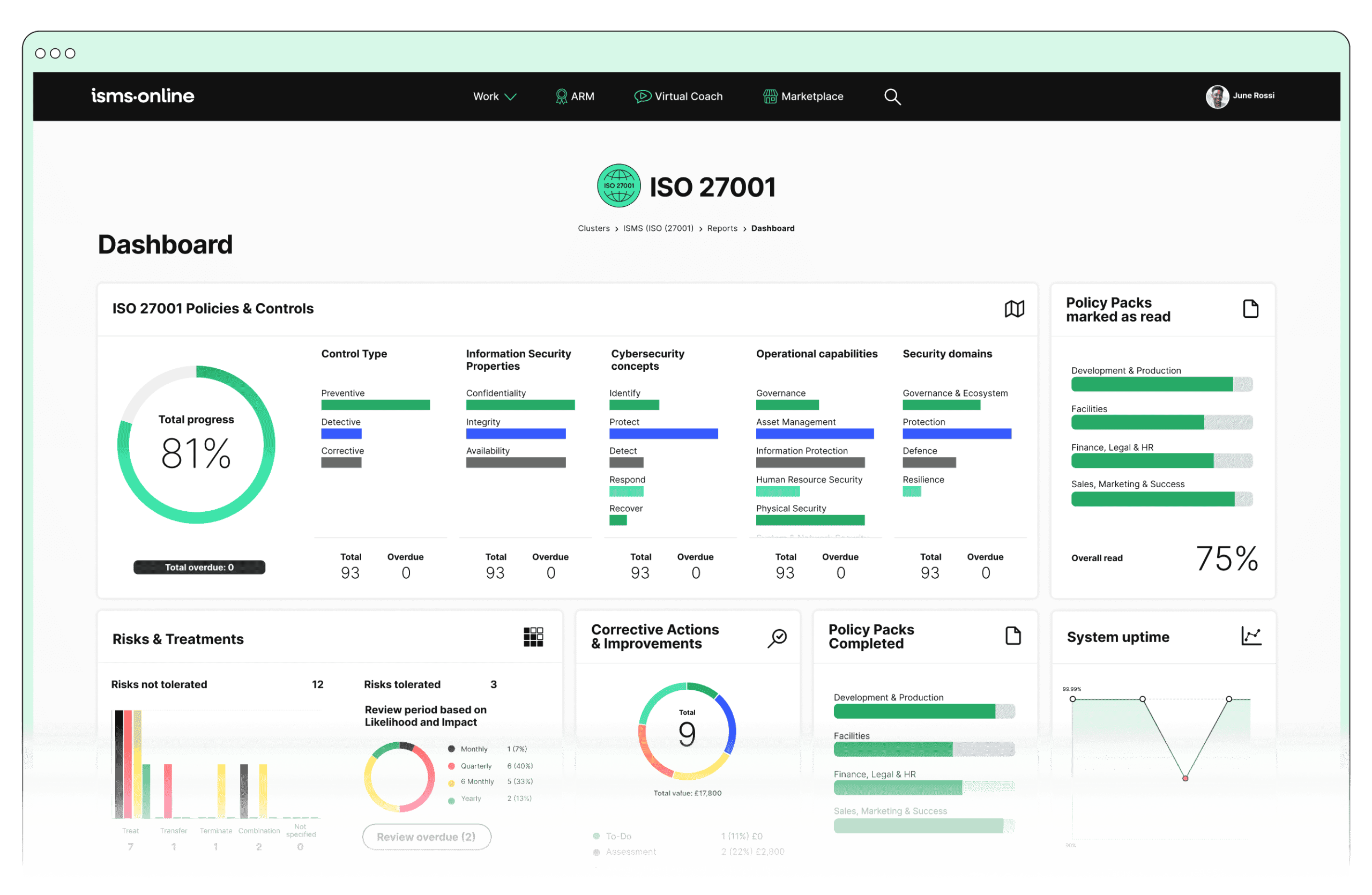

The ISMS.online platform arms compliance, cybersecurity, and technical teams with:

- Pre-built templates: Structured “from day zero,” stripping ambiguity from every objective, audit log, and control mapping.

- Objective-KPI-control dashboards: Live status and risk exposure—ready for internal review or instant external evidence.

- Closed-loop workflows: Link policy, risk, and technical plumbing, removing process drift or handoff ambiguities.

With this arsenal, you face investors, auditors, or regulators with confidence—evidence in hand, with no need to rely on “we tried” narratives.

Responsible Objectives: The Trust Engine for AI Compliance and Competitive Growth

When your responsible AI objectives are living facts, not paperwork, everything speeds up. Incidents close faster, audits get lighter, and major clients start trusting you more by default.

Firms able to show responsibility-by-design aren’t slowed down—they outpace the pack with every regulatory shift.

Firms that operationalize proof—rather than defend intent—reduce risk exposure, minimize compliance overhead, and accelerate procurement approval.

Organisations setting the pace:

- Translate international frameworks into visible KPIs and plain dashboards.

- Build trust by sharing live evidence with customers and partners.

- Slash both headline and hidden risks through early detection and rapid remediation.

- Show that compliance investments drive measurable value—from productivity to reputational lift.

If you treat responsible objectives as an operational asset—not a legal minimum—you win faster in every dimension.

Objectives as Defensible Asset: The End of “Optional” for Responsible AI

“Responsible AI” as afterthought is over. Annex A.6.1.2 is the robust starting line, aligning regulatory and market expectations with operational controls and measurable results. In a board environment that scrutinises every claim, a defensible trail from objective to outcome marks the difference between companies that merely comply—and those that thrive.

ISMS.online turns this requirement into opportunity. Your objectives become living, dynamic proof—tracked across the entire lifecycle, visible to every stakeholder, and refined with every review.

In a world where everyone claims responsibility, only you can prove it, line by line and action by action.

Build Responsible AI Objectives with ISMS.online Today

Back up every responsible AI claim with actionable, evidence-backed objectives—operationalised from blueprint to dashboard. ISMS.online equips you to automate control mapping, demonstrate live compliance, and adapt with speed as expectations shift. No more “just in case” reporting or patchwork afterthoughts: you lead with clarity, your board breathes easy, and markets recognise your progress before competitors have even started to draft theirs.

Turn responsible AI from a compliance checkbox into your most strategic asset. See ISMS.online in action.

Frequently Asked Questions

What moves ISO 42001 Annex A Control A.6.1.2 from theory into actionable leadership for responsible AI?

A.6.1.2 serves as the hard checkpoint between AI optimism and operational mastery, giving leaders an engine to scope risk, assign live responsibility, and match technical controls to documented ethical values. It’s no longer enough to claim “AI ethics”; this control translates those claims into quantifiable, owned objectives that shape real-world outcomes. As frameworks like the EU AI Act and DORA intensify scrutiny, and procurement teams interrogate proof rather than policy platitudes, A.6.1.2 becomes the backbone of business resilience, customer trust, and boardroom credibility.

Accountability isn't in the slide deck—it's carved into your systems, visible the moment an auditor asks where your controls live.

Why has the baseline shifted for compliance officers and CISOs?

- Investors and regulators now inspect, not just expect, proof of live risk controls tied to every AI deployment.

- Customers raise the bar: vague principles no longer protect supplier relationships or win high-impact contracts.

- Public trust swings on hard evidence: visible chain of ownership and incident-triggered improvement cycles are non-negotiable.

Mastery of A.6.1.2 equips your organization to spot design drift, escalate before damage compounds, and own the market narrative on digital responsibility.

Which responsible AI objectives does A.6.1.2 demand, and how are they framed to survive audit scrutiny?

Organizations must commit to objectives that lock in clarity, drive measurable outcomes, and assign unambiguous ownership. Each objective must bridge leadership’s ambitions with implementation teams’ realities—binding technical checkpoints, regulatory hooks, and clear accountability.

Hallmarks of robust objectives:

- Tangible policy anchors: Don’t settle for “be fair.” Instead, specify “demonstrate no outcome disparity above 2%; anomalous results must trigger escalation within the same quarter.”

- Full-lifecycle assignment: Each objective draws a line from initial requirements to end-of-life reviews, never drifting into ambiguity as models evolve or get deprecated.

- Live role mapping: Objectives routinely revisit ownership; reviews, version logs, and training records tether actions to real people, not faceless teams.

- Explicit regulatory fidelity: Controls are mapped to current frameworks—GDPR, sector codes, NIS 2, emerging local laws—translating compliance from checkboxes into board-ready defense.

Practical framing in action:

- “All model retrainings are accompanied by bias audits logged to the compliance dashboard and reviewed by technical and legal roles.”

- “User explanations for every AI output are generated within 24 hours and reviewed monthly; lapses are tracked and reported directly to executive risk.”

- “Data minimization checks, deletion logs, and evidence trails are reviewed every audit cycle, with non-conformance escalating to senior compliance.”

Objectives structured like these survive deep-dive interrogation, not just annual self-assessment.

How can your organization weave A.6.1.2 objectives into daily practice—without letting them fade into procedural afterthoughts?

Start with a cross-functional workgroup—compliance, tech leads, and business stakeholders. Drag policy language into sunlight: convert each value or legal requirement into an actionable system checkpoint. Document objectives directly within AI project artifacts; version-control every amendment.

Pin every objective to a single, named owner. Automate reminders, reviews, and assigned actions using platforms like ISMS.online, which surface audit trails in one place. No “loose docs” or spreadsheet sprawl.

Adopt dashboards that show live status—bias rates, explanation coverage, incident containment times. Schedule cyclical reviews, but also trigger immediate updates on detection of new threats, changes in law, or post-incident critique.

Checklist for systemic embedding:

- Each AI system features a mapped objective log and owner record.

- Every review or incident leaves an indelible mark—objectives get timestamped, rationale recorded, and changes tracked from origin to present.

- KPIs for fairness, safety, and transparency are visible both internally and to external stakeholders when needed.

Organizations relying on ad-hoc, siloed files will be left exposed. Integration is as much a process discipline as a technology choice.

What universal AI objectives reliably pass auditor and buyer scrutiny—and how do leading organizations maintain them?

Certain objectives have become de facto gold standards, vettable by regulators across sectors:

| Objective Type | Live Example | Evidence Mechanism |
|---|---|---|
| Bias Intervention | “Flag and remediate outcome bias >2% by next quarterly cycle.” | Bias audit logs, escalation records |
| Explanation Coverage | “≥98% of major user decisions have retrievable explanations within 2 days.” | Explanation logs, executive review |
| Ownership & Response | “Each production model is assigned to a role with incident playbook and retraining authority.” | Assignment records, training outcomes, incident playbooks |
| Privacy Control | “All personal data use, deletion, and override logs are auditable and reviewed biannually.” | Privacy audit logs, deletion certificates |
| Safety/Reliability | “Performance dips >5% trigger an automated rollback and board notification within 48 hours.” | Ops dashboards, board review minutes |
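To make the Safety/Reliability row concrete, a rollback trigger could be sketched as below. This is a hedged illustration: it assumes the "5%" is a relative drop against a recorded baseline score (an absolute drop is an equally plausible reading), and `relative_drop` and `should_rollback` are hypothetical names, not part of any platform API.

```python
MAX_RELATIVE_DROP = 0.05  # assumption: "5%" is relative to the baseline score


def relative_drop(baseline, current):
    """Fractional drop of the current score below the baseline (0 if improved)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return max(0.0, (baseline - current) / baseline)


def should_rollback(baseline, current, max_drop=MAX_RELATIVE_DROP):
    """True when performance has dipped by more than the allowed fraction."""
    return relative_drop(baseline, current) > max_drop
```

In practice the trigger would also write a timestamped record and start the notification clock, so that the 48-hour commitment in the objective can itself be evidenced.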

Maintenance discipline—real-world practices:

- Quarterly auto-reviews for every objective; ISMS.online handles automated notifications and evidence capture.

- Immediate feedback cycles: significant incidents or new laws force a full objective and owner recalibration.

- Integrated governance portfolio: objectives are tracked not only for compliance but for executive and market optics—proving resilience, not just readiness.

How are ethics, transparency, and safety transformed from value statements into operational controls under A.6.1.2?

Ethics comes alive only when every promise leaves a system trace. This control demands records, drill logs, and role-based auditing for values once sequestered in board reports. Examples:

- Bias detection: Scheduled bias scans, root-cause escalations, action audits, and signed logs back the claim of fairness.

- Transparency: Model cards, user-facing explanation tools, and stakeholder challenge logs are built into operational routines.

- Safety: Live monitoring, failover tests, and rigorous rollback rehearsals are mapped to incident response, never left to hope.

- Versioned accountability: When a regulator asks “Who knew, and when?” every update, handover, and playbook revision is instantly retrievable.

If your policies are locked in version control, but daily evidence withers in endless archives, your systems remain theater—not assurance.

What specific evidence wins compliance, audit, and buyer validation for A.6.1.2?

Auditors (and increasingly, buyers) look for a living system of proof—not retrospective patches. You’ll need:

- Versioned, policy-mapped objectives: Each change linked to a decision record and mapped back to a regulatory requirement.

- Chain of custody: Bias tests, privacy audits, explanation logs, owner assignments—all signed, timestamped, and woven through the AI’s lifecycle.

- Continuous monitoring artifacts: Live dashboards that reflect incident trends, explanation success rates, and triggered escalations.

- Incident correlation: Audit logs showing how near-misses or breaches update objectives and owners in real time.

- Organizational learning: Executive review minutes, improvement records, retraining documentation, and playbook revisions that display a culture of ongoing adaptation.
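The "chain of custody" idea above can be illustrated with a hash-chained evidence log, where each entry commits to the one before it. This is a sketch of tamper evidence only, using Python's standard `hashlib` and `json` modules; it is not a digital signature scheme, and the entry fields shown in the usage are hypothetical. Entries must be JSON-serialisable.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry in the chain


def _digest(prev_hash, entry):
    """SHA-256 over the previous hash plus the entry, serialised deterministically."""
    payload = json.dumps({"prev": prev_hash, "entry": entry}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, entry):
    """Append an evidence entry that is chained to the previous record."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {"prev": prev_hash, "entry": entry, "hash": _digest(prev_hash, entry)}
    log.append(record)
    return record


def verify(log):
    """Return True only if no record has been altered, removed, or reordered."""
    prev_hash = GENESIS
    for record in log:
        if record["prev"] != prev_hash:
            return False
        if _digest(prev_hash, record["entry"]) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True
```

For example, appending `{"type": "bias_test", "owner": "jane.doe", "timestamp": "2025-01-15T09:00:00Z"}` and later editing the owner field in place would make `verify` return `False`, which is the property an auditor cares about.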

Platforms like ISMS.online consolidate these measures—unifying evidence, automating version updates, and translating improvement from compliance burden into brand asset.

Market trust is engineered at the intersection of relentless evidence and human oversight—your system doesn’t just pass audit, it sets the standard buyers aspire to.

How do top organizations measure, audit, and evolve A.6.1.2 accountability over time?

- Quantitative progress tracking: Metrics for bias detection rates, incident response velocity, and user explanation success are benchmarked quarterly, not annually.

- Adversarial and blind audits: Engage both internal and independent reviewers; challenge controls for resilience, not just documentation.

- Incident feedback loops: Each anomaly, law change, or strategic pivot iteratively reshapes objectives, tightening your defense posture and aligning to fresh threats.

- Cross-role debate: Force engagement across compliance, technical, legal, and business leadership to surface holes, reveal unconscious risk, and push for stronger benchmarks.

- Transparent dashboards: Let executive and operational stakeholders view evidence and progress—celebrate improvement and learn from exposed vulnerabilities.

Organizations that treat A.6.1.2 as a dynamic framework, never a fixed requirement, aren’t just playing catch-up; they’re leading by example, driving confidence through visible, rapid, and honest adaptation.

Real resilience emerges when your objectives evolve before your threats do—confident leadership is built where auditable evidence and operational oversight move faster than risk.