Why Your Operation’s Reputation Hangs on ISO 42001 Annex A Control A.6.2.6

For companies embedding AI into their core operations, visibility and trust aren’t slogans; they’re survival. ISO 42001 Annex A Control A.6.2.6, “AI-system operation and monitoring,” is the control that defines whether your AI is defensible, traceable, and bulletproof when the board, regulators, or your toughest customer demand answers. You can invest in models, cloud, and compliance documentation, but the moment you lose sight of live AI behaviour, you lose control of your organisation’s story.

The costliest failures are the ones you didn’t see coming—until users or regulators tell your board before you do.

Any security or compliance veteran knows: operational risks don’t wait for a gap analysis; they mutate. Classic infrastructure health checks catch what’s easy: is the server on, is latency inside a healthy range, is disk usage sane? AI, though, is its own class of creature. You face drift, unintended bias, hallucinated results, adversarial threats, and silent decay: failure modes that flip from theoretical to headlines in a single oversight.

If your organisation treats AI monitoring as an IT afterthought, confusing “uptime” with “assurance”, you’re gambling with trust, compliance, and reputation. Control A.6.2.6 mandates you move past comfort metrics and show live evidence that your AI is fair and explainable, that its failures are caught early, and that your learning process is continuous.

Why This Control Is Now a Trust Differentiator

- Anyone can claim robust security. Only those who can prove it, at speed, with logs, evidence, and improvement trails, win trust.

- Boards, customers, and regulators demand not just forensic audits after the fact, but real-time evidence of control: “Show us the metrics, escalation history, and learning actions.”

- Silent failure isn’t silent for long: one missed bias report or anomaly is enough to trigger regulatory penalties or lose vital client relationships.

What Sets AI-System Monitoring Apart From IT Monitoring?

Ask your best network engineer what success looks like; you’ll hear uptime, p99 latency, or disk utilisation. Now, ask your compliance officer or CEO how they know your AI is safe, fair, and trustworthy for every user, every minute, every market. Traditional IT metrics don’t touch the risks, real-world or reputational, that only AI-specific monitoring exposes.

You can’t prevent what you don’t watch—and what you watch for AI is rarely what IT expects.

The Distinctive Tasks of AI Monitoring

- Beyond Health Checks: Uptime is binary. AI safety is never binary. AI systems can be up but silently delivering skewed, unfair, or dangerous outcomes.

- Bias and Drift Are Moving Targets: Model performance shifts gradually or instantly, and discrimination can hide almost invisibly inside familiar workflows.

- Explainability Gaps Cost Real Money: When clients or regulators demand, “Show us why this happened,” the data must be ready: traceable, audit-logged, and human-reviewable.

The Layers You Can’t Ignore

| Monitoring Target | Example Metric | Why It’s Essential |
|---|---|---|
| System Uptime | API response monitoring | Baseline, not enough |
| Model Health | Accuracy, drift, input outliers | Detects decay or error at ground level |
| Fairness Metrics | Equal error rate, bias flags | Spots discrimination before it erodes trust |
| Explainability | Local explanations, flagged cases | Satisfies legal, customer, and audit requirements |

Even if your dashboards look normal, without dedicated AI outcome and fairness checks, you’re drifting toward compliance, PR, or audit disaster.
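To make the fairness layer concrete, here is a minimal sketch of an error-rate comparison across groups, one simple reading of the "equal error rate" metric. The group labels, the sample records, and the `MAX_GAP` threshold are illustrative assumptions, not values taken from ISO 42001.

```python
from collections import defaultdict

# Hypothetical tolerance: the largest acceptable difference in error rate
# between any two groups. Choose this from your own risk assessment.
MAX_GAP = 0.10


def error_rate_by_group(records):
    """Return {group: error_rate} for (group, y_true, y_pred) records."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        if y_true != y_pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def error_rate_gap(records):
    """Difference between the worst and best per-group error rates."""
    rates = error_rate_by_group(records)
    return max(rates.values()) - min(rates.values())


# Illustrative data only: (group, actual outcome, predicted outcome).
sample = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 1), ("B", 1, 1), ("B", 0, 0),
]

rates = error_rate_by_group(sample)
gap = error_rate_gap(sample)
bias_flag = gap > MAX_GAP  # raise a bias flag when the gap exceeds tolerance
```

In this sample, group B is wrong twice as often as group A, so the flag is raised even though an overall accuracy dashboard could still look acceptable.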

Behind Every Alert: Ownership, Response, and the Cost of Delay

AI system failures are rarely pure tech. Ownership confusion, broken escalation paths, or “wait and see” responses do more damage than an outage ever did. ISO 42001 Control A.6.2.6 spells out clear accountability. Your monitoring chain is only as strong as your fastest escalation—and the quality of your documentation.

An undelivered or uncleared alert isn’t a minor slip; it can turn a one-user glitch into tomorrow’s headline problem.

How to Make Your Response Chain Unbreakable

- Map precise owners: Assign a named owner for every monitoring stream. No exceptions, no fudge.

- Automate urgency: Escalation should be time-bound and workflow-triggered, never reliant on “hopeful” emails.

- Document like your licence depends on it: Everything from incidents to postmortems—all traceable to the source.

- Prove your feedback loop: Show not just what happened, but how you fixed it and what you changed operationally.

Auditors want to see a clean, full chain from detection to remediation. And your leadership wants assurance that mistakes become lessons and safeguards, not public failures.
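A time-bound escalation rule can be as simple as a ladder of deadlines. The sketch below is one possible shape, assuming hypothetical role names and time limits; your own ladder should come from your documented service levels.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    alert_id: str
    raised_at: datetime
    acknowledged: bool = False


# Hypothetical ladder: (time since the alert was raised, role to notify).
ESCALATION_LADDER = [
    (timedelta(minutes=0), "model-owner"),
    (timedelta(minutes=30), "ai-risk-lead"),
    (timedelta(hours=2), "compliance-officer"),
]


def roles_to_notify(alert, now):
    """Return every role that should have been notified by `now`.

    An acknowledged alert stops escalating; an unacknowledged one keeps
    climbing the ladder as time passes.
    """
    if alert.acknowledged:
        return []
    age = now - alert.raised_at
    return [role for delay, role in ESCALATION_LADDER if age >= delay]


raised = datetime(2025, 1, 1, 9, 0)
alert = Alert("drift-001", raised_at=raised)
notified = roles_to_notify(alert, raised + timedelta(minutes=45))
```

Forty-five minutes after an unacknowledged alert, both the first and second rungs have fired. Because the ladder is data rather than habit, it can be reviewed and evidenced like any other control.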

AI Failures Are Organisational Failures: Support, Escalation, and the Trust Premium

The truth most organisations avoid is this: users, suppliers, and partners can—and do—find issues your monitoring misses. The art is not in suppressing complaints, but in capturing signals the minute they arise, routing them to accountable owners, and proving every handoff is timely and effective.

Trust isn’t destroyed by the first incident, but by poor follow-up and unresolved complaints that fester in inboxes.

The Core of Real-Time Support and Escalation

- Zero-loss signal logging: Every issue, complaint, or anomaly is logged, tracked, and visible for audit—no exceptions.

- Escalation by design, not accident: Time-based, automated workflows route the right issue to the right owner.

- Service level transparency: Deadlines for incident review and fix are published and adhered to, not negotiated ad hoc.

- Lifecycle audit trails: You can reconstruct the story behind every reported episode, from opening through fix, to process change.

Support is not a bolt-on; it’s an explicit compliance and reputational buffer in the eyes of ISO, regulators, and customers.

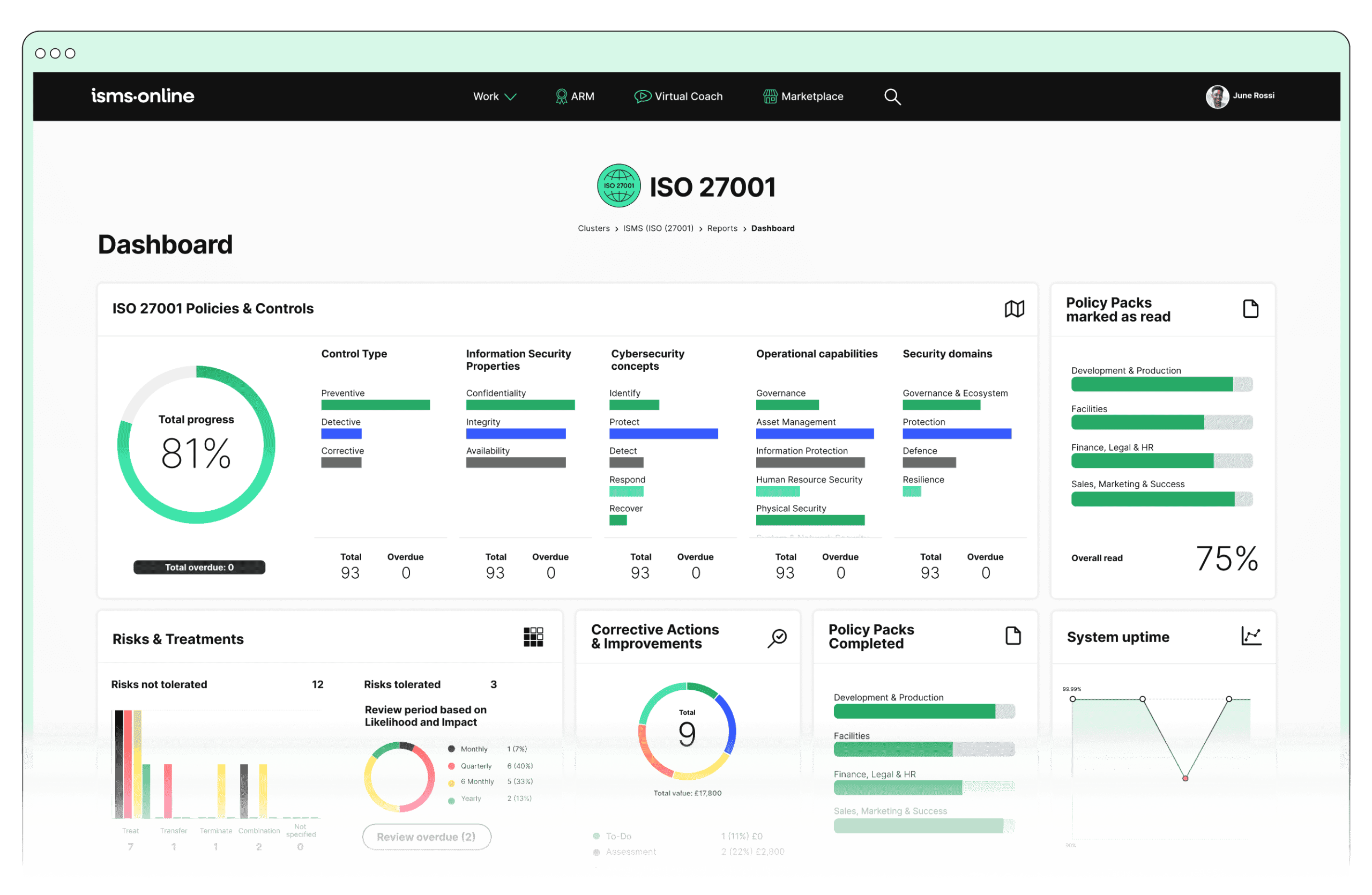

Manage all your compliance, all in one place

ISMS.online supports over 100 standards and regulations, giving you a single platform for all your compliance needs.

Fairness and Transparency Must Be Lived, Not Laminated

In security, “proof” always beats “promise.” The same is now true for AI fairness. Annex A.6.2.6 isn’t an invitation for policies or training wheels—it’s a requirement for live, ongoing bias and explainability checks, with a mandatory route for complaints to become verified fixes.

Fairness policies without continuous evidence are worse than nothing—they convince no one and fail audits instantly.

The Evidence-Driven Fairness Toolkit

- Bias dashboards: Live, always-on anomaly and discrimination monitors, tailored to high-risk cohorts.

- Explainable outputs: Storage of feature-level or rationale chains for suspect decisions—traceable for audit or user challenge.

- End-to-end ticketing: You must show, not assert, that user or stakeholder input was logged, routed, remediated, and fed back into a learning loop.

When fairness and explainability are present only on paper, you haven’t reduced your compliance risk—you’ve branded your operation as untrustworthy.

Why Classic IT Incident Response Fails for AI—and How to Survive Scrutiny

Generic “runbook” approaches to incident management break down fast when the threat is a hallucinating model or a rapidly shifting pattern of user harm. AI brings problems of speed, opacity, and complexity that challenge IT-centric plans.

A real test of readiness is when your compliance, ops, and AI specialists can drill a scenario, learn, and adapt—under stress, not with hindsight.

Building Real AI Crisis Readiness

- Schedule mixed-team incident drills: Cover bias, explainability, and harmful-outcome scenarios, not just outages.

- Full documentation, always: No fix or change without a chain of evidence, root cause, and process learning.

- Monthly (or faster) reviews: If you’re not learning from every signal, you’re falling behind on both compliance and operational excellence.

Auditors, customers, and regulators all want to see not perfection, but readiness and an ever-improving posture under pressure.

Turn Monitoring and Logging Into a Strategic Asset, Not Dead Paperwork

The best logs aren’t badges—they’re weapons. Logs must prove uptime, anomaly remediation, bias control, and learning—not feed a dead archive. Strategic operators review, share, and act on log data, turning compliance into measured progress.

If logs exist only to be archived, you’re not compliant—you’re vulnerable.

How to Drive Real Value

- Review logs at least quarterly, preferably monthly: Every alert must lead to an action item.

- Socialise lessons learned: Summaries must go to all relevant teams, not just compliance or IT.

- Link logs to KPIs: Show leadership how problem-finding drives lower risk and greater performance, not just audit-readiness.

Annex A.6.2.6 is fulfilled not by form-filling, but by visible, evidence-driven improvement that elevates your operation’s standing.

Win Confidence for Your AI-System Operations and Reputation—Start With ISMS.online

No compliance function can afford “maybes”—especially when auditors and boards are raising the bar. ISMS.online brings all the framework, tools, and evidence chains demanded by ISO 42001 Annex A Control A.6.2.6 into one unified ecosystem.

With ISMS.online, your company’s AI operation and monitoring is provably robust: every metric, complaint, and fix is logged, trended, and instantly available for scrutiny or process learning. You gain defensible compliance, live trust curves, and a support infrastructure that doesn’t let incidents sit or complaints vanish. When the spotlight hits, you demonstrate operational maturity, evidence-backed progress, and a brand reputation that earns trust—not just in audits but in the marketplace.

If your most important customer, toughest auditor, or sceptical stakeholder called for evidence right now, could you guarantee that every AI-system issue has been tracked, resolved, and woven into your company’s improvement fabric? With ISMS.online as your operating backbone, you don’t just answer “yes.” You show the proof—on demand, every time.

Every signal matters. Every lesson counts. Operational strength and trust are built, monitored, and continuously improved—by your actions, not your intentions.

Make ISMS.online part of your compliance backbone and position your organisation as a leader on AI-system control, trust, and future-proof resilience.

Frequently Asked Questions

Why is explicit accountability essential for AI system monitoring under ISO 42001 A.6.2.6?

Explicit accountability transforms AI system oversight from “shared” wishful thinking to verifiable, audit-ready security. ISO 42001 A.6.2.6 compels you to tie every monitoring and intervention step—from model performance checks to incident remediation—to a named person or defined role, banishing the comfort of collective ambiguity. If a complaint surfaces or drift slips by undetected, regulators and stakeholders demand not just policies but clear evidence showing who acted, who missed, and who made final decisions.

When accountability is structured, minor oversights have fewer places to hide, and emergent threats are surfaced fast. Your logs, assignment schedules, and escalation records become the proof, mapping each action and handoff to a timestamped owner. The days of “the process owned itself” excuses are over; now, every incident trail leads to the individual or team with live oversight—and that forensic clarity becomes your defence, not your liability.

The invisible hand of ownership is a myth—true oversight shows its fingerprints on every system log and action check.

Platforms like ISMS.online hardwire this into daily operations: any flag, review, or escalation is instantly assigned, logged, and surfaced in real time. The result? You move risk out of the shadows and into open air, making both compliance and organisational trust provable rather than promised.

Core requirements for role-based accountability

- Each monitoring, escalation, or root cause review is mapped to a specific person or defined role.

- Assignment charts and evidence logs are export-ready for audit or regulatory scrutiny.

- Escalation trails, sign-offs, and remediation actions are visible at every handover point.

- Audit pathways close the plausibility gap, ensuring no incident is “nobody’s” responsibility.

Companies that outpace regulatory pressure have accountability embedded at every layer—turning oversight from a compliance tax into an operational habit that builds trust.
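One lightweight way to check that no monitoring stream is ownerless is a coverage test over your assignment chart. This is a sketch only: the stream names and role names below are hypothetical placeholders for whatever your own register contains.

```python
# Hypothetical list of monitoring streams that must each have an owner.
MONITORING_STREAMS = [
    "accuracy",
    "drift",
    "fairness",
    "explainability",
    "user-complaints",
]

# Hypothetical assignment chart: stream -> named person or defined role.
OWNERS = {
    "accuracy": "ml-ops-lead",
    "drift": "ml-ops-lead",
    "fairness": "ai-risk-lead",
    "explainability": "",  # blank entries count as unowned
}


def unowned_streams(streams, owners):
    """Return the streams with no owner, or with a blank owner entry."""
    return [s for s in streams if not owners.get(s, "").strip()]


gaps = unowned_streams(MONITORING_STREAMS, OWNERS)
```

Here the check surfaces two gaps: a blank explainability owner and a complaints stream missing from the chart entirely. Running a check like this on a schedule turns "nobody’s responsibility" into a finding you catch before an auditor does.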

How should organisations prove that AI monitoring is active and effective—not just a theoretical claim?

Active AI monitoring proves itself in daily operation, not policy binders. ISO 42001 A.6.2.6 demands evidence you’re not just watching but detecting, acting, and closing the loop—moment by moment. This means merging real-time system logs with role-linked alerts, so that every flagged anomaly, model drift, or bias event automatically enters a defined escalation path, visible to the right person, with each action documented.

Stakeholders and auditors now expect monitoring proof to go beyond tech uptime and accuracy. They want clear records of bias scans, user feedback, explainability checks, and workflows that show not just that a policy exists, but that it drives rapid, accountable action. If a drift anomaly is flagged, the trail must show who was notified, what they did, and when it was resolved.

Monitoring that can’t surface its own record is monitoring that never really happened.

ISMS.online operationalises this layer: model performance, bias incidents, and support tickets all stream to a central dashboard, with event records and assignment logs ready to share with auditors or regulators at a moment’s notice.

Building operational monitoring evidence

- Automated process logs linked to named action owners at every event point.

- Dashboards that reveal live system health and incident status, accessible by compliance and business leaders.

- Escalation chains and feedback channels integrating technical, business, and ethical signals.

- Exportable, immutable audit trails showing lifecycle steps from issue to fix.

When you can surface a complete, real-world monitoring story, rather than promise theoretical oversight, you move compliance from bureaucratic burden to board-worthy asset.
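For the audit-trail requirement, one common technique is a hash chain, where each entry commits to the one before it. The sketch below makes a log tamper-evident rather than truly immutable, and it is an illustration under assumed field names, not a description of how any particular platform stores evidence.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, event):
    """Hash the previous entry's hash together with this event's content."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(chain, event):
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({
        "event": event,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, event),
    })
    return chain


def verify_chain(chain):
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical lifecycle of one issue, from detection to fix.
log = []
append_entry(log, {"step": "detected", "owner": "ml-ops-lead", "at": "2025-01-01T09:00Z"})
append_entry(log, {"step": "assigned", "owner": "ai-risk-lead", "at": "2025-01-01T09:10Z"})
append_entry(log, {"step": "resolved", "owner": "ai-risk-lead", "at": "2025-01-01T15:30Z"})
intact = verify_chain(log)
```

If anyone later edits an earlier entry, `verify_chain` returns `False`, which is the property an exportable trail needs when a regulator asks whether the record has been changed since the event.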

What is the optimal command chain for repairing, updating, and supporting production AI under ISO 42001 A.6.2.6?

Production AI resilience hinges on a command chain where everything is mapped, assigned, and auditable—not strung together by best guesses, or lost in inboxes.

When a drift event, user complaint, or bias alert hits, predefined escalation triggers route the issue to the correct technical owner, aligning with support or compliance specialists as needed (not whichever engineer is “on”). Repairs and patches are carried out by role-designated individuals, with every stage—diagnosis, change, validation, sign-off—timestamped and reviewed. No step can be skipped, and each handoff is recorded in case of future scrutiny.

This structure isn’t just about efficiency. It’s failure-proofing. Most AI disasters trace back to neglected handoffs, missed assignment, or informal patching far from the eyes of compliance. With the right command chain, every incident becomes an opportunity for defensibility and operational improvement.

Every unchecked handover creates a blind spot where root cause and accountability evaporate.

ISMS.online supports this workflow: detection triggers automatic logging, routing, and live status updates, ensuring the repair cycle is not only faster but entirely reviewable by regulators or third-party assessors.

Essential elements of a production support command chain

- Each incident (technical, ethical, or operational) triggers a unique, mapped response path—no improvisation.

- Incident logs, repair steps, and validation cycles tie directly to named participants.

- Service-level commitments (SLA adherence, escalation timelines) are tracked by system—not memory.

- Root cause reviews and lessons learned are captured in the same evidence base for audit and improvement.

When operational defence is systematised, you weather regulator challenge, partner audit, or even public incidents with proof—not spin.

What new detection and logging procedures must organisations implement for AI bias, drift, and explainability?

Bias, drift, and explainability gaps expose you to risks that often escape classic IT surveillance. ISO 42001 A.6.2.6 requires rigorous, methodical detection and logging steps, each documented and mapped for review.

For bias and drift, automate scheduled scans with statistical thresholds defined for outcomes—flag events not just on overall accuracy loss, but on group fairness metrics or feature distribution shifts. Events crossing these thresholds must be logged, assigning the case to a named reviewer who is accountable for diagnosis and root cause exploration. An explainability failure—where an outcome cannot be fully justified to auditors, users, or regulators—follows the same workflow: reported, documented, triaged, and tethered to a responsible owner until resolved.

User complaints, third-party concerns, and anomaly alerts should feed into a unified intake channel (dashboard, web form, or monitored email), where they’re captured and assigned within minutes, never lost or ignored.

An unlogged bias breach or unexplained model output is a lawsuit waiting to happen.

ISMS.online embeds these controls: bias, drift, and explainability events are tracked and linked directly to owners, auto-alerting both technical and compliance roles. The audit trail is not just for handshake moments—it’s live insurance.

Key detection and logging steps

- Automated, threshold-driven scans with immediate flagging for bias/drift anomalies.

- Real-time case assignment for every flagged event, with reviewer and status recorded.

- Central channel for logging complaints and out-of-band explainability issues.

- Resolution, root cause, and sign-off data captured for each logged item.

This approach not only plugs gaps—it positions your team for audit, resilience, and genuine learning, not repeat mistakes.
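As a sketch of a threshold-driven scan, the example below uses the Population Stability Index (PSI), one widely used measure of feature distribution shift. The standard does not prescribe PSI. The 0.2 alert level is a common rule of thumb treated here as an assumption, and the feature name, bin counts, and reviewer role are hypothetical.

```python
import math

# Assumption: 0.2 is a frequently quoted rule-of-thumb alert level for PSI.
PSI_ALERT_THRESHOLD = 0.2


def population_stability_index(baseline_counts, live_counts, eps=1e-6):
    """PSI between two histograms that share the same bins."""
    if len(baseline_counts) != len(live_counts):
        raise ValueError("baseline and live histograms must use the same bins")
    base_total = sum(baseline_counts)
    live_total = sum(live_counts)
    score = 0.0
    for base, live in zip(baseline_counts, live_counts):
        # Floor each share at eps so empty bins do not cause log(0).
        base_share = max(base / base_total, eps)
        live_share = max(live / live_total, eps)
        score += (live_share - base_share) * math.log(live_share / base_share)
    return score


def drift_event(feature, baseline_counts, live_counts, reviewer):
    """Return a loggable event assigned to a reviewer, or None if stable."""
    score = population_stability_index(baseline_counts, live_counts)
    if score <= PSI_ALERT_THRESHOLD:
        return None
    return {
        "feature": feature,
        "psi": round(score, 4),
        "reviewer": reviewer,
        "status": "open",
    }


event = drift_event(
    "applicant_age_band",
    baseline_counts=[25, 25, 25, 25],
    live_counts=[5, 15, 30, 50],
    reviewer="ml-ops-lead",
)
```

The returned record carries the reviewer and status from the moment it is created, so a flagged event never exists without an accountable owner.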

Why do AI incidents require fundamentally different response strategies than traditional IT events?

AI incidents don’t just crash code. They threaten ethics, legal standing, and public trust, areas classic IT incident response was never built to address. Standard IT playbooks miss the human and regulatory complexity of a model that quietly biases against a group, loses explainability, or evolves outside compliance’s reach (the “shadow AI” problem).

AI-specific events must be classified for their impact across business, regulatory, ethical, and technical axes. A dedicated escalation framework sends incidents to hybrid teams: engineers, privacy experts, risk officers, and—often—legal or ethics advisors for genuinely cross-border crises. Each response is documented at every handoff, creating an unbroken evidence thread from first detection to board notification and post-mortem reform.

Platforms like ISMS.online automate this sequence, embedding customisable, AI-centric playbooks and audit-first evidence capture, so you avoid the chaos that comes from merging technical-only and operational-only responses.

Classic uptime and restore doesn’t save your reputation when explainability fails or bias emerges.

This all-in approach ensures that not only is the issue contained, but every lesson cycles back for process improvement—turning each new edge case into a risk-reduction asset.

What makes AI incidents truly different?

- Incidents cross legal, ethical, technical, and reputational boundaries—demanding cross-disciplinary action, not just engineering fixes.

- Hybrid response teams are assigned on detection, with roles and actions recorded in real time.

- Documentation is protected from edit or loss, supporting both regulatory defence and system learning.

- Lessons are fed directly into future prevention, not banished to post-mortem PDFs.

Organisations relying on old-school IT incident models risk overlooking tomorrow’s biggest failures—by building AI-ready response, you secure both the technical and ethical future of your operation.
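Classifying an incident by impact axis and assembling the matching hybrid team can be expressed as a small lookup. The axis names follow the four axes named in this section; the team names are hypothetical, and a real routing table would come from your own escalation framework.

```python
# Hypothetical mapping from impact axis to the team that must be involved.
AXIS_TEAMS = {
    "technical": "ml-engineering",
    "regulatory": "compliance",
    "ethical": "ethics-advisor",
    "business": "risk-office",
}


def response_team(impact_axes):
    """Return the sorted, de-duplicated teams for an incident's impact axes."""
    unknown = set(impact_axes) - set(AXIS_TEAMS)
    if unknown:
        # Refuse to route silently when a classification is not recognised.
        raise ValueError(f"unknown impact axes: {sorted(unknown)}")
    return sorted({AXIS_TEAMS[axis] for axis in impact_axes})


# A bias incident classified as both ethical and technical.
team = response_team(["ethical", "technical"])
```

Rejecting unknown axes, rather than ignoring them, keeps a misclassified incident from quietly defaulting to an engineering-only response.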

How does ISMS.online deliver real-time, irrefutable evidence of ISO 42001 A.6.2.6 compliance?

ISMS.online takes compliance out of the realm of paperwork and converts it into ongoing, actionable proof. Automated logging of every control—bias checks, system updates, incident escalations—links directly to real people, dates, and outcomes. Live dashboards visualise your AI landscape for compliance, with audit trails exportable at a click for regulator or partner review. The platform integrates support ticketing, escalation logic, monitoring, and root cause analysis—eliminating scattered evidence and manual reporting.

When challenged, your team answers not with intent but with clear records: who detected, who assigned, who fixed, and who closed. This readiness isn’t just for audits—it builds partner and leadership confidence and sets your company ahead in a market where reputational hits occur as fast as technical ones.

Evidence isn’t a stack of reports—real-time logging, audit dashboards, and role-based trails create living proof your AI governance is more than talk. That’s the new contract with your customers and board.

ISMS.online’s automation means nothing falls through the cracks:

- Every action trail is signed, timestamped, and export-ready.

- Alerts and evidence are surfaced to named managers in seconds, not after the fact.

- Centralised dashboards keep compliance visible—not buried in records.

With this infrastructure, compliance no longer interrupts your business. Instead, it becomes a leadership signal: you can defend every operational choice with transparent, living proof—building stakeholder trust even as regulations evolve.