Does Weak Data Quality Put Your AI Ambitions—and Your Reputation—on the Line?

Every major breakthrough in artificial intelligence is secretly built on an invisible, often-overlooked foundation—data quality. If your controls are passive, your results aren’t simply “inferior.” They’re dangerous: flawed decisions, missed compliance triggers, and a silent leak of trust with your most important partners. These failures don’t shout; they accumulate until the fallout is public and business-critical.

Clean data prevents silent disasters. Unchecked, errors compound and become tomorrow’s scandal.

Companies that treat data like a background task are stalling their own AI future. Investors and boards don’t forgive preventable errors. Regulations now demand documented, auditable proof—not empty optimism—that your information is accurate, current, complete, and relevant. The hard truth: over 60% of AI project failures stem from unchecked data quality gaps. When results can’t be trusted, audits become penalties and every shortcut carves a permanent scar on your organisation’s standing.

ISO 42001 Annex A.7.4 cuts through excuses. As of its release, data quality isn’t negotiable—standards, processes, and records must hold up to legal and client scrutiny at any stage of the AI lifecycle. Letting this slide isn’t just risk; it’s an open invitation for failure that could cripple your growth, security, and the faith your partners place in your results.

What Does ISO 42001 Annex A.7.4 Actually Demand—And Where Do Most Firms Fail?

ISO 42001 A.7.4 is not subtle. “Good intentions” no longer protect you from gaps, audits, or breaches. The standard imposes rigorous, operational criteria that need to be alive, traceable, and provable—down to the finest details.

You’re Obligated to Define, Prove, and Monitor Quality—Continuously

Walk through what the standard now forces every organisation to perform:

- Customised Criteria: “Accuracy,” “completeness,” and “consistency” aren’t generic checkboxes. ISO 42001 requires you to spell out what each of these means for every deployment, model, or supplier dataset. Vague statements are red flags.

- Versioned, Living Documentation: As data sources evolve, your documented standards and their implementation must be revised, timestamped, and demonstrable. Static documentation or “annual reviews” count as non-compliance in a live environment.

- Audit-Ready Evidence Chains: You must be able to present logs, change histories, breach trigger reports, and recovery actions—immediately. If you can’t, any external audit is a liability.

Firms get exposed, not because they’re unaware of requirements, but because they treat quality control as paperwork—disconnected from data updates, drift, or model retraining. The standard expects the opposite: a process that’s as dynamic as your threat landscape.

Data Quality Controls—Obligations, Actions, and Their Audit Signals

Here’s how successful organisations operationalise the Annex A.7.4 mindset:

| Obligation | What You Must Deliver | Audit-Visible Signal |
|---|---|---|
| Criteria Defined | Context-specific, written quality standards | Versioned, accessible records |
| Actively Monitored | Validation logs for every batch & change | Timestamped, traceable entries |
| Escalation Ready | Pre-defined breach triggers and processes | Evidence of escalation/review |

If any cell above is empty, your compliance is built on hope, not defence.

If “show us your latest quality breach and fix” triggers a scramble, your system is incomplete.

How Do You Set—and Defend—AI Data Quality in Practice?

Your gap isn’t technical; it’s cultural. Most failures occur when data quality is assumed, not demonstrated. The audit doesn’t forgive “almost.” Only precision, reinforcement, and active reporting actually close the loop.

Build Specifications That Survive Audit and Legal Review

Start by nailing the basics:

- Quantitative Attribute Standards: For every dataset, define explicit accuracy, completeness, and update thresholds. “High quality” means nothing without numbers.

- Measurable Thresholds, Not Adjectives: Use concrete goals—e.g., “label completeness ≥97%,” “error rate under 0.5%,” or “updated within 24 hours of event.”

- Triggered Escalation: Define specific events (threshold breaches, irregular batch logs) that force investigation, not merely alerting.

- Interval-Based Checks: Schedule regular reviews and testing cycles instead of reactive “fire drills.”
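
The thresholds above can be written down as code so that "pass" and "breach" are never a matter of opinion. What follows is a minimal sketch, not a prescribed implementation: the `QualitySpec` class and `find_breaches` function are hypothetical names, and the default numbers simply mirror the illustrative figures quoted above. Your own thresholds should come from your documented criteria.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class QualitySpec:
    """Hypothetical per-dataset thresholds, mirroring the examples in the text."""
    min_label_completeness: float = 0.97            # label completeness >= 97%
    max_error_rate: float = 0.005                   # error rate under 0.5%
    max_staleness: timedelta = timedelta(hours=24)  # updated within 24 hours


def find_breaches(spec, label_completeness, error_rate, last_updated, now):
    """Return a list of breach descriptions; an empty list means the batch passes."""
    breaches = []
    if label_completeness < spec.min_label_completeness:
        breaches.append(
            f"label completeness {label_completeness:.1%} "
            f"is below {spec.min_label_completeness:.1%}"
        )
    if error_rate >= spec.max_error_rate:
        breaches.append(
            f"error rate {error_rate:.2%} is not under {spec.max_error_rate:.2%}"
        )
    if now - last_updated > spec.max_staleness:
        breaches.append(f"data is older than {spec.max_staleness}")
    return breaches


# Usage: any non-empty result should open an investigation, not just raise an alert.
spec = QualitySpec()
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
healthy = find_breaches(spec, 0.98, 0.001, now - timedelta(hours=1), now)
failing = find_breaches(spec, 0.95, 0.010, now - timedelta(hours=30), now)
```

Keeping the specification in a frozen, versionable object means a change to a threshold shows up in version control rather than in someone's memory.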

The regulator’s first question won’t be “are you trying?” It is always: “Where is your evidence?” Every claim needs a log entry, timestamp, and chain of approval.

Embed Quality into Every Data Flow—Automation and Human Intelligence

True compliance means data quality controls are embedded into every transformation and inference—not left to annual reviews or team intuition.

Make Validation Continuous—Automate but Never Forget Human Judgement

- Automated Checks: Execute validation at every ETL pipeline and ingest. Each transformation, model train, or source change logs pass/fail status, warnings, and exceptions.

- Mandatory Human Review: Automated checks catch structure; context and subtle domain bias demand trained review—especially for ambiguous, novel, or evolving data sources.

- Full Traceability: Every time criteria tighten or relax, or new risks surface, the review process leaves a digital fingerprint—who checked, what was found, and the resulting action.

If a regulator asks you to reconstruct why a data batch was accepted two months ago, your system should instantly recall the signed review and the automated checks at the time. “We’re working on it” is an audit failure.
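
To make that kind of recall concrete, here is a small sketch of an append-only validation log that stores automated results and human sign-offs side by side. Everything in it is an assumption for illustration: the `ValidationRecord` fields, the `ValidationLog` class, and the batch and reviewer identifiers are hypothetical, and a real system would write to durable, access-controlled storage rather than memory.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ValidationRecord:
    batch_id: str
    check: str
    passed: bool
    actor: str        # pipeline job name or human reviewer ID
    recorded_at: str  # ISO 8601 timestamp in UTC
    note: str = ""


class ValidationLog:
    """Append-only log held in memory as JSON lines (illustrative only)."""

    def __init__(self):
        self._lines = []

    def record(self, batch_id, check, passed, actor, note="", at=None):
        at = at or datetime.now(timezone.utc)
        rec = ValidationRecord(batch_id, check, passed, actor, at.isoformat(), note)
        self._lines.append(json.dumps(asdict(rec), sort_keys=True))
        return rec

    def history(self, batch_id):
        """Return every record for one batch, in the order it was written."""
        records = [json.loads(line) for line in self._lines]
        return [r for r in records if r["batch_id"] == batch_id]


# Usage: automated and human checks land in the same trail.
log = ValidationLog()
log.record("batch-001", "schema_check", True, "etl-ingest-job")
log.record("batch-001", "human_review", True, "reviewer-42", note="No domain bias found")
log.record("batch-002", "schema_check", False, "etl-ingest-job", note="3 null keys")
accepted_batch_trail = log.history("batch-001")
```

Answering "why was this batch accepted?" then becomes a lookup by batch ID rather than a search through inboxes.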

Do You Treat Bias and Fairness as Core AI Risks… or Optional Extras?

Data quality in Annex A.7.4 isn’t just about “numbers.” If you’re missing documented bias checks and fairness corrections, you’re exposed to modern regulatory fire.

Make Bias and Fairness Testing Non-Negotiable—Document, Not Just Detect

- Comprehensive Contextual Assessment: Bias risk isn’t just a recruitment or lending issue. Scrutinise procurement, operations, marketing, and health datasets—including at model retrain.

- Versioned Remediation Evidence: For any fix—whether you remove data, adjust weighting, or augment samples—the before-and-after impact, reviewer sign-off, and context notes must be stored and instantly accessible to audit or stakeholder review.

- Defensible by Design: Document the pipeline so you can prove bias testing isn’t sporadic or ad hoc; it happens at predefined intervals and is part of each release.
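
As one way to picture "before-and-after impact", the sketch below measures the gap in positive-outcome rates between groups and records it alongside the reviewer and the action taken. The metric (a simple selection-rate gap), the 0.1 tolerance, and the names `selection_rates`, `parity_gap`, and `remediation_record` are all assumptions chosen for illustration. The standard does not prescribe a fairness metric, and the right one depends on your context.

```python
from collections import defaultdict


def selection_rates(rows):
    """rows: iterable of (group, outcome) pairs, where outcome is 0 or 1."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in rows:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rows):
    """Largest difference in positive-outcome rate between any two groups."""
    rates = selection_rates(rows)
    return max(rates.values()) - min(rates.values())


def remediation_record(before_rows, after_rows, reviewer, action, max_gap=0.1):
    """Bundle the before/after measurement with who signed off and what was done."""
    gap_after = parity_gap(after_rows)
    return {
        "action": action,
        "reviewer": reviewer,
        "gap_before": round(parity_gap(before_rows), 4),
        "gap_after": round(gap_after, 4),
        "within_tolerance": gap_after <= max_gap,  # max_gap is a hypothetical tolerance
    }


# Usage with made-up data: group A is favoured before reweighting.
before = [("A", 1)] * 8 + [("A", 0)] * 2 + [("B", 1)] * 4 + [("B", 0)] * 6
after = [("A", 1)] * 6 + [("A", 0)] * 4 + [("B", 1)] * 5 + [("B", 0)] * 5
record = remediation_record(before, after, "reviewer-42", "reweighted group B samples")
```

Storing the record with each release is what turns a one-off bias check into evidence that testing happens on schedule.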

Fairness controls aren’t “nice to have”—a single missed step is both a legal and reputational risk, especially where your sector is flagged as high-risk or society-facing.

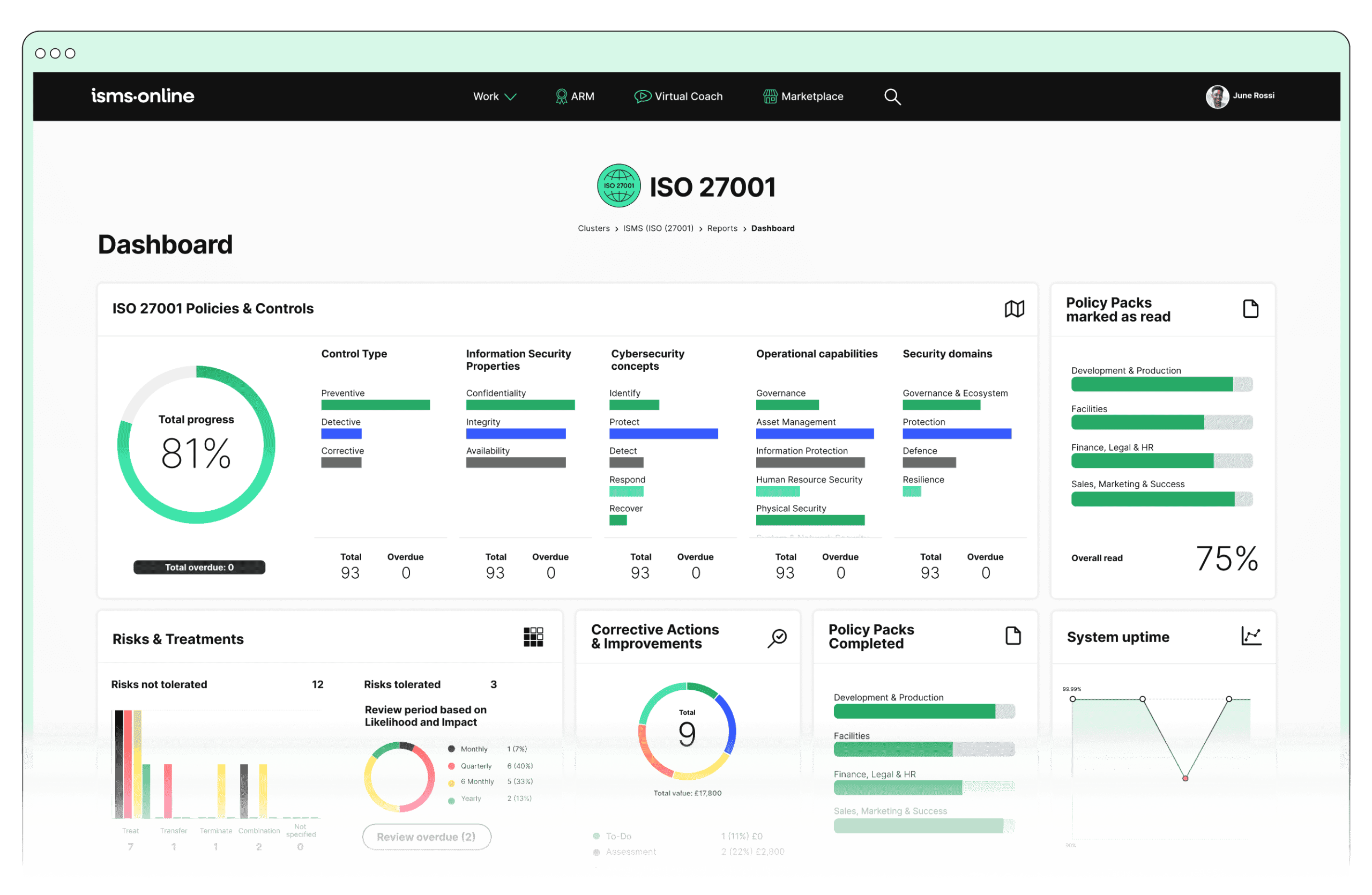

Manage all your compliance, all in one place

ISMS.online supports over 100 standards and regulations, giving you a single platform for all your compliance needs.

What Artefacts Will an ISO 42001 A.7.4 Audit Actually Require From You?

Intentions and technical explanations don’t meet the standard. Only evidence, mapped to the regulation, does.

Build a Chain of Evidence—From Spec to Remediation

Every dataset and every model must leave an unbroken trail of:

- Sub-Attribute Mapping: For each field, control status and monitoring logs are mapped against the A.7.4 requirements—proving your awareness and active management.

- Threshold Logs and Change Requests: When something crosses a red line, the decision, trigger, review, and fix are all documented, versioned, and available for review.

- Sign-Off and Approval Chains: Evidence of human intervention—who approved, when, why—anchors your controls in real-world accountability.
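
One possible way to make a trail "unbroken" in a checkable sense is to link each evidence entry to the hash of the one before it. This is a swapped-in technique (a simple SHA-256 hash chain), not something the standard mandates, and the `append_entry` and `verify_chain` helpers and the payload fields are hypothetical.

```python
import hashlib
import json


def _digest(payload, prev_hash):
    """Hash the entry content together with the previous entry's hash."""
    body = json.dumps(payload, sort_keys=True) + prev_hash
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(chain, payload):
    """Append an evidence entry linked to the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else ""
    entry = {
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": _digest(payload, prev_hash),
    }
    chain.append(entry)
    return entry


def verify_chain(chain):
    """Return True if every entry links to its predecessor and matches its hash."""
    prev_hash = ""
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["payload"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Usage: spec, breach, and sign-off recorded as one linked trail.
chain = []
append_entry(chain, {"type": "spec", "dataset": "claims", "version": 3})
append_entry(chain, {"type": "threshold_breach", "metric": "error_rate", "status": "fail"})
append_entry(chain, {"type": "sign_off", "approver": "reviewer-42", "reason": "fix verified"})
intact = verify_chain(chain)
```

If anyone later edits an earlier entry, verification fails from that point on, which gives an auditor a quick way to test whether the trail has been rewritten.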

When the audit window opens—or a client or regulator asks for proof—your organisation should be able to respond in minutes, not days.

Auditors don’t care what you intended—they care what you can prove, on demand, without scrambling.

What Are the Strategic Benefits of Robust AI Data Quality—Beyond the Next Audit?

Treating data quality as a “compliance project” is a losing tactic. Business leadership understands that superior processes and proof are signals: to your clients, your partners, and the market.

Raise the Ceiling on Trust, Speed, and Lasting Advantage

- Accelerates Delivery, Cuts Risk: Automated pipelines, clear quality controls, and rapid remediation eliminate rework—so projects ship faster without sacrificing defensibility.

- Builds Trust with Clients and Regulators: Audit-ready controls foster transparency and readiness, disarming the scrutiny of even your toughest stakeholders.

- Future-Proofs Against Regulatory Change: Documented and actively managed records let your AI and compliance teams pivot swiftly when new requirements, risks, or technologies hit—while others are still playing catch-up.

Those who treat ‘best efforts’ as policy trade away speed and trust; the new market leaders wield evidence like a weapon.

How ISMS.online Pushes Data Quality from “Project” to Standard, At Scale

Getting data quality right isn’t an optional extra for your AI reputation. Boards, auditors, and major partners expect living controls, artefact trails, and expert support as standard—in context and in minutes.

- Instant Diagnostic Review: Our platform’s workflow empowers you to map every data quality obligation in A.7.4, uncover gaps, and sequence remediation actions for audit or strategic analysis.

- Specialist Guidance, Not Just Software: You can reach out confidentially to compliance specialists who tailor your controls to your sector’s actual risks, bridging the gap between internal policies and live external demands.

- Relentless Artefact Management and Automation: Store, manage, and version every checklist, log, and sign-off, ready to surface on demand so every audit is a process of performance, not panic.

Audit readiness isn’t a document you file away. It’s a living system—with evidence at your fingertips.

With ISMS.online, your compliance isn’t just “covered.” Your reputation, delivery, and partner trust ascend to the next level.

Can You Defend—Right Now—Every Data Quality Decision in Your AI Estate?

Reality check: evidence gaps burn reputations faster than data leaks. Your compliance checklist isn’t just a formality; it’s an ongoing self-test that closes the gap before regulators or clients open it up for you.

Ask yourself, and your team:

- Is every data set mapped to explicit, updated quality requirements?

- Can you instantly (not “soon”) produce automation and manual validation logs for any model or process?

- Is every fix, exception, and review versioned and stored, never lost in an inbox?

- Are change logs and explanation files complete for special, edge-case decisions?

- If an auditor or board requests proof, can you supply the artefact within five minutes?

If you hesitate anywhere, your exposure is real—and your next audit, tender, or market move may collapse on the missing proof.

AI model failures rarely surprise those who trace the root cause—they build up, quietly and invisibly, inside unmonitored, untested data. ISO 42001 A.7.4 was written because hope, memory, and ‘best efforts’ aren’t proof. Raise your bar—test, log, and fix before failures announce themselves.

Lead the Field: Strengthen Your AI Data Quality with ISMS.online

Your organisation’s peak potential—credibility, growth, defensibility—is limited by the rigour and traceability of your data quality controls. With ISMS.online, you fortify the entire process:

- Live ISO 42001 A.7.4 Diagnostic: Map and patch data quality exposures before they morph into audit failures or business disruption.

- Private Consultation with Compliance Specialists: Get sector-tuned guidance and practical planning so your controls are audit-ready and immediately trusted by partners.

- Persistent, Automated Documentation: Automatic artefact versioning, audit triggers, and evidence management keep your readiness real and relentless.

Choose to lead with proof, not hope. Outpace regulatory shifts, cut time-to-audit, and turn every quality obligation into a brand asset. ISMS.online transforms compliance from burden to business advantage.

Frequently Asked Questions

What does ISO 42001 Annex A Control A.7.4 demand for data quality in AI systems?

A.7.4 requires you to define, enforce, and prove measurable data quality for every AI dataset, always with evidence—never implication. This means your team must set explicit standards for accuracy, completeness, consistency, timeliness, fitness-for-purpose, and bias controls, unique to each dataset, and keep these requirements live as models, risks, and uses evolve. Auditors expect to see not just your intentions but versioned, document-tracked benchmarks, rationale behind each threshold, and a review process tied to accountable personnel, with zero reliance on “to be assigned” placeholders.

If your data quality can’t be demonstrated, your compliance is fiction—and regulators treat fiction as failure.

Which elements must be documented to satisfy A.7.4?

- Data-specific criteria: Standards for accuracy, completeness, consistency, bias, and intended use—written down, not assumed.

- Justification of thresholds: Why each metric qualifies your data as “fit,” in context.

- Continuous logging: Versioned updates; evidence of reviews, sign-offs, and exception handling; what, who, when, and why.

- Ongoing responsiveness: Documented schedule for review and revision every time your model, use case, or regulation shifts.

Lack of formal, living evidence causes more audit delays and regulatory heat than any algorithmic risk. ISMS.online’s workflow keeps these controls current and defensible at every turn.

How should organisations assess and assure data quality under Annex A.7.4?

You need operational assurance, not annual aspiration. Leading organisations embed data profiling, anomaly detection, and drift monitoring directly into every data pipeline. It’s not a quarterly task—it’s a routine, with both automation and human checkpointing.

Define key metrics per dataset—for example: percentage of missing values, outlier thresholds, revalidation triggers by model phase, and bias indices specific to regulatory focus. Automated tools surface deviations in real time. But automation won’t catch context-driven flaws: assign reviewers to check for latent bias, relevance drift, or emergent trends. Every remediation—who saw what, who acted, and how it was resolved—is logged and version-linked to the data batch.
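
Two of the metrics named above, percentage of missing values and an outlier threshold, can be sketched with nothing but the standard library. The interquartile-range rule and the multiplier of 1.5 are assumptions picked for illustration; your documented criteria should state which rule and which limits apply to each dataset.

```python
import statistics


def missing_percentage(values):
    """Share of None entries, as a percentage of all entries."""
    if not values:
        return 0.0
    return 100.0 * sum(v is None for v in values) / len(values)


def iqr_outliers(values, k=1.5):
    """Values outside [Q1 - k*IQR, Q3 + k*IQR], ignoring None entries.

    Needs at least two non-missing values to estimate the quartiles.
    """
    present = [v for v in values if v is not None]
    q1, _, q3 = statistics.quantiles(present, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in present if v < low or v > high]


# Usage with a made-up column: two gaps and one suspicious reading.
column = [10, 12, 11, None, 13, 12, 11, 95, 10, None]
pct_missing = missing_percentage(column)
suspects = iqr_outliers(column)
```

Automated output like this is the prompt for human review, not a replacement for it: a flagged value may be an error or a genuine rare event, and that judgement belongs in the log.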

Data quality without a documented process is a recipe for regulatory blindside—systems that ‘just work’ fall apart as soon as scrutiny sharpens.

What does true operational assurance look like?

- Continuous checks: Each new and historical dataset is validated, tracked, and revisited after any workflow or risk change.

- Automated + human review: Machines highlight anomalies; people confirm context and correction.

- Traceable evidence: Logs capture every review, exception, and sign-off, fully attributable.
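
The pairing of automated and human review can be expressed as a simple acceptance gate: a batch is accepted only when every automated check has passed and a named reviewer has signed off. The `BatchReview` class and its fields are hypothetical, and this is a sketch of the idea rather than a complete workflow.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class BatchReview:
    batch_id: str
    automated_results: dict = field(default_factory=dict)  # check name -> passed?
    reviewer: Optional[str] = None
    reviewer_approved: Optional[bool] = None

    def decision(self):
        """Return (accepted, reason); both layers must agree before acceptance."""
        if not self.automated_results:
            return False, "no automated checks recorded"
        failed = sorted(name for name, ok in self.automated_results.items() if not ok)
        if failed:
            return False, "automated checks failed: " + ", ".join(failed)
        if self.reviewer is None or self.reviewer_approved is None:
            return False, "awaiting human sign-off"
        if not self.reviewer_approved:
            return False, f"rejected by {self.reviewer}"
        return True, f"approved by {self.reviewer}"


# Usage: the same batch before and after a reviewer signs off.
review = BatchReview("batch-007", {"schema": True, "null_rate": True})
pending = review.decision()
review.reviewer, review.reviewer_approved = "reviewer-42", True
signed = review.decision()
```

Because the reason string is returned with every decision, it can be written straight into the evidence log alongside the outcome.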

ISMS.online automates much of this cycle, but demands evidence from your team, not just settings and code.

Where do most organisations lose ground—or get ahead—on A.7.4 data quality?

Failures begin when teams treat A.7.4 as a compliance checkbox, not an operating discipline. The strongest organisations build validation, revalidation, and issue logging into everyday processes—so evidence is always ready, not scrambled post hoc.

Laggards rely on ad hoc manual review, overlook new data risks after model tuning, or leave version history and incident logs scattered in emails and wikis. This leads to breakdowns at audit time.

Organisations rise when they design quality proof into every workflow—those who scramble for logs lose credibility fast.

What distinguishes those who win at A.7.4 compliance?

- Custom validation protocols for each model and dataset—including automated testing for bias and statistical control.

- Continuous, logged evidence of every review, exception, and corrective action—never undocumented.

- Proactive risk reviews: Immediate reevaluation and retraining when business context or technical landscape shifts.

- Integrated, always-on dashboards like ISMS.online, replacing static paperwork with live audit trails.

Which templates, logs, or frameworks actually pass muster for data quality under A.7.4?

There’s no certified global template—the audit proof rests on whether your documentation matches your real-life data pipelines. What works is detailed, dataset-by-dataset matrices: not only the “what” of quality, but the “who, when, why” behind every validation result.

A template’s just paper until a breach—systems that keep logs living protect you in real time and in retrospect.

Essential framework components:

- Matrix of requirements: Dataset, standard, method, responsible party, and evidence for each validation cycle.

- Actionable, versioned logs: Each pass/fail outcome, exception, remediation step, and sign-off—version-controlled, accessible.

- Change control records: Summaries for every update: What changed? Why? Who authorised? When reviewed?

- System integration: Audit logs are embedded into operational tools (like ISMS.online), not isolated files on a server.
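
The matrix of requirements can be kept as structured rows and checked for blanks automatically. The sketch below assumes the five columns listed above; the `RequirementRow` and `find_gaps` names, the placeholder strings, and the sample rows are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class RequirementRow:
    dataset: str
    standard: str
    method: str
    responsible_party: str
    evidence: str


# Values treated as "not really filled in" (an assumption; extend as needed).
PLACEHOLDERS = {"", "tbd", "to be assigned"}


def find_gaps(matrix):
    """Return (dataset, column) pairs where a cell is blank or a placeholder."""
    gaps = []
    for row in matrix:
        for column in fields(row):
            value = getattr(row, column.name)
            if value.strip().lower() in PLACEHOLDERS:
                gaps.append((row.dataset or "<unnamed>", column.name))
    return gaps


# Usage with made-up rows: the second dataset has no owner and no evidence link.
matrix = [
    RequirementRow("customers_2024", "completeness >= 97%", "nightly profile",
                   "data-steward-1", "log://profiles/customers_2024"),
    RequirementRow("claims_2024", "error rate < 0.5%", "sampled manual audit",
                   "To be assigned", ""),
]
gaps = find_gaps(matrix)
```

Running a check like this on every change keeps placeholder owners and missing evidence links from surviving until audit day.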

Audit defensibility hinges on ready, complete, and context-relevant evidence—paperwork that matches operational reality.

How can a data quality lapse trigger A.7.4 failures and create real-world risk?

A single gap (a missed log, an undocumented correction, a missing approval, or an obsolete threshold) can collapse your compliance standing. Regulators and auditors now probe the entire workflow, not just representative samples. When gaps appear, scrutiny widens and the consequences follow: lost certifications, exclusion from bids, erosion of customer trust, and even regulatory penalties if the risk leads to an incident.

The wrong audit answer isn’t just bad luck—it’s a sign your processes are fiction, and your readiness is only cosmetic.

What can happen after one evidence or standard failure?

- Immediate audit expansion, demanding logs across the full AI pipeline.

- Increased risk ratings, affecting certification and regulatory approval.

- Exposure to compensation or penalty claims if poor-quality output harms clients or markets.

- Heightened demands for recovery planning, often at high operational cost.

ISMS.online’s preventative controls and automated logging insulate your reputation and keep the evidence trail unbroken, even as personnel or systems evolve.

What evidence must be retained to ensure A.7.4 data quality certifies under ISO 42001?

Certification depends on demonstrable, consistent records—not ad hoc recollection. Your archive must include:

- Signed, version-controlled standards: Mapped to every dataset and application.

- Evidence logs for all validation cycles: Both automated and human, including time-stamped outcomes, reviewer IDs, and corrective action documentation.

- Full change history: Why was a standard altered? Who called it? When did review and approval occur?

- Bias and fairness logs: Including remediations, with corrective outcomes and reviewer linkage.

- Accessible, living dashboard: Auditor access should never slow for manual retrieval or patchwork evidence.

Missing links trigger immediate audit findings, or worse, signal the need for deeper inquiry. With ISMS.online, your data quality assurance isn’t a project or a mad rush before the audit—it’s a daily, automatic habit that breeds regulatory confidence and operational calm.

Defending your AI pipeline is a battle you win with daily evidence, not declarations—every log is a shield waiting to be tested.

When data quality becomes a living, defensible habit, compliance stops being a cost and becomes a competitive edge. Your ability to produce robust, ready evidence at any moment defines trust in your AI—not just for audit, but for every customer, regulator, and stakeholder who looks closely. ISMS.online puts that proof within arm’s reach, every single day.