What Does “Responsible Use” of AI Systems Really Require Under ISO 42001 Annex A.9.2?

AI is no longer a sideshow. It runs critical workflows, shapes how boards read risk signals, and decides which doors your team can open in regulated markets. ISO 42001 Annex A.9.2 asks organisations to define and document the processes for the responsible use of AI systems, and those processes only count if they operate in practice. The standard is not asking for polished policy decks or PR gloss. Auditors will look for operational evidence that is recorded, current, and verifiable when requested. Compliance officers, CISOs, and CEOs are on the hook to show that responsible use is embedded in controls, documented authority chains, and daily decisions that stay traceable under audit, not just a phrase buried in a strategy slide.

When risk spreads faster than fixes, the real test is whether you can prove what you did, not just what you promised.

Every AI-fueled disaster (systemic bias in credit scoring, a rogue bot amplifying privacy leaks, automated decisions that violate patient rights) has hammered home the same lesson: “responsible use” has become the ticket to trust, market entry, and regulatory survival. Today, regulators, clients, and partners can demand prompt evidence of how your AI operates, who checked it, and how you know it is not causing silent, compounding risk.

Annex A.9.2 pulls responsible AI out of the shadows. It calls for processes that are not just written but live, monitored, and aligned with what actually happens day to day. Documented proof covering every system, algorithm, and exception should be ready on demand. If what your workflows claim and what your controls actually do don’t match, you are flying with your compliance lights off, ripe for a regulatory or reputational collision.

How Does Documentation Turn Policy Into Real-World Protection?

A thick policy binder, ignored by staff and never checked against reality, is a breach waiting to happen. ISO 42001 raises the bar: every statement of “responsibility” must be documented, operational, and quickly retrievable during an audit or incident. This isn’t busywork; it’s a survival rule when mistakes go viral in seconds.

Inventory, Mapping, and Exception Accountability

You don’t get credit for what you can’t trace. Responsible AI demands systems thinking at pace:

- Build a living inventory of every AI touchpoint—even prototypes and shadow IT deployments. Model versions, data sources, approvals, and change logs must be listed, not assumed.

- Document process ownership: step by step—from model design and data intake to operational hand-offs and human review. Every phase has a named owner, with responsibility for approvals and overrides.

- Log every exception, override, and deviation with a timestamp, a rationale, and an explicit assignment of accountability.

The moment you lose track of who changed what, when, and why, you’re gambling with audit failure.

Forgetting to map a process is like boarding a plane with no co-pilot—when something slips, there’s no fallback.
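As a minimal sketch of the inventory and exception-log requirements above, the Python below models an inventory entry and an exception record as frozen dataclasses that refuse to be created without a named owner, a rationale, or an accountable person. It also includes a check for exceptions that reference systems missing from the inventory, a common sign of shadow deployments. The class names, field names, and status values are hypothetical; ISO 42001 does not prescribe a schema, so adapt the fields to your own register.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AISystemEntry:
    """One row in a hypothetical AI system inventory."""
    system_id: str
    owner: str                      # a named individual, not a team alias
    model_version: str
    data_sources: tuple[str, ...]
    status: str = "production"      # e.g. "prototype", "production", "retired"

    def __post_init__(self):
        if not self.owner.strip():
            raise ValueError(f"{self.system_id}: every AI system needs a named owner")


@dataclass(frozen=True)
class ExceptionRecord:
    """A logged exception, override, or deviation (illustrative fields)."""
    system_id: str
    description: str
    rationale: str
    accountable_person: str
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self):
        # Reject records that would leave the "why" or the "who" undocumented.
        for name in ("rationale", "accountable_person"):
            if not getattr(self, name).strip():
                raise ValueError(f"exception on {self.system_id} is missing {name}")


def unregistered_exceptions(inventory, exceptions):
    """Return exceptions that point at systems absent from the inventory."""
    known = {entry.system_id for entry in inventory}
    return [e for e in exceptions if e.system_id not in known]
```

Running `unregistered_exceptions` on a schedule turns "list, don't assume" into a check that fails loudly when an AI touchpoint exists in the logs but not in the register.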

Instant Traceability Under Pressure

Regulators don’t wait. Clients and boards don’t forgive missing logs when things break. ISO 42001 demands:

- Workflow records and exception logs that are accessible on demand, ready for inspection rather than reconstructed after the fact.

- Prompt evidence: a delay in producing records is likely to be read as a control failure.

In a climate where risk is measured in seconds and losses in millions, audit-at-speed is protection and opportunity. Executives who stand ready with proof protect brand reputation and seize the trust advantage against competitors left scrambling.
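One way to keep workflow records and exception logs ready for inspection is to store them in a structured form that can be filtered and exported directly. The sketch below assumes each log record is a dict with a `system_id` and a timezone-aware ISO 8601 `timestamp`; that schema and the `export_evidence` function name are illustrative assumptions, not part of the standard or of any particular product.

```python
import json
from datetime import datetime, timezone


def export_evidence(records, system_id, start, end):
    """Return a JSON evidence pack for one system's records in [start, end).

    Assumes each record is a dict with a "system_id" key and a timezone-aware
    ISO 8601 "timestamp" string (e.g. "2024-03-01T09:00:00+00:00").
    `start` and `end` must also be timezone-aware datetimes.
    """
    def when(record):
        return datetime.fromisoformat(record["timestamp"])

    # Filter to the requested system and window, then order chronologically.
    selected = sorted(
        (r for r in records if r["system_id"] == system_id and start <= when(r) < end),
        key=when,
    )
    pack = {
        "system_id": system_id,
        "from": start.isoformat(),
        "to": end.isoformat(),
        "exported_at": datetime.now(timezone.utc).isoformat(),
        "records": selected,
    }
    return json.dumps(pack, indent=2)
```

The window is half-open (start inclusive, end exclusive) so consecutive exports never double-count a record.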

Why Are Fairness, Transparency, Accountability, and Human Oversight Non-Negotiable?

No organisation leads—or survives—if its AI is a black box, unchecked for bias, or left running with no clear human recourse. Annex A.9.2 cements what your stakeholders and markets already demand: fairness, openness, real accountability, and proof of human control.

Fairness Audited and Documented

Unchecked bias in data, models, or deployment quietly erodes trust and brings fines, lost deals, and public backlash. ISO 42001 expects ongoing, scheduled fairness reviews with:

- Bias audits with an assigned organisational owner, covering both input data and business outputs.

- Action logs that don’t just record “flagged issues” but trace every fix, from source to outcome.

- Documented evidence that datasets reflect the population the system actually serves, including class balance; every update is logged, not hand-waved.

Bias isn’t a tech bug—it’s a business liability that grows every month it’s ignored.
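A scheduled fairness review needs a concrete, repeatable metric. As one illustrative choice (not one ISO 42001 mandates), the sketch below computes per-group selection rates and flags any group whose rate falls below a fraction of the best-performing group’s rate. The 0.8 default mirrors the “four-fifths” heuristic from US employment-selection guidance; treat both the metric and the threshold as assumptions to be set by your own fairness policy.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Share of positive decisions per group.

    `outcomes` is an iterable of (group, approved) pairs, where approved is a bool.
    """
    totals, positives = defaultdict(int), defaultdict(int)
    for group, approved in outcomes:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_flags(outcomes, threshold=0.8):
    """Return {group: ratio} for groups whose rate is below threshold x the best rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected; the ratio is undefined
    return {g: round(r / best, 3) for g, r in rates.items() if r / best < threshold}
```

Each flagged group and its ratio can be written to the action log, so the eventual fix is traceable from source to outcome.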

Transparency That Actually Functions

Opaque “black box” decisions and unexplained outcomes are magnets for headlines and regulator visits. ISO 42001 lifts the expectation: every substantial model, logic edit, data enrichment, and workflow override needs a white-box record. Stakeholders and end users must:

- Be able to review the facts—how the decision happened, how data was handled, and how it fits the intended risk appetite.

- Challenge AI outputs with a clear remediation channel; “AI said so” is finished as an answer.

Systems only qualify as responsible when scepticism can be met with instant, actionable evidence and feedback.

Human Decision—Not System Drift

The days of hiding behind “the algorithm did it” are over. Annex A.9.2 insists:

- Map precisely where humans approve, halt, or escalate workflow outputs.

- Assign named individuals with documented power to override, remediate, or pause AI in live environments.

No software, no LLM, no automated pipeline can claim “responsible use” if humans cannot intervene—clearly, quickly, and with a record to prove it.
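The sketch below illustrates the named-override requirement: a hypothetical `OversightControl` wrapper lets only listed individuals pause or resume a system, requires a reason, and logs every attempt, including refused ones. While the system is paused, `decide` withholds the model output so the case can be routed to manual handling. The class, its methods, and the log fields are assumptions for illustration, not a reference design.

```python
from datetime import datetime, timezone


class OversightControl:
    """Hypothetical pause/override switch for one AI system.

    Only individuals named in `authorized` may pause or resume the system,
    and every attempt (allowed or refused) is appended to `audit_log`.
    """

    def __init__(self, system_id, authorized):
        self.system_id = system_id
        self.authorized = frozenset(authorized)
        self.paused = False
        self.audit_log = []

    def _record(self, person, action, reason, allowed):
        self.audit_log.append({
            "system_id": self.system_id,
            "person": person,
            "action": action,
            "reason": reason,
            "allowed": allowed,
            "timestamp": datetime.now(timezone.utc).isoformat(),
        })

    def set_paused(self, person, paused, reason):
        allowed = person in self.authorized and bool(reason.strip())
        # Log before enforcing, so refused attempts leave a trace too.
        self._record(person, "pause" if paused else "resume", reason, allowed)
        if not allowed:
            raise PermissionError(
                f"{person} may not change the state of {self.system_id} "
                "without authority and a stated reason"
            )
        self.paused = paused

    def decide(self, model_output):
        """Pass the model output through only while the system is not paused."""
        if self.paused:
            return None  # caller routes the case to manual handling
        return model_output
```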

How Should Approval and Exception Workflows Be Structured and Proofed?

Approvals and exception handling define whether an AI system is a manageable tool or a runaway hazard. Under ISO 42001, workflows must be traceable, real-time, and rooted in explicit human authority. The system is only as strong as its evidence trail.

Approval Chains—Explicit, Verifiable, and Actionable

- No critical system changes occur without a responsible owner’s signature.

- Every approval event includes a timestamp, tamper-evidence, and a reasoned rationale—no retroactive justification allowed.

- Automated escalation routes must be seamless—exceptions, emergencies, and overrides only take place with named, justified ownership.

Every unlogged exception is a gap in your defence; every untimestamped approval is a potential headline.
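Tamper evidence for approvals is often implemented by hash-chaining log entries, so that editing any past entry breaks the chain from that point on. The minimal sketch below uses SHA-256 from Python’s standard library; the `ApprovalLog` class and its field names are hypothetical. Note the limitation: a chain makes edits detectable, not impossible. Anyone able to rewrite the whole log could recompute every hash, so in practice the latest hash would typically be anchored somewhere the log writer cannot alter, such as write-once storage or a separate system.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(entry, prev_hash):
    """Hash an entry together with the previous entry's hash."""
    payload = json.dumps(entry, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ApprovalLog:
    """Append-only approval log where each entry commits to its predecessor."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []  # list of (entry_dict, hash) pairs

    def approve(self, change_id, approver, role, rationale):
        if not rationale.strip():
            raise ValueError("approvals need a rationale recorded at approval time")
        entry = {
            "change_id": change_id,
            "approver": approver,
            "role": role,
            "rationale": rationale,
            "timestamp": datetime.now(timezone.utc).isoformat(),
        }
        prev = self.entries[-1][1] if self.entries else self.GENESIS
        self.entries.append((entry, _digest(entry, prev)))

    def verify(self):
        """Recompute the chain; return the index of the first bad entry, or None."""
        prev = self.GENESIS
        for i, (entry, stored) in enumerate(self.entries):
            if _digest(entry, prev) != stored:
                return i
            prev = stored
        return None
```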

Exception Management—From Weakness to Continuous Improvement

Regulators are most interested in how you handle exceptions—not how you script perfection. Annex A.9.2 wants you to:

- Treat every override or deviation as an input for fast root cause analysis. Don’t hide gaps—use them to strengthen resilience by injecting them into documented lessons learned.

- Feed exceptions into immediate control reviews, staff training cycles, and policy updates. True best practice is a living exception log that actively improves the system.

When organisations celebrate lessons from mistakes—instead of hiding them—they elevate their audit posture and build deeper trust with every incident.
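To turn exceptions into lessons learned rather than isolated tickets, it helps to look for causes that keep recurring. The short sketch below assumes each exception record carries a `root_cause` label assigned during analysis (a hypothetical field) and returns the causes that cross a repeat threshold, which could then be queued for a control review or a training update. The threshold of three is an arbitrary illustration.

```python
from collections import Counter


def recurring_root_causes(exceptions, min_count=3):
    """Return (cause, count) pairs seen at least `min_count` times, most frequent first.

    Records without a root_cause label are skipped rather than counted.
    """
    counts = Counter(e["root_cause"] for e in exceptions if e.get("root_cause"))
    return [(cause, n) for cause, n in counts.most_common() if n >= min_count]
```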

What Does Living, Continuous Monitoring Look Like for Responsible AI Use?

Policies and controls that gather dust are dead weight. Live compliance demands “always-on” monitoring: quantitative, qualitative, and with a feedback loop that shortens risk exposure from months to minutes.

Beyond Passive Logging—Active Signal and Intervention

- Monitor error rates, false positives/negatives, model drift, and security anomalies as they happen.

- User feedback, support tickets, and audit findings must feed into dashboards monitored by compliance and business leaders.

- Alerts trigger not just reports, but action—updated controls, rapid incident response, and one-click escalation to decision-makers.

Responsible use is only real when oversight prevents a problem from compounding — before headlines or regulators come calling.

Dashboards matter less than what your team does in response. Build your alert logic so that “learning operations” is not just a phrase, but a behaviour traced from log to leadership action.
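As an example of alert logic that leads to action, the sketch below computes the Population Stability Index (PSI), one common drift measure, between a baseline and a current binned distribution of a model input or score, and returns an escalation payload when it exceeds a threshold. PSI is a technique chosen here for illustration. Rules of thumb vary (0.1 and 0.25 are often quoted), so the 0.2 default and the alert fields are assumptions.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as lists of bin proportions.

    PSI = sum over bins of (a - e) * ln(a / e); `eps` guards against empty bins.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_alert(expected, actual, threshold=0.2):
    """Return an escalation payload when PSI exceeds `threshold`, else None."""
    psi = population_stability_index(expected, actual)
    if psi > threshold:
        return {"metric": "psi", "value": round(psi, 4), "action": "escalate to model owner"}
    return None
```

Routing the returned payload to a named owner, rather than only to a dashboard, is what turns the alert into a traceable leadership action.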

How Do You Achieve Audit-Grade Explainability and Real Transparency?

No one running serious AI in a regulated business gets a pass on explainability from auditors, clients, or executive boards. ISO 42001 draws the line: explainability must be operational rather than theoretical, and organisation-wide rather than tucked away in a wiki.

Make Explainability Your Advantage

- Document every code change, model parameter, and operational tweak: A single missing record can unravel months of trust.

- Public, reviewable FAQs and reporting channels: empower users to challenge or appeal any decision, and your internal logs must trace every appeal through to resolution.

- Maintain snapshots: before/after model changes, with explicit rationale. Each change is a proof point under scrutiny, and a shield when challenged.
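A before/after snapshot can be as simple as a diff of model settings stored alongside the rationale. The sketch below assumes parameters are kept in flat dicts and records only the keys whose values changed; the function name and record layout are illustrative. One simplification: a key present with the value `None` is treated the same as a missing key.

```python
from datetime import datetime, timezone


def change_snapshot(before, after, rationale, author):
    """Build a before/after record of changed model settings.

    `before` and `after` are flat dicts of parameters (e.g. thresholds,
    feature counts, model version); the record layout is illustrative.
    """
    if not rationale.strip():
        raise ValueError("a change snapshot needs an explicit rationale")
    keys = sorted(set(before) | set(after))
    # Keep only keys whose value differs; absent keys show up as None.
    changes = {
        k: {"before": before.get(k), "after": after.get(k)}
        for k in keys
        if before.get(k) != after.get(k)
    }
    return {
        "author": author,
        "rationale": rationale,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "changes": changes,
    }
```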

Organisations that treat explainability as a client and regulator-facing asset—not a compliance checkbox—build brand preference and secure licences to operate in sensitive, high-value domains.

Trust gets won the day you can reconstruct any system decision, with a paper trail that stands up in any regulator’s hands.

Why Is Continual Improvement Not Just Good Practice—But Survival?

Modern AI and risk landscapes are shape-shifters: laws change, adversaries pivot, and your tools age by the day. Only continuous, documented improvement keeps you on the safe side of market and regulatory lines.

Building a Cycle that Learns—Quarterly, Not Annually

- Trigger structured “lessons learned” on every incident and meaningful customer or staff feedback.

- Use these findings to update not just documents, but living workflows, staff responsibilities, and technical standards.

- Don’t wait for external audits or fines to overhaul controls. The organisations that stay proactive—reassessing compliance and technical alignment quarterly—convert risk into market share and influence regulatory direction.

The longer you wait to adapt, the bigger the learning bill when the next wave hits.

Survival isn’t a function of resources, but speed—how fast your team learns and iterates when risk vectors shift.
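The quarterly cadence described above can be enforced with a simple overdue check. This sketch assumes you track the date of each system’s last completed review (or `None` if it has never been reviewed) and uses 91 days as an approximation of a quarter; both the data shape and the cadence are assumptions to adjust to your own review schedule.

```python
from datetime import date, timedelta


def overdue_reviews(last_reviewed, today, cadence_days=91):
    """Return system IDs whose last review is older than the cadence.

    `last_reviewed` maps system_id -> date of last completed review,
    or None if the system has never been reviewed.
    """
    overdue = []
    for system_id, last in last_reviewed.items():
        if last is None or today - last > timedelta(days=cadence_days):
            overdue.append((system_id, last))
    # Never-reviewed systems first, then the stalest reviews.
    overdue.sort(key=lambda item: (item[1] is not None, item[1] or date.min))
    return [system_id for system_id, _ in overdue]
```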

How ISMS.online Delivers Responsible AI, Every Day

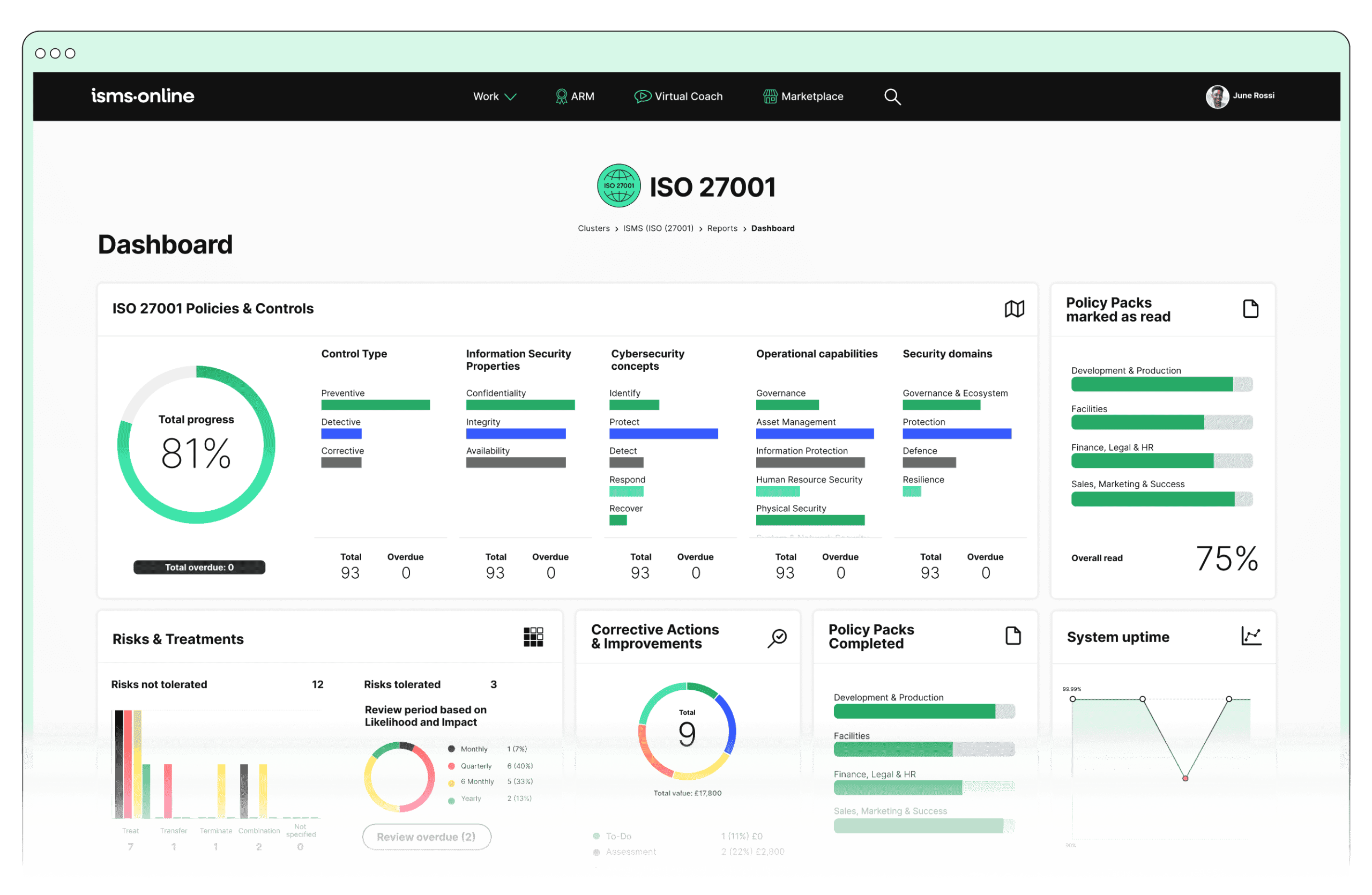

Translating ISO 42001 Annex A.9.2 from aspiration to action is hard. Manual documentation, scattered logs, and siloed approvals leave you exposed. ISMS.online replaces patchwork with platform-based, auditable workflows:

- Centralised, living inventories: real-time mapping of AI systems, owner assignments, and process step authority.

- Unified documentation: procedures, approvals, and change logs maintained and instantly accessible for audit or inquiry.

- Automated exception and incident logging: every override, rationale, and corrective action tracked and traceable, without reliance on manual memory.

- KPI and dashboard visibility: see risk, compliance, and performance metrics at a glance, and trigger escalations to action before issues spread.

Your board, stakeholders, and regulators won’t wait for “next quarter’s review.” With ISMS.online, compliance, trust, and responsible AI use stay always-on and audit-ready.

Responsible AI use is proven in the systems you run—before anyone knocks on your door.

Ready to build the defence and confidence your market, regulators, and partners demand? Empower your responsible AI journey with ISMS.online—where auditable action, not just policy, leads.

Frequently Asked Questions

Why Does ISO 42001 Annex A Control A.9.2 Make Responsible AI Use a Live Test of Business Resilience?

Responsible AI is now the audit trail you can’t fake or fix after the fact. Annex A Control A.9.2 of ISO 42001 doesn’t just flag “responsible use” as best practice—it ties it to every real decision, override, and system owner in your organisation. When a regulator, partner, or board member wants proof, you must show dynamic, timestamped records, not theoretical policies. Your licence to operate increasingly depends on demonstrating—without scrambling—that AI decisions are visible, reviewed, and owned, every day.

As digital trust becomes a real market differentiator, organisations are discovering that responsible AI is the moving target of modern resilience. Problems rarely start with code. They usually begin with the absence of a clear, reviewable record when something goes wrong. More than 75% of businesses flagged in regulatory spot checks lacked adequate documentation for AI decisions—leaving them exposed to fines and rapid erosion of partner confidence.

True resilience isn’t shown by grace under pressure—it’s proven by the evidence you can produce without warning.

How Does A.9.2 Transform Responsible AI from Buzzword to Bottom Line?

For executives and CISOs, responsible AI is no longer an exercise in reassurance; it is a structured, measurable discipline. Revenue, supply-chain relationships, and even executive tenure increasingly depend on the organisation’s ability to supply living evidence of oversight. Relying on stale trust signals or static statements of intent is not a theoretical gap: it is a live operational risk, one missed contract away from dominating your board’s agenda.

What Does ISO 42001 A.9.2 Really Require for Live, Documented AI Use Processes?

A.9.2 requires that the processes for responsible AI use be defined and documented. In practice, meeting that expectation means every AI-related action is digitally mapped: no shadow deployments, no anonymous overrides, no paperwork gaps when the heat is on. Compliance is not about checking a box; it’s about maintaining a dynamic record that tracks each approval, incident, and follow-up. That means rigorously maintaining:

- An up-to-date AI asset registry, with explicit owner assignments and a clear status for each system, so there are no blind spots.

- Consistent, role-based sign-off chains for every approval, exception, or override, with the action and owner recorded at each step.

- Timestamped exception logs capturing what triggered a deviation, who acted, and what was done to resolve and learn from it.

- Scheduled monitoring and review protocols with role-based accountability and hard evidence, replacing annual, unsupervised recaps.

- Integrated legal mapping that links each process and log to the relevant regulatory mandates, showing that nothing falls through jurisdictional cracks.

Regulators, partners, and major clients now audit not just your intent but your operational discipline, one digital thread at a time.

Components of a Dynamic Responsible AI Use Process

| Essential Function | Audit Evidence | Exposure if Missing |
|---|---|---|
| AI Owner Registry | Live, updatable list | Unowned risks, failed accountability |
| Approval Sign-offs | Timestamped, tied to role and system | Delays, blame-shifting under pressure |
| Exception Handling | Root cause and remedial action records | Patterned failures, compounded damage |
| Review Scheduling | Documented checks with a responsible party | Untracked bias, stale reviews |
| Legal Integration | Logs mapped to each regulation | Noncompliance penalties, missed signals |
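The table can double as a checklist. The sketch below encodes its five functions as required evidence fields and reports, per system, which ones are missing and the exposure the table associates with each gap. The field names and the registry’s dict layout are hypothetical; only the exposure descriptions come from the table.

```python
# Evidence fields (hypothetical names) mapped to the exposure listed in the table.
REQUIRED_EVIDENCE = {
    "owner": "Unowned risks, failed accountability",
    "approvals": "Delays, blame-shifting under pressure",
    "exception_handling": "Patterned failures, compounded damage",
    "review_schedule": "Untracked bias, stale reviews",
    "legal_mapping": "Noncompliance penalties, missed signals",
}


def evidence_gaps(registry):
    """For each system, map missing evidence fields to the exposure they leave.

    `registry` maps system_id -> dict of evidence fields; fields that are
    absent or empty count as missing. Systems with no gaps are omitted.
    """
    gaps = {}
    for system_id, evidence in registry.items():
        missing = {k: v for k, v in REQUIRED_EVIDENCE.items() if not evidence.get(k)}
        if missing:
            gaps[system_id] = missing
    return gaps
```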

What Practical Threats Multiply When Responsible AI Use Lacks Systematic Documentation?

Gaps in documentation don’t just create compliance headaches—they invite costly, cascading risk. In the past year alone, several high-profile enforcement actions began not with a breach, but with “routine” requests for digital logs that organisations couldn’t immediately produce. These moments—whether in a regulator’s office, a courtroom, or a major client’s due diligence session—expose fragility in supply chains, legal posture, and executive credibility.

- Regulatory fines can escalate when a single approval or exception record is missing, and the exposure multiplies across regimes such as GDPR and DORA.

- Commercial opportunities slip away as vendors and clients ask for pre-audit access to your AI logs; if you can’t produce them, the deal passes you by before negotiations even start.

- Litigation hazards compound when missing ownership records shift legal risk onto the enterprise as a whole rather than onto the specific actors or processes involved.

- Incident response delays become the rule rather than the exception when no single person is mapped to a live decision, making root-cause mitigation nearly impossible.

- Due diligence failures during mergers and investments are increasing, because buyers and investors now ask for evidence of ongoing responsible AI practice, not just policy.

Most companies break not from a single malicious exploit, but from failing to prove—in the moment—that operational discipline exists beyond policy slides.

The Traps Hidden in Paper-Only Compliance

Many executives underestimate the exposure until forced to find records for a “trivial” incident, only to discover holes that slow remediation and increase outside scrutiny. The time to engineer resilience is long before an incident or audit—reactive scrambling is now viewed as operational immaturity.

How Can Your Team Engineer a Responsible AI Use Regime That’s Audit-Ready by Default?

To become audit-ready is to embed discipline, automation, and role-driven evidence into daily operations. This starts with mapping every AI asset, ensuring every lifecycle event (approval, exception, review) is assigned, checked, and exportable. The best defence is a living workflow—automated sign-offs, live dashboards, pre-assigned reviews, and real-time incident tracking—that leaves nowhere for future risk to hide.

- Centralised platform integration: All assets, owners, and workflows unified and visible, reducing errant systems and spreadsheet sprawl.

- Automated, irreversible approval and review processes: No manual workarounds, no undetectable erasures.

- Exception-to-resolution chains: Each anomaly is assigned a resolver and tracked until closed, with proof available at any inspection point.

- Regularly scheduled reviews with double-signature accountability: Performance, fairness, and risk monitoring on firm timelines, each review logged and accessible.

- Iterative training and improvement protocols: Policy isn’t frozen—it adapts with each review, incident, or regulatory update, embedding continuous learning into the compliance DNA.
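An exception-to-resolution chain can be enforced as a small state machine: a ticket must be assigned before it can be resolved, needs a recorded corrective action to be resolved, and needs a second person to close it. The `ExceptionTicket` class, its states, and the rule that the closer must differ from the resolver are assumptions for illustration (the last loosely mirrors the double-signature idea above), not a requirement stated by ISO 42001.

```python
# Permitted transitions; "resolved" may go back to "assigned" if a fix does not hold.
ALLOWED = {
    "open": {"assigned"},
    "assigned": {"resolved"},
    "resolved": {"closed", "assigned"},
    "closed": set(),
}


class ExceptionTicket:
    """Hypothetical exception ticket that cannot skip steps or close without evidence."""

    def __init__(self, ticket_id, description):
        self.ticket_id = ticket_id
        self.description = description
        self.state = "open"
        self.resolver = None
        self.corrective_action = None
        self.history = [("open", None)]  # (state, actor) pairs, in order

    def _move(self, new_state, actor):
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"{self.ticket_id}: cannot go from {self.state} to {new_state}")
        self.state = new_state
        self.history.append((new_state, actor))

    def assign(self, resolver):
        self._move("assigned", resolver)
        self.resolver = resolver

    def resolve(self, corrective_action):
        if not corrective_action.strip():
            raise ValueError("record the corrective action before resolving")
        self._move("resolved", self.resolver)
        self.corrective_action = corrective_action

    def close(self, reviewer):
        if reviewer == self.resolver:
            raise ValueError("closure needs a second person, not the resolver")
        self._move("closed", reviewer)
```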

Platforms like ISMS.online are designed for this audit-first reality, converting abstract compliance into a tangible, living asset. The end goal isn’t just passing a test—it’s ensuring your next leadership conversation is about operational confidence, not post-mortem excuses.

Excellence in discipline is measured not by the absence of incidents, but by readiness and ownership evidenced at every review: automatic, not an afterthought.

Building Audit-Readiness into Every Step

Live documentation is the new foundation for trust—internally, with stakeholders, and in front of any regulator. Audit-readiness comes from assembling automation, ownership, and accountability so that proof is always one click away.

What Are the Consequences of Failing to Prove Responsible AI Use When Audited?

A missing trail is more damaging than a single poor output. Organisations that can’t surface digital proof promptly are increasingly treated as non-compliant by regulators, partners, and courts. Operational inertia accelerates losses: fines, delayed deals, and reputational hits multiply when a company relies on patchwork or after-the-fact explanations.

- Direct financial penalties under GDPR, DORA, or sector-specific laws, levied for failing to produce required records rather than for bad outcomes.

- Weaker positions in court, where claimants gain ground when evidence of responsible AI practice isn’t available on demand.

- Erosion of credibility with clients and partners: once public, documentation gaps create a risk halo that repels new contracts and lingers for months or years.

- Sluggish or suspended deals in due diligence reviews, driven by gaps that suggest broader weaknesses in governance and control.

- Multiplying remediation and investigation costs when records must be reconstructed or gathered from fragmented sources.

Studies suggest that firms that automate digital evidence for AI controls reduce incident costs by more than a third and recover operational velocity faster after mistakes or investigations. In modern compliance, a lack of evidence is a governance failure in its own right.

What Forms of Evidence Satisfy Regulators During a Responsible AI Audit?

The audit isn’t won on theory or static policy; it’s settled by the digital records your team can export without assembling them by hand. Winning organisations provide:

- End-to-end workflow visibility: Digital maps showing who owns each AI system and every action taken from deployment to override.

- Immutable logs: Time-stamped, role-assigned sign-offs and exception records that cannot be tampered with or overwritten.

- Comprehensive incident and response logs: Detailed chains from anomaly to resolution, with corrective actions visibly mapped and each update recorded.

- Proactive monitoring records: Lists of scheduled reviews and substantiation of their findings, linked to each system and owner.

- Stakeholder engagement history: Documentation of concerns, feedback, and actions—your “record of response.”

- Continuous improvement records: Incidents, audits, or market changes driving real-time policy and process adaptation.

ISMS.online transforms this obligation into a daily practice by integrating all necessary logs, owners, workflows, and improvement cycles into a single, accessible system.

When every record is structured for audit, governance moves from a pressure point to a competitive strength—proof meets preparedness.

What’s the Fastest Way to Surface Audit-Ready Evidence?

Invest in centralised, automated compliance management. Platforms providing prebuilt evidence chains, digital signoffs, and workflow export make it possible to respond to any auditor’s request—before the pressure rises, and before operational confidence is shaken.

Which Emerging Best Practices Strengthen AI Control Discipline and Audit Agility?

The most resilient organisations are those automating owner assignment, digital sign-off, and live evidence trails. They make audit-readiness the default, not a scramble. Build on these principles:

- Automate workflow approvals and exception handling, removing the ad hoc, manual steps that create blind spots.

- Demand single-point accountability, tying each role, system, and action to an individual rather than just a functional group.

- Run live dashboards and alerts so that no task, asset, or review can fall through the cracks.

- Embed AI governance in onboarding, training, and response, treating it as an operational expectation rather than an annual hurdle.

- Convert root-cause investigations and exceptions into active process updates that get logged, not just discussed.

- Use platforms such as ISMS.online that map your controls across ISO 42001, GDPR, DORA, NIST, and sector overlays, building resilience as frameworks evolve.

Ready organisations know this: discipline and digital evidence now define trust. Audit trails, not intent, drive market opportunity and regulatory safety alike.

Discipline is invisible until the moment you need it—then, digital evidence reveals a leadership team worthy of trust and a business built for tomorrow.

Lead with readiness: make responsible AI use the untouchable backbone of your brand. Use ISMS.online to lock every proof, link every owner, and stand prepared for every challenge—no matter who’s watching.