Is your approach to responsible AI genuine governance—or just slogans on a policy page?

The difference between a resilient, audit-ready organisation and another casualty of regulatory headlines isn’t intent—it’s traceable execution. Responsible AI has outgrown polite declarations and “ethical” homepages that melt on contact with real risk. ISO 42001 Annex A Control A.9.3 signals a clean break: only objectives that become operational muscle survive modern scrutiny. If your responsible AI platform still depends on ideals you can’t prove—or KPIs that vanish the moment a new product launches—you’re not governing; you’re wishing.

An AI system is only as trustworthy as the objectives you can prove—under audit, in the boardroom, and after a breach.

Until recently, “responsible AI” often meant well-meaning but hollow principles tucked away in employee handbooks. Now, shareholders, regulators, and your own board demand proof: Where do your principles live in code, review cycles, and incident logs? Annex A.9.3 isn’t about grand statements; it’s about tangible alignment between risk, result, and accountability. This section pulls apart what authentic, embedded objectives look like, why most organisations fall short, and how genuine governance means putting every value on a short leash, with evidence at your fingertips.

What does ISO 42001 demand when setting objectives for responsible AI use?

ISO 42001 A.9.3 asks for proof, not faith. To pass, your organisation must turn “responsible AI” from a marketing phrase into a measurable contract. Here’s what that actually looks like in practice:

- Each objective is mapped to a regulatory and ethical obligation: If you say “fair,” “transparent,” or “privacy by design,” you must link the promise directly to a legal or policy anchor—such as GDPR, CCPA, DORA, or your own internal standards. Referencing “best practice” isn’t enough when the rules change quarterly and fines hit seven digits.

- Ownership is explicit: Committees fade, but named owners keep objectives breathing. Every objective gets an owner with a title (compliance lead, data scientist, risk officer), plus a review date and escalation logic for when metrics fall outside tolerance.

- Objectives are measurable: If you can’t tie it to a number, timeline, or audit event, you’re not compliant. This means error rates, audit frequencies, success thresholds, and tolerance margins attached right at the start.

- Evidence is real-time and continuous: Reviews are not annual checkboxes. Automated logs, version-controlled policy changes, incident records, and feedback from both users and auditors become the living pulse of each objective.

- Objectives change with context: You don’t set them once and forget. Every new incident, regulation, or strategic shift triggers a review. Your system adapts—or it collapses when faced with the demands of modern risk.
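As a rough sketch of what those five demands could look like as a record, the Python below models a single objective and reports which required fields are still empty. The class name, field names, and sample values are illustrative assumptions; ISO 42001 does not prescribe any particular data model.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import Optional


@dataclass
class ResponsibleUseObjective:
    """One objective record. Every field name here is hypothetical."""
    name: str
    legal_anchor: Optional[str]        # law, regulation, or internal policy reference
    owner_role: Optional[str]          # a titled role, not a committee
    metric: Optional[str]              # what is measured
    threshold: Optional[float]         # tolerance limit for the metric
    next_review: Optional[date]        # scheduled review date
    escalation_contact: Optional[str]  # role alerted when the threshold is breached


def missing_fields(objective: ResponsibleUseObjective) -> list[str]:
    """Return the names of empty fields, in declaration order."""
    return [
        f.name
        for f in fields(objective)
        if getattr(objective, f.name) in (None, "")
    ]


# A slogan-only objective versus one that carries its own proof points.
weak = ResponsibleUseObjective("Fairness", None, None, None, None, None, None)
robust = ResponsibleUseObjective(
    name="Fairness",
    legal_anchor="Internal AI policy (placeholder reference)",
    owner_role="Risk Officer",
    metric="demographic selection gap",
    threshold=0.03,
    next_review=date(2025, 1, 15),
    escalation_contact="Compliance Lead",
)

print(missing_fields(weak))
print(missing_fields(robust))
```

An empty result from `missing_fields` does not prove the objective is sound. It only shows that nothing obvious is absent before a reviewer looks at it.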

If your objectives disappear at audit time, they were never real in the first place.

Organisations skating by on broad intentions get caught flat-footed—sometimes publicly, and always expensively.

How do robust responsible AI use objectives differ from generic values or policies?

Intentions are easy. Survival isn’t. Robust objectives don’t just sound good—they can be weighed on demand. Here’s the line ISO wants you to draw:

- Objectives are risk-driven: They emerge from incident data, legal requirements, and impact analysis—not as boardroom platitudes, but as answers to who could be harmed, what’s likely to go wrong, and when.

- Objectives materialise in your real tools: They appear on developer checklists, policy dashboards, and system logs—visible and enforced, not lingering as PDF appendices.

- There’s a single point of contact: If something breaks, the chain of responsibility is obvious; there’s a path from a breached objective to a board-level conversation.

- Review isn’t optional: Every objective has rule-driven triggers for review—a schedule (e.g. quarterly), incident-driven resets (e.g. after any drift or external challenge), and automated reminders ensuring nobody ignores new threats.

- Lifecycle coverage is total: Objectives aren’t just written at launch then forgotten. Each one follows products and processes from design and data ingestion, through user feedback, to final decommissioning.

Here’s a table showing the difference in the wild:

| Policy Layer | Weak Example | Robust A.9.3 Example |
|---|---|---|
| Statement only | “We support fairness.” | “Keep demographic selection variance <3%; reviewed each quarter, escalated at incident.” |
| Ownerless value | “Privacy matters to us.” | “All deletion requests completed inside 30 days. Owner: Privacy Lead.” |
| No enforcement/proof | “We minimise bias.” | “Model output disparity ≤2.5%. Exception triggers retrain and executive alert.” |
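A target like the fairness row only holds up if the number behind it is computed the same way every time. The sketch below assumes "demographic selection variance" means the gap between the highest and lowest group selection rates. That reading, the function names, and the sample data are assumptions made for illustration, and your own definition may differ.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs. Returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def selection_gap(decisions):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(decisions)
    if not rates:
        raise ValueError("no decisions to evaluate")
    return max(rates.values()) - min(rates.values())


def breaches_threshold(decisions, threshold=0.03):
    """The objective is 'gap < 3%', so a gap at or above the threshold is a breach."""
    return selection_gap(decisions) >= threshold


# Hypothetical sample: group A selected 50 of 100, group B selected 48 of 100.
sample = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 48 + [("B", False)] * 52
)

print(round(selection_gap(sample), 4))
print(breaches_threshold(sample))
```

Storing the exact input set with each result would let an auditor reproduce the figure instead of taking it on trust.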

The only responsible AI is a process you can check, dispute, and improve—without chasing ghosts.

How do you build and integrate quantifiable responsible AI objectives?

If your objectives can’t be dissected in front of an outsider, you’re exposed. Here’s a no-nonsense workflow for building and embedding objectives that hold up—technically, legally, and culturally:

1. Define objectives from real risk and stakeholder input.

Start with everything that matters: regulatory mandates, customer needs, and threat models. Incident logs and audit findings feed new objectives; policies follow danger, not the other way around.

2. Apply the SMART model, surgically.

Replace “Reduce model bias” with “Keep recommendation disparity for all groups below 3%, checked quarterly.” Fuse values to numbers, not dreams.

3. Embed in your operational systems.

Don’t just write them—map each objective into a control: a step in training, a column in system logs, a widget in monitoring dashboards. Visibility is everything.

4. Name a real owner and escalation path.

Attach every objective to a living person or formal role, not a department. If an owner changes (vacation, churn), trigger automated reassignment and review.

5. Tie to KPIs, operational workflows, and corrective action.

When metrics trip a wire, actions fire: a review, a model retrain, or an incident response. Don’t just record; remediate.

Example step chain:

| Step | Detail |
|---|---|
| Map expectations | Link to external rules (e.g. GDPR, NYDFS), internal risks |
| Set metric | “User output explanations present ≥98%” |
| Assign owner | “Responsible: Head of Data & Ethics, monthly review” |
| Review schedule | “Automated post-model-release, min twice yearly” |
| Evidence chain | “Logs filed and versioned in audit repository” |
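The step chain above can be sketched as a small rule that turns a metric reading into named actions. The metric and owner come from the table; the `MetricRule` class, the `actions_for` function, and the action labels are hypothetical and stand in for whatever workflow tooling you actually use.

```python
from dataclasses import dataclass, field


@dataclass
class MetricRule:
    objective: str
    owner_role: str
    limit: float
    higher_is_better: bool  # True for coverage-style metrics, False for gap-style metrics
    actions: list[str] = field(default_factory=list)


def actions_for(rule: MetricRule, observed: float) -> list[str]:
    """Return the actions to fire for this reading, or an empty list if within tolerance."""
    if rule.higher_is_better:
        breached = observed < rule.limit
    else:
        breached = observed > rule.limit
    if not breached:
        return []
    return [f"{action} -> {rule.owner_role}" for action in rule.actions]


explanation_rule = MetricRule(
    objective="User output explanations present",
    owner_role="Head of Data & Ethics",
    limit=0.98,
    higher_is_better=True,
    actions=["open review", "notify owner"],
)

print(actions_for(explanation_rule, 0.99))
print(actions_for(explanation_rule, 0.95))
```

Keeping the actions on the rule itself means a breach cannot be recorded without also producing a task for a named role.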

Objectives without a chain of evidence, ownership, and action are front-page liabilities.

What practical templates enable consistent and auditable responsible AI objectives?

Templates do the work policy pages never will. Here’s a sample frame you can clone—or adapt for each new risk, product, or regulation:

Responsible AI Objective Template

- Value: Fairness

- Objective: Maintain <4% outcome disparity across age/gender in all model outputs.

- KPI: Disparity monitored monthly; a gap above the threshold triggers a model review.

- Owner: AI Fairness Lead

- Review: After each launch, automatically after any incident or complaint, and at least every 6 months.

- Evidence Required: Bias audit logs, executive sign-off.

Checklist for Responsible AI Objectives

- [ ] Can you tie this to a system, owner, cycle, and evidence trail?

- [ ] Measurable KPI placed on live dashboard/report?

- [ ] Recurring review and update, automatically calendared?

- [ ] Escalation logic for exceeded thresholds?

- [ ] Traceable change log and link to incidents/updates?

- [ ] Filled in before every new model goes live?

After a breach or incident, these templates mean you can respond with records—not with “values statements” from your brand team.

Templates aren’t bureaucracy. They’re insurance against tomorrow’s headlines.

How do responsible use objectives connect to your compliance posture and risk strategy?

ISO 42001 A.9.3 sits at the crossroads of hype and enforcement. Most global regulations are sprinting towards the same expectation: objectives with a living evidence trail and hard-coded lines from board down to developer. Your compliance isn’t a patchwork—it’s a living, breathing risk-control mechanism that ties technical controls to operational and cultural levers.

- Technical: Encryption deployed? It’s tied to a “security” objective, logged, and checked for gaps monthly.

- Operational: Bias reduction is baked into employee training, incident playbooks, and every user-facing output; privacy incident response is covered with a named owner and log.

- Cultural: Leadership reinforces real objectives in meetings, memos, and budgets. Programmers know the why—not just the what—of every compliance step.

When the next incident hits, you want the answer to be, “Here’s the objective. Here’s the audit trail. Here’s the fix. We don’t hope we’re responsible; we know.”

Regulators won’t read your mission statement. They’ll ask for logs, dashboards, and the evidence behind each objective.

What ensures responsible use measurement and auditing stand up to real-world pressure?

When blame is flying and regulators want answers, trust lives—or dies—on the proof you can surface instantly:

- Every objective is matched with live metrics: Don’t rely on “vanity numbers” you can’t source. Use real-time dashboards and random sample audits instead. How quickly you can surface a figure matters more than how many figures you collect.

- KPIs aren’t for the compliance department—they’re surfaced at board level: Metrics make the difference when executives are directly accountable. Monthly or quarterly reviews bring objectives into executive and operational light.

- Audit inside and out: Internal tests catch slip-ups; external audits find the blind spots that culture and comfort let slide.

- Incidents force learning: Each event runs through a trace: objective > process > outcome > fix. The cycle becomes a muscle, not a manual.

Sample metrics:

| Metric Type | Measurement Example | Audit Frequency |
|---|---|---|
| Fairness | Demographic selection gap <3% | Monthly, owner signs |
| Transparency | User explanation coverage >98% | Biannual, spot-check |
| Privacy | 100% data deletion in 30 days | Monthly |
| Incident response | Mitigation within 14 days | Per-incident review |
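The privacy row is a useful example of a metric that sounds simple and hides decisions: does a request finished on day 30 count, and what about requests still open? The sketch below makes one set of choices explicit. The function name, the tuple layout, and the sample dates are assumptions for illustration only.

```python
from datetime import date, timedelta


def deletion_sla_report(requests, today, sla_days=30):
    """requests: list of (received, completed_or_None) date pairs.

    Returns (on_time_rate, late_count). Assumptions in this sketch:
    - a request completed on or before received + sla_days is on time;
    - an open request past its deadline counts as late;
    - an open request still inside its deadline is not counted yet.
    """
    on_time = late = 0
    for received, completed in requests:
        deadline = received + timedelta(days=sla_days)
        if completed is not None:
            if completed <= deadline:
                on_time += 1
            else:
                late += 1
        elif today > deadline:
            late += 1
    due = on_time + late
    rate = on_time / due if due else 1.0
    return rate, late


# Hypothetical request log.
sample_requests = [
    (date(2025, 1, 1), date(2025, 1, 20)),   # completed inside 30 days
    (date(2025, 1, 1), date(2025, 2, 15)),   # completed late
    (date(2025, 1, 10), None),               # open and past deadline
    (date(2025, 2, 20), None),               # open, still inside deadline
]
as_of = date(2025, 3, 1)

rate, late_count = deletion_sla_report(sample_requests, as_of)
print(f"on-time rate: {rate:.1%}, late: {late_count}")
```

Writing the counting rules into the function's docstring gives the owner and the auditor the same definition to argue about.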

If your ‘responsible AI’ is hard to prove, it’s a liability. Evidence makes trust operational.

What forces responsible use objectives to deliver—despite pushback, turnover, or system shocks?

The best objectives survive stress. A flash-in-the-pan policy or a spreadsheet lost in a network folder isn’t good enough. Real resilience means:

- Standardised, enforced templates: The system won’t go live unless objectives are mapped, assigned, and evidence-tracked.

- Roles, not names: Assign to titled owners; when names change, responsibility doesn’t vanish in the handover.

- No silos: Objectives, audit evidence, and change notes are managed in an integrated, discoverable platform. No “shadow practices”—everyone agrees on what’s being measured and why.

- Automated reminders: Reviews are set at the system level. Missed updates are flagged immediately—no more “fire and forget.”

- Executive sponsorship: Leadership owns both wins and failures, makes evidence-based decisions, and ensures resource allocation always matches objective importance.
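Review reminders set at the system level can be as simple as comparing each objective's last review date against its cycle. The following is a minimal sketch under stated assumptions: the day counts per cycle, the dictionary keys, and the register entries are all hypothetical.

```python
from datetime import date, timedelta

# Assumed day counts for each review cycle; adjust to your own calendar rules.
REVIEW_INTERVAL_DAYS = {"monthly": 30, "quarterly": 91, "semi-annual": 182}


def overdue_reviews(objectives, today):
    """Return (name, days_overdue) for every objective past its review date,
    most overdue first."""
    flagged = []
    for objective in objectives:
        interval = timedelta(days=REVIEW_INTERVAL_DAYS[objective["cycle"]])
        due = objective["last_review"] + interval
        if today > due:
            flagged.append((objective["name"], (today - due).days))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical register entries.
register = [
    {"name": "Fairness parity gap", "cycle": "monthly", "last_review": date(2025, 1, 1)},
    {"name": "Deletion requests", "cycle": "quarterly", "last_review": date(2025, 1, 1)},
]

print(overdue_reviews(register, date(2025, 3, 1)))
```

Running a check like this on a schedule, and routing the output to the owning role, is one way to keep a missed review from going unnoticed.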

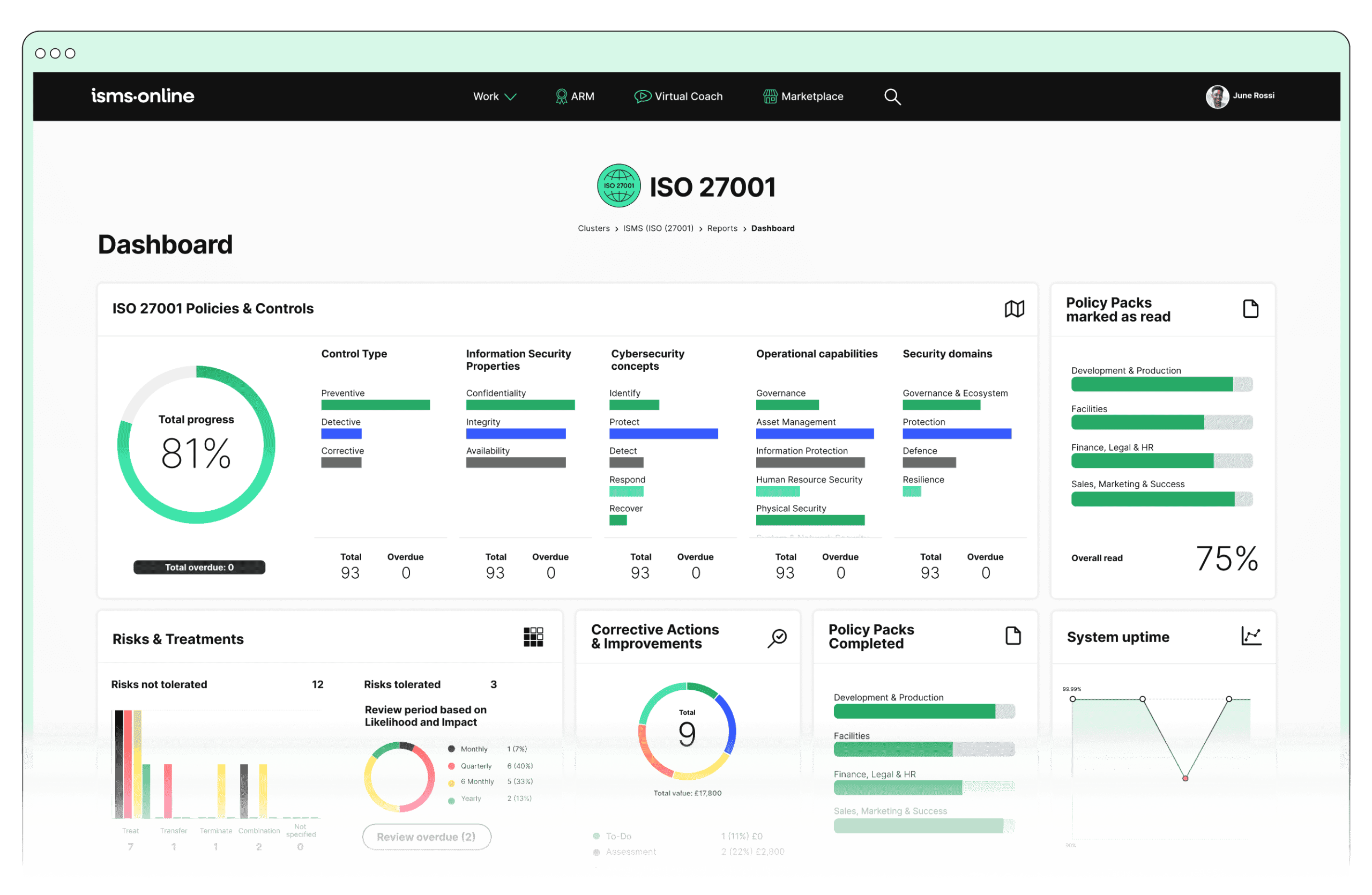

Gain evidence-driven, adaptable responsible AI governance with ISMS.online

Your responsible AI credentials aren’t judged by hopeful slogans—they’re proven, in stressful moments, through documented evidence, repeatable processes, and traceable improvements. ISMS.online was built for organisations that need to show the difference between “responsible” as a feeling and as a system. Our platform provides:

- Templates that enforce best practices, not as busywork but as live controls connecting values to metrics, owners, reviews, and improvement logs.

- Automated reminders and review scheduling to keep objectives from drifting into obscurity as business priorities shift.

- Evidence repositories and escalation pathways that make proving compliance a one-click operation instead of a last-minute scramble.

- Real-time visibility into gaps, overdue reviews, and changes, so your risk, compliance, and executive teams work from the same playbook.

When external scrutiny hits—as it will for every responsible AI programme—you need proof that stands up to the world’s toughest boards and regulators. With ISMS.online, you put audit-ready, living evidence of responsible use objectives at the centre. That’s not hope—it’s operational strength.

Frequently Asked Questions

What guarantees a responsible use objective under ISO 42001 Annex A.9.3 survives real inspection?

A responsible use objective that holds up under intense regulatory, legal, or audit questioning is never aspirational or ambiguous. It is a well-documented, live commitment that tracks directly to a known law, risk, or contractual demand and is always anchored in ongoing business reality—not a policy binder. ISO 42001 Annex A.9.3 isn’t about intentions; it’s about operational, evidence-backed targets that anyone can verify in minutes.

To withstand scrutiny, a robust responsible use objective must deliver:

- Direct anchoring to law, risk, or policy: Each is mapped to a clear business risk, regulatory clause, or specific company exposure. If it doesn’t reference a live hazard or legal mandate, it’s decorative, not defensive.

- Measurable indicators, not vague aims: Track with explicit metrics and thresholds: “Fulfil 100% of access requests within 25 days” outclasses “handle data requests quickly.”

- Named ownership, not floating accountability: Every objective is tied to an accountable business role, not just a shifting team—ownership is traceable today if the investigator calls.

- Automated review and escalation: The lifecycle—review dates, triggers, and escalation—is embedded in systems, so objectives never get stale or lost during staff turnover or regulatory waves.

- Evidence at your fingertips: You can access and present supporting proof—logs, review histories, live dashboards—without hunting through emails or folders.

Objective evidence is the only shield in an audit. Intention is a breach waiting to happen.

How do you quickly test a responsible use objective’s resilience?

- Does it cite a living requirement—regulatory, contractual, or risk-based?

- Is the metric clear, tracked, and in use?

- Who owns it, and is someone covering it now?

- Is there an escalation or review route that works even if staff change?

- Can documentation—evidence, reviews, outcomes—be presented within 60 seconds?

ISMS.online strengthens these guarantees: it links risk, regulation, and objectives, automates accountability, and serves evidence instantly, ensuring no objective is left adrift or obsolete.

How do you set and maintain responsible AI objectives so they stand up to ISO 42001 audit or inquiry?

No responsible AI objective should originate in a vacuum or finish forgotten. Requirements start with a tailored risk and context analysis: where can your AI fail the user, the public, a regulator, or the business? ISO 42001 expects that every responsible use objective is defined, logged, tracked, and updated in the operational system, not left on a policy shelf.

- Pinpoint the risk intersection: Privacy breaches, unfair outcomes, transparency failures, safety concerns—identify the risk, map laws like GDPR or DORA, and capture stakeholder priorities.

- Build SMART objectives, not policy platitudes: Specific, Measurable, Achievable, Relevant, Time-bound. “Log explanations for 98% of critical AI decisions within two business days” beats any generic commitment to “explainability.”

- Centralise control in a living compliance system: Objectives and metrics go where the work is managed—live dashboards or ISMS.online. Status and audit trails are updated in real time, not manually.

- Automate handoffs, reviews, and escalations: Assignment follows roles, not names. When staff leave, objectives remain active and are re-allocated. Review and escalation reminders are system-triggered, not left to post-it notes or emails.

Any responsible use objective your team can’t track, update, and prove right now isn’t protection. It’s risk, disguised.

What distinguishes a defensible objective record from a paper trail?

- All objectives are versioned and mapped to an up-to-date risk register and documented regulation.

- Metrics, ownership, and audit events are updated automatically and visible to leadership.

- Reviews and escalations trigger as soon as thresholds are breached or contexts shift.

- Evidence—training, incidents, corrective actions—is digitally centralised, never scattered.

ISMS.online operationalises all of these: objectives “live” as dynamic records, always ready for board inquiries, regulatory inspection, or external audits.

What do practical templates for ISO 42001 A.9.3 responsible use objectives look like in the field?

Templates transform theory into daily action. A robust responsible use objective template names not just the value and metric, but the responsible party, evidence, frequency of review, and escalation channel. This clarity is what auditors and boards count on.

Working template for an ISO 42001 A.9.3 responsible use objective

| Value | Objective | Metric | Role Owner | Review Cycle | Evidence |
|---|---|---|---|---|---|
| Fairness | “Maintain prediction parity gap ≤1.5% across genders” | “≤1.5% gap” | Data Science Lead | Quarterly + incident | Metrics dashboard |
| Privacy | “Fulfil 99% of data deletion requests within 21 days” | “≥99% on time” | Data Protection Officer | Monthly | Deletion logs |
| Transparency | “Record explanation logs for 96% of flagged outputs” | “≥96% explained” | AI Product Owner | Semi-annual, flagged | Explanation registry |

No rogue spreadsheet or manager’s memory holds up in compliance. Templates force discipline, automate proof, and survive staff shifts and regulator reviews alike.

Rapid-fire review: Does your objective pass muster?

- Does it tie directly to risk, legal, or contractual needs?

- Is ownership role-based and current even after turnover?

- Does the metric show live, rolling results—not stale signoff?

- Is all evidence versioned, centrally stored, and instantly accessible?

With ISMS.online, these templates are already woven into the system, ready to scale and adapt as your AI and compliance landscape evolves.

In what ways do responsible use objectives under ISO 42001 actively cut down on compliance failures and unseen risk accumulation?

Responsible use objectives, when operationalised, become early warning systems, not after-the-fact scapegoats. Static controls, wishful policies, and loose accountability are where disasters, especially silent ones, breed. ISO 42001 makes proactive measurement and live correction the expectation.

- Closes the intent–operation gap: Metrics flag divergence early. If “data deletion completion” trends below threshold, the system alerts, notifies, and asks for evidence—no auditor ambush.

- Provides continuous audit-readiness: Instead of scrambling to “manufacture” compliance, leadership has live dashboards showing objective status, review logs, and corrective actions—fulfilling GDPR, DORA, or CCPA “demonstration” requirements.

- Ensures responsive escalation and mitigation: Threshold breaches don’t wait for quarterly committee meetings; system cues, assignments, and corrective workflows kick in immediately.

Controls you can’t review, test, or explain are not controls—they’re liability magnets.

What risk explodes without live, operational objectives?

- Gaps only emerge during regulatory review or litigation—by then, mitigation is damage control, not protection.

- Incomplete records or outdated links between risk, objective, and owner leave Boards and CISOs exposed.

- Slow, reactive fixes expose systemic vulnerabilities that harm reputations and bottom lines.

ISMS.online equips your organisation to surface, test, and act on early signals—filling loopholes and shrinking the audit “oh-no” window to zero.

How are measurement, auditability, and responsive updating guaranteed for responsible use objectives under ISO 42001?

Metrics are inert if they’re not fed daily, surfaced when things slip, and instantly reviewed for accuracy and relevance. ISO 42001 demands operationalisation: metrics and evidence must be visible, living components, not archives.

- Live dashboards for metrics and status: Any drift in fairness, privacy, or explanation is visible to all relevant roles—not hidden in monthly PDFs.

- Automated, role-driven reminders and task reassignment: Reviews aren’t skipped because of vacation or resignation—the system leaves no objective unowned or overdue.

- Transparent, traceable audit trails: Auditors or executives can trace each objective from creation to the latest update, including owner shifts, evidence uploads, and review outcomes, without a scavenger hunt.

- Feedback and improvement loops: When a relevant incident is detected or the law changes, updates are logged, rationale is kept, and older records remain linked—helpful for learning and audit defence.
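One way to picture a traceable trail is an append-only history in which every change adds a new revision and earlier revisions stay readable. The sketch below is illustrative only; `Revision`, `ObjectiveHistory`, and the sample values are hypothetical names, and a production system would also need access control and durable storage.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Revision:
    version: int
    changed_at: datetime
    changed_by_role: str
    rationale: str
    values: dict  # full state of the objective at this version


class ObjectiveHistory:
    """Append-only history: updates add revisions and never overwrite old ones."""

    def __init__(self, name: str):
        self.name = name
        self._revisions: list[Revision] = []

    def record(self, changed_by_role: str, rationale: str, **changes) -> Revision:
        # Start from the previous state (if any) and apply only the changed fields.
        previous = self._revisions[-1].values if self._revisions else {}
        revision = Revision(
            version=len(self._revisions) + 1,
            changed_at=datetime.now(timezone.utc),
            changed_by_role=changed_by_role,
            rationale=rationale,
            values={**previous, **changes},
        )
        self._revisions.append(revision)
        return revision

    @property
    def current(self) -> Revision:
        return self._revisions[-1]

    def trail(self):
        """Compact view for an auditor: (version, role, rationale) per revision."""
        return [(r.version, r.changed_by_role, r.rationale) for r in self._revisions]


history = ObjectiveHistory("AI output fairness gap")
history.record("Data Science Lead", "initial target",
               threshold=0.015, owner_role="Data Science Lead")
history.record("Compliance Lead", "owner change after reorganisation",
               owner_role="AI Product Owner")

print(history.current.values)
print(history.trail())
```

Because each revision carries its rationale, the question "why did this threshold or owner change?" can be answered from the record itself.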

Example: Living measurement matrix

| Objective | Metric | Audit/Review Cycle | Trigger for Action |
|---|---|---|---|
| AI output fairness gap | ≤1.5% | Quarterly, complaint | Breach of metric, new law |
| Data deletion fulfilment | 100% within 21 days | Monthly | Failed request, new policy |
| Explanation log capture | ≥96% explained | Semi-annual | Negative user feedback |

When the question is, ‘How did you respond?’—you want a system to show history, not a scramble to explain actions you can’t prove.

ISMS.online integrates these cycles—linking metrics, status, ownership, and audit logs into a seamless, real-time evidence trail.

What structures preserve responsible use objectives through personnel changes and fast-changing business environments?

No resilience, no control. If a responsible use objective falls apart when a DPO leaves or your AI team is reorganised, your compliance system is brittle and audit-unsafe. Robust structures sustain live objectives regardless of human or business turbulence.

- Objectives tied to business function, not individuals: Handovers are automated; role-based assignment means objectives remain owned and active even if staff change overnight.

- Centralised, version-managed, and searchable data: Nothing relies on memory, isolated files, or legacy habits—evidence is stored, versioned, and readable across teams and time.

- System-enforced escalation and review: Automated reminders, escalating deadlines, and review notifications ensure nothing is lost in gaps between teams or during transitions.

- Embedded into onboarding and ongoing education: New staff are instantly made aware of open objectives; knowledge transfer is systematised, not improvised.
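Role-based assignment can be sketched as two separate mappings: objectives point to roles, and roles point to whoever currently holds them. When a role falls vacant, the objective shows up as unowned instead of silently staying attached to someone who has left. The function name, role titles, and placeholder people below are assumptions for illustration.

```python
def resolve_owners(objectives, role_holders):
    """objectives: {objective name: owner role}
    role_holders: {role: current holder, or None if the role is vacant}

    Returns (assigned, unowned), where assigned maps objective -> person and
    unowned lists objectives whose role has no current holder.
    """
    assigned, unowned = {}, []
    for objective, role in objectives.items():
        person = role_holders.get(role)
        if person:
            assigned[objective] = person
        else:
            unowned.append(objective)
    return assigned, unowned


# Hypothetical register: objectives are tied to roles, never to names.
objective_roles = {
    "Deletion requests": "Data Protection Officer",
    "Prediction parity gap": "Data Science Lead",
}
holders = {
    "Data Protection Officer": "A. Example",
    "Data Science Lead": None,  # role vacant after a departure
}

assigned, unowned = resolve_owners(objective_roles, holders)
print(assigned)
print(unowned)

# Filling the role restores ownership without touching the objective itself.
holders["Data Science Lead"] = "B. Example"
print(resolve_owners(objective_roles, holders)[1])
```

The unowned list is the part worth alerting on, since it is exactly the gap a handover tends to leave behind.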

A compliance system that forgets who owns a control, or can’t show its evolution, is already broken.

Because ISMS.online weaves objectives, metrics, proof, and assignment into the operational core, resilience is automatically engineered—so you face regulators, customers, and your board with institutional memory, not excuses.

Give your responsible use objectives operational backbone. With ISMS.online as your foundation, ISO 42001 A.9.3 isn’t just compliance jargon—it’s a living, measurable shield against risk, audit, and reputational harm.